Tutorial 7.6b - Factorial ANOVA (Bayesian)

12 Jan 2018

Overview

Factorial designs are an extension of single factor ANOVA designs in which additional factors are added such that each level of one factor is applied to all levels of the other factor(s) and these combinations are replicated.

For example, we might design an experiment in which the effects of temperature (high vs low) and fertilizer (added vs not added) on the growth rate of seedlings are investigated by growing seedlings under the different temperature and fertilizer combinations.

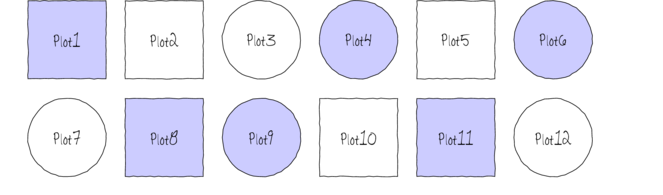

The following diagram depicts a very simple factorial design in which there are two factors (Shape and Color) each with two levels (Shape: square and circle; Color: blue and white) and each combination has 3 replicates.
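As a small illustration, the fully crossed, replicated layout in the diagram can be generated with base R's `expand.grid()`, which returns every combination of the supplied levels. Only the factor and level names come from the diagram. The object name `design` is illustrative.

```r
# Fully crossed 2 x 2 design (Shape x Color) with 3 replicates per combination
design <- expand.grid(
  Shape = c("square", "circle"),
  Color = c("blue", "white"),
  Rep   = 1:3
)
nrow(design)                        # 2 x 2 x 3 = 12 experimental units
table(design$Shape, design$Color)   # each combination appears 3 times
```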

In addition to investigating the impacts of the main factors, factorial designs allow us to investigate whether the effects of one factor are consistent across levels of another factor. For example, is the effect of temperature on growth rate the same for both fertilized and unfertilized seedlings and similarly, does the impact of fertilizer treatment depend on the temperature under which the seedlings are grown?

Arguably, these interactions give more sophisticated insights into the dynamics of the system we are investigating. Hence, we could add additional main effects, such as soil pH, amount of water etc., along with all the two-way (temp:fert, temp:pH, temp:water, etc.), three-way (temp:fert:pH, temp:pH:water, etc.), four-way etc. interactions in order to explore how these various factors interact with one another to affect the response.

However, the more interactions, the more complex the model becomes to specify, compute and interpret - not to mention the rate at which the number of required observations increases.
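The growth in model complexity is easy to quantify. A full factorial in $k$ factors has $2^k - 1$ terms (main effects plus all interactions), and each term must be estimated. The sketch below counts the terms with base R's `terms()`. The helper name `n_terms` is hypothetical, and the factor names are the examples from the text.

```r
# Count the terms (main effects + all interactions) implied by a full factorial formula
n_terms <- function(rhs) length(attr(terms(rhs), "term.labels"))

n_terms(~ temp * fert)                # 2 factors:  2^2 - 1 = 3 terms
n_terms(~ temp * fert * pH)           # 3 factors:  2^3 - 1 = 7 terms
n_terms(~ temp * fert * pH * water)   # 4 factors:  2^4 - 1 = 15 terms
```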

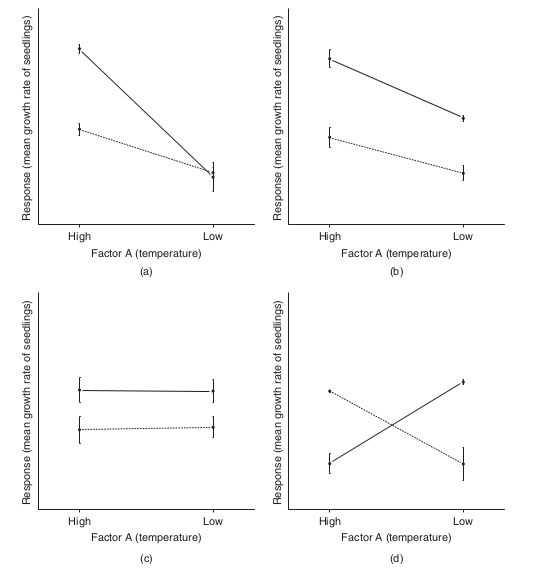

To appreciate the interpretation of interactions, consider the following figure that depicts fictitious two factor (temperature and fertilizer) designs.

It is clear from the top-right figure that whether or not there is an observed effect of adding fertilizer depends on whether we are focused on seedlings grown under high or low temperatures. Fertilizer is only important for seedlings grown under high temperatures. In this case, it is not possible to simply state that there is an effect of fertilizer as it depends on the level of temperature. Similarly, the magnitude of the effect of temperature depends on whether fertilizer has been added or not.

Such interactions are represented by plots in which lines either intersect or converge. The top-left and bottom-left figures both depict parallel lines, which are indicative of no interaction. That is, the effects of temperature are similar for both fertilizer added and controls, and vice versa. Whilst the former displays an effect of both fertilizer and temperature, in the latter only fertilizer is important.

Finally, the bottom-right figure represents a strong interaction that would mask the main effects of temperature and fertilizer (since the nature of the effect of temperature is very different for the different fertilizer treatments and vice versa).
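Numerically, an interaction is a "difference of differences". Compute the simple effect of one factor at each level of the other; if those simple effects differ, the lines are not parallel. The cell means below are invented purely to mimic the top-right scenario, where fertilizer only matters at high temperature.

```r
# Hypothetical cell means (growth rate) for a 2 x 2 temperature x fertilizer design
means <- matrix(c(10, 10,    # low temperature:  control, fertilizer added
                  15, 25),   # high temperature: control, fertilizer added
                nrow = 2, byrow = TRUE,
                dimnames = list(temp = c("low", "high"),
                                fert = c("control", "added")))

# Simple (conditional) effect of fertilizer at each temperature
fert_effect <- means[, "added"] - means[, "control"]
fert_effect                  # low = 0, high = 10

# Interaction contrast: how much the fertilizer effect changes with temperature
interaction_contrast <- unname(fert_effect["high"] - fert_effect["low"])
interaction_contrast         # 10 (zero would mean parallel lines)
```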

In a frequentist framework, factorial designs can consist of:

- entirely of crossed fixed factors (Model I ANOVA - most common) in which conclusions are restricted to the specific combinations of levels selected for the experiment,

- entirely of crossed random factors (Model II ANOVA) or

- a mixture of crossed fixed and random factors (Model III ANOVA).

The tutorial on frequentist factorial ANOVA described procedures used to further investigate models in the presence of significant interactions as well as the complications that arise with linear models fitted to unbalanced designs. Again, these issues largely evaporate in a Bayesian framework. Consequently, we will not really dwell on these complications in this tutorial. At most, we will model some unbalanced data, yet it should be noted that we will not need to make any special adjustments in order to do so.

Linear model

The generic linear model is presented here purely for revisory purposes. If it is unfamiliar to you, or you are unsure about what parameters are to be estimated and tested, you are strongly advised to review the tutorial on frequentist factorial ANOVA.

The linear models for two- and three-factor designs are:
$$y_{ijk}=\mu+\alpha_i + \beta_{j} + (\alpha\beta)_{ij} + \varepsilon_{ijk}$$
$$y_{ijkl}=\mu+\alpha_i + \beta_{j} + \gamma_{k} + (\alpha\beta)_{ij} + (\alpha\gamma)_{ik} + (\beta\gamma)_{jk} + (\alpha\beta\gamma)_{ijk} + \varepsilon_{ijkl}$$
where $\mu$ is the overall mean, $\alpha$ is the effect of Factor A, $\beta$ is the effect of Factor B, $\gamma$ is the effect of Factor C, the bracketed terms (such as $(\alpha\beta)_{ij}$) are the interaction effects and $\varepsilon$ is the random unexplained or residual component.
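As written, the two-factor model has more parameters than cell means. R makes it estimable with its default treatment contrasts. These set the effect of the first level of each factor, and any interaction involving a first level, to zero. The sketch below assumes the 2×3 layout used in the scenario that follows. It shows the six resulting columns: the intercept (the a1b1 mean), $\alpha_2$, $\beta_2$, $\beta_3$, $(\alpha\beta)_{22}$ and $(\alpha\beta)_{23}$.

```r
# One row per cell of a 2 x 3 design (Factor A: a1, a2; Factor B: b1, b2, b3)
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))

# Treatment-contrast model matrix: 6 estimable parameters for 6 cell means
Xcell <- model.matrix(~ A * B, data = cells)
colnames(Xcell)
Xcell   # row a2b3 switches on: intercept, Aa2, Bb3 and Aa2:Bb3
```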

Scenario and Data

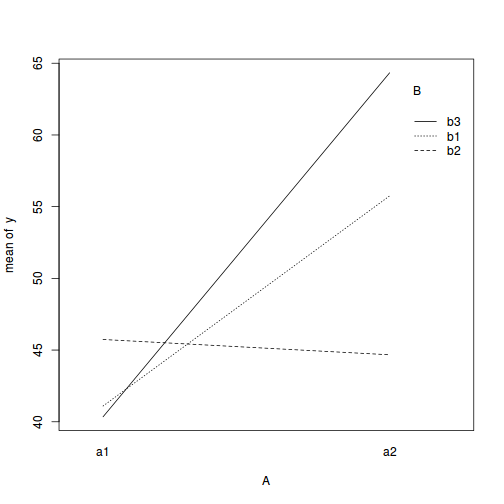

Imagine we have designed an experiment in which we measured the response ($y$) under combinations of two different potential influences (Factor A: levels $a1$ and $a2$; and Factor B: levels $b1$, $b2$ and $b3$), each combination replicated 10 times ($n=10$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

- the sample size per treatment=10

- factor A with 2 levels

- factor B with 3 levels

- the 6 effect parameters are 40, 15, 5, 0, -15 and 10 ($\mu_{a1b1}=40$, $\mu_{a2b1}=40+15=55$, $\mu_{a1b2}=40+5=45$, $\mu_{a1b3}=40+0=40$, $\mu_{a2b2}=40+15+5-15=45$ and $\mu_{a2b3}=40+15+0+10=65$)

- the residuals are drawn from a normal distribution with a mean of 0 and standard deviation of 3 ($\sigma^2=9$)
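The cell means listed above follow from multiplying the treatment-contrast model matrix by the effect vector. Each cell mean is the sum of the effects "switched on" in its row. The sketch below covers the noise-free part of the simulation only; variable names are illustrative.

```r
# Effect parameters in model-matrix column order
eff <- c(40, 15, 5, 0, -15, 10)

# Cells in the order a1b1, a2b1, a1b2, a2b2, a1b3, a2b3
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))
XX <- model.matrix(~ A * B, data = cells)

cell_means <- as.vector(XX %*% eff)
data.frame(cells, mu = cell_means)   # 40, 55, 45, 45, 40, 65
```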

set.seed(1)
nA <- 2  #number of levels of A
nB <- 3  #number of levels of B
nsample <- 10  #number of reps in each
A <- gl(nA, 1, nA, lab = paste("a", 1:nA, sep = ""))
B <- gl(nB, 1, nB, lab = paste("b", 1:nB, sep = ""))
data <- expand.grid(A = A, B = B, n = 1:nsample)
X <- model.matrix(~A * B, data = data)
eff <- c(40, 15, 5, 0, -15, 10)
sigma <- 3  #residual standard deviation
n <- nrow(data)
eps <- rnorm(n, 0, sigma)  #residuals
data$y <- as.numeric(X %*% eff + eps)
head(data)  #print out the first six rows of the data set

   A  B n        y
1 a1 b1 1 38.12064
2 a2 b1 1 55.55093
3 a1 b2 1 42.49311
4 a2 b2 1 49.78584
5 a1 b3 1 40.98852
6 a2 b3 1 62.53859

with(data, interaction.plot(A, B, y))

## ALTERNATIVELY, we could supply the population means and get the effect
## parameters from these. To correspond to the model matrix, enter the
## population means in the order of: a1b1, a2b1, a1b2, a2b2, a1b3, a2b3
pop.means <- as.matrix(c(40, 55, 45, 45, 40, 65))
## Generate a minimum model matrix for the effects
XX <- model.matrix(~A * B, expand.grid(A = factor(1:2), B = factor(1:3)))
## Use the solve() function to solve what are effectively simultaneous equations
(eff <- as.vector(solve(XX, pop.means)))

[1] 40 15 5 0 -15 10

data$y <- as.numeric(X %*% eff + eps)

With these sorts of data, we are primarily interested in investigating whether there is a relationship between the continuous response variable and the treatments (Factors A and B, alone or in combination). Do the treatments affect the response?

Assumptions

- All of the observations are independent - this must be addressed at the design and collection stages. Importantly, to be considered independent replicates, the replicates must be made at the same scale at which the treatment is applied. For example, if the experiment involves subjecting organisms housed in tanks to different water temperatures, then the unit of replication is the individual tanks not the individual organisms in the tanks. The individuals in a tank are strictly not independent with respect to the treatment.

- The response variable (and thus the residuals) should be normally distributed for each sampled population (combination of factors). Boxplots of each treatment combination are useful for diagnosing major issues with normality.

- The response variable should be equally varied (variance should not be related to mean as these are supposed to be estimated separately) for each combination of treatments. Again, boxplots are useful.

Exploratory data analysis

Normality and Homogeneity of variance

boxplot(y ~ A * B, data)

# OR via ggplot2
library(ggplot2)
ggplot(data, aes(y = y, x = A, fill = B)) + geom_boxplot()

Conclusions:

- there is no evidence that the response variable is consistently non-normal across all populations - each boxplot is approximately symmetrical

- there is no evidence that variance (as estimated by the height of the boxplots) differs between the six populations. More importantly, there is no evidence of a relationship between mean and variance - the height of boxplots does not increase with increasing position along the y-axis. Hence there is no evidence of non-homogeneity.

- had there been obvious violations, they could be addressed by transforming the scale of the response variable (to address normality etc.). Note transformations should be applied to the entire response variable (not just those populations that are skewed).
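The boxplot checks can be complemented by a quick numeric summary of each cell's mean and standard deviation. A mean-variance relationship would show up as a strong positive correlation between the two. The sketch regenerates the simulated data with the same seed and settings as the generation code above, so it should reproduce the same values. The names `cell_stats` and `X` are illustrative.

```r
# Regenerate the simulated data (same seed and settings as above)
set.seed(1)
data <- expand.grid(A = gl(2, 1, labels = c("a1", "a2")),
                    B = gl(3, 1, labels = c("b1", "b2", "b3")),
                    n = 1:10)
X <- model.matrix(~ A * B, data = data)
data$y <- as.numeric(X %*% c(40, 15, 5, 0, -15, 10) + rnorm(nrow(data), 0, 3))

# Per-cell mean and standard deviation
cell_stats <- aggregate(y ~ A + B, data = data,
                        FUN = function(v) c(mean = mean(v), sd = sd(v)))
cell_stats <- do.call(data.frame, cell_stats)   # flatten the matrix column y
cell_stats

# Correlation between cell means and cell SDs (strongly positive = mean-variance link)
cor(cell_stats$y.mean, cell_stats$y.sd)
```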

Model fitting or statistical analysis

It is possible to build all sorts of specific comparisons (contrasts) into a JAGS or STAN model statement. Likewise, it is possible to define specific main effects type tests within the model statement. However, for consistency across each of the routines, we will just define the minimum required model and perform all other posterior derivatives (such as main effects tests and contrasts) from the MCMC samples using R. This way, the techniques can be applied no matter which of the Bayesian modelling routines (JAGS, STAN, MCMCpack etc.) were used.
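Deriving quantities from the MCMC samples mostly comes down to one step. Each draw of the coefficient vector is converted into a draw of the six cell means by multiplying by a cell-level model matrix. After that, any comparison is arithmetic on columns.

This sketch uses a hypothetical helper, `cell_means_from_draws()`, and feeds it independent normal stand-in draws rather than real MCMC output. With a fitted model you would pass the coefficient columns of the samples instead. For MCMCpack that would be the first six columns of `as.matrix(data.mcmcpack)`, since the last column is `sigma2`.

```r
# Convert coefficient draws (one row per draw, treatment-contrast column order)
# into draws of each cell mean
cell_means_from_draws <- function(draws, cells) {
  Xcell <- model.matrix(~ A * B, data = cells)
  stopifnot(ncol(draws) == ncol(Xcell))
  mu <- draws %*% t(Xcell)                   # draws x cells
  colnames(mu) <- paste0(cells$A, cells$B)
  mu
}

cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))

# Stand-in "posterior": independent normal draws centred on the simulated effects.
# NOT real MCMC output; replace with the coefficient columns of your samples.
set.seed(123)
eff <- c(40, 15, 5, 0, -15, 10)
draws <- sapply(eff, function(m) rnorm(4000, m, 1))

mu <- cell_means_from_draws(draws, cells)

# Effect of A (a2 - a1) within each level of B: median and 95% interval
effA <- cbind(b1 = mu[, "a2b1"] - mu[, "a1b1"],
              b2 = mu[, "a2b2"] - mu[, "a1b2"],
              b3 = mu[, "a2b3"] - mu[, "a1b3"])
t(apply(effA, 2, quantile, probs = c(0.5, 0.025, 0.975)))
```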

The observed responses ($y_i$) are assumed to be drawn from a normal distribution with a given mean ($\mu$) and standard deviation ($\sigma$). The expected values ($\mu$) are themselves determined by the linear predictor ($\mathbf{X}\boldsymbol{\beta}$). In this case, $\boldsymbol{\beta}$ represents the vector of $\beta$'s: the intercept associated with the first combination of groups, as well as the (effects) differences between this intercept and each other group. $\mathbf{X}$ is the model matrix.

MCMC sampling requires priors on all parameters. We will employ weakly informative priors. Specifying 'uninformative' priors is always a bit of a balancing act. If the priors are too vague (wide), the MCMC sampler can wander off into nonsensical regions of the likelihood rather than concentrate around areas of highest likelihood (desired when wanting the outcomes to be largely driven by the data). On the other hand, if the priors are too strong, they may have an influence on the parameters. In such a simple model, this balance is very forgiving - it is for more complex models that prior choice becomes more important.

For this simple model, we will go with zero-centered Gaussian (normal) priors with relatively large standard deviations (100) for both the intercept and the treatment effects, and a wide half-Cauchy (scale = 5) for the standard deviation.
$$
\begin{align}
y_{ij} &\sim{} N(\mu_{ij}, \sigma)\\
\mu_{ij} &= \beta_0 + \mathbf{X}\boldsymbol{\beta}\\[1em]
\beta_0 &\sim{} N(0,100)\\
\beta &\sim{} N(0,100)\\
\sigma &\sim{} Cauchy(0,5)\\
\end{align}
$$
Exploratory data analysis suggests that the intercept and effects could be drawn from similar distributions (with means in the 10's and variances in the 100's). Whilst we might therefore be tempted to provide different priors for the intercept compared to the effects, for a simple model such as this, it is unlikely to be necessary. However, for more complex models, where prior specification becomes more critical, separate priors would probably be necessary.
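To see how "wide" a half-Cauchy(0, 5) prior is, recall that if $X \sim \text{Cauchy}(0, s)$ then $|X|$ is half-Cauchy. So the $p$-quantile of the half-Cauchy is the $(1+p)/2$ quantile of the full Cauchy. The sketch below uses that identity with base R's `qcauchy()`; `qhalfcauchy` is a hypothetical helper name. The median is exactly the scale (5), close to the residual SD of 3 used in the simulation, yet the heavy tail still allows much larger values.

```r
# Quantiles of a half-Cauchy(0, scale) distribution via the full Cauchy
qhalfcauchy <- function(p, scale) qcauchy((1 + p) / 2, location = 0, scale = scale)

round(qhalfcauchy(c(0.5, 0.9, 0.95, 0.99), scale = 5), 1)
```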

library(MCMCpack)
data.mcmcpack <- MCMCregress(y ~ A * B, data = data)

Define the model

modelString = "
model {
  #Likelihood
  for (i in 1:n) {
    y[i] ~ dnorm(mean[i], tau)
    mean[i] <- inprod(beta[], X[i,])
  }
  #Priors
  for (i in 1:ngroups) {
    beta[i] ~ dnorm(0, 1.0E-6)
  }
  sigma ~ dunif(0, 100)
  tau <- 1 / (sigma * sigma)
}
"

Define the data

Arrange the data as a list (as required by BUGS). As input, JAGS will need to be supplied with:

- the response variable (y)

- the predictor model matrix (X)

- the total number of observed items (n)

- the number of predictor terms (ngroups)

X <- model.matrix(~A * B, data)
data.list <- with(data, list(y = y, X = X, n = nrow(data), ngroups = ncol(X)))

Define the MCMC chain parameters

Next we should define the behavioural parameters of the MCMC sampling chains. Include the following:

- the nodes (estimated parameters) to monitor (return samples for)

- the number of MCMC chains (3)

- the number of burnin steps (3000)

- the thinning factor (10)

- the number of MCMC iterations - determined by the number of samples to save, the rate of thinning and the number of chains

params <- c("beta", "sigma")
nChains = 3
burnInSteps = 3000
thinSteps = 10
numSavedSteps = 15000  #across all chains
nIter = ceiling(burnInSteps + (numSavedSteps * thinSteps)/nChains)
nIter

[1] 53000
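A little arithmetic confirms how these settings fit together. Each chain runs `nIter` iterations, discards the first `burnInSteps`, and keeps every `thinSteps`-th of the rest. That should give the 15,000 saved samples (`n.sims`) reported in the JAGS output below. Variable names mirror the settings above; `per_chain` is illustrative.

```r
burnInSteps <- 3000
thinSteps <- 10
nChains <- 3
numSavedSteps <- 15000   # across all chains

nIter <- ceiling(burnInSteps + (numSavedSteps * thinSteps) / nChains)
per_chain <- (nIter - burnInSteps) / thinSteps   # post-burn-in iterations / thinning
c(nIter = nIter, per_chain = per_chain, total = per_chain * nChains)
```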

Fit the model

Now run the JAGS code via the R2jags interface. Note that the first time jags is run after the R2jags package is loaded, it is often necessary to run any kind of randomization function just to initiate the .Random.seed variable.

library(R2jags)

data.r2jags <- jags(data = data.list, inits = NULL, parameters.to.save = params, model.file = textConnection(modelString), n.chains = nChains, n.iter = nIter, n.burnin = burnInSteps, n.thin = thinSteps)

Compiling model graph
   Resolving undeclared variables
   Allocating nodes
Graph information:
   Observed stochastic nodes: 60
   Unobserved stochastic nodes: 7
   Total graph size: 514
Initializing model

print(data.r2jags)

Inference for Bugs model at "5", fit using jags,

3 chains, each with 53000 iterations (first 3000 discarded), n.thin = 10

n.sims = 15000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta[1] 41.094 0.844 39.441 40.530 41.095 41.652 42.743 1.001 15000

beta[2] 14.647 1.194 12.274 13.867 14.652 15.442 16.982 1.001 15000

beta[3] 4.652 1.193 2.295 3.852 4.654 5.441 6.977 1.001 15000

beta[4] -0.746 1.190 -3.126 -1.515 -0.736 0.046 1.597 1.001 15000

beta[5] -15.712 1.695 -19.045 -16.834 -15.721 -14.601 -12.325 1.001 15000

beta[6] 9.336 1.673 6.039 8.232 9.335 10.434 12.585 1.001 15000

sigma 2.662 0.261 2.210 2.478 2.639 2.826 3.227 1.001 15000

deviance 286.111 4.072 280.390 283.115 285.406 288.308 295.927 1.001 15000

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 8.3 and DIC = 294.4

DIC is an estimate of expected predictive error (lower deviance is better).
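The DIC summary can be reproduced from the printed deviance row using the stated rule: $p_D = \mathrm{var}(\text{deviance})/2$ and $\text{DIC} = \overline{\text{deviance}} + p_D$. The resulting $p_D$ of about 8.3 is close to the number of estimated parameters (six coefficients plus $\sigma$). The sketch uses the rounded printed values, so agreement is only to the displayed precision.

```r
dev_mean <- 286.111   # posterior mean deviance (mu.vect)
dev_sd   <- 4.072     # posterior SD of the deviance (sd.vect)

pD  <- dev_sd^2 / 2
DIC <- dev_mean + pD
round(c(pD = pD, DIC = DIC), 1)   # pD = 8.3, DIC = 294.4
```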

data.mcmc.list <- as.mcmc(data.r2jags)

Whilst Gibbs sampling provides an elegantly simple MCMC sampling routine, very complex hierarchical models can take enormous numbers of iterations (often prohibitively large) to converge on a stable posterior distribution.

To address this, Andrew Gelman and collaborators have implemented a variation on Hamiltonian Monte Carlo (HMC: a sampler that selects subsequent samples in a way that reduces the correlation between samples, thereby speeding up convergence) called the No-U-Turn Sampler (NUTS). All of these developments are brought together into a tool called Stan (named after Stanislaw Ulam, a pioneer of Monte Carlo methods).
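To make the idea behind HMC concrete, here is a deliberately minimal sketch for a one-dimensional standard normal target. It is not how Stan works internally. Stan's NUTS adapts the step size and chooses the trajectory length automatically, whereas here the step size (`eps`) and number of leapfrog steps (`L`) are fixed by hand and happen to suit this particular target.

```r
# Target: standard normal, log density up to a constant, and its gradient
log_post      <- function(q) -0.5 * q^2
grad_log_post <- function(q) -q

hmc_step <- function(q, eps = 0.1, L = 16) {
  p0 <- rnorm(1)                     # fresh momentum
  q_new <- q
  # Leapfrog integration of the Hamiltonian dynamics
  p <- p0 + 0.5 * eps * grad_log_post(q_new)
  for (l in 1:L) {
    q_new <- q_new + eps * p
    if (l < L) p <- p + eps * grad_log_post(q_new)
  }
  p <- p + 0.5 * eps * grad_log_post(q_new)
  # Metropolis correction using H = -log_post + kinetic energy
  H_old <- -log_post(q) + 0.5 * p0^2
  H_new <- -log_post(q_new) + 0.5 * p^2
  if (log(runif(1)) < H_old - H_new) q_new else q
}

set.seed(42)
draws <- numeric(5000)
q <- 0
for (i in seq_along(draws)) draws[i] <- q <- hmc_step(q)

c(mean = mean(draws), sd = sd(draws),
  lag1_autocorr = acf(draws, plot = FALSE)$acf[2])
```

Because each proposal follows the gradient a long way across the distribution, successive draws here are nearly uncorrelated. A random-walk sampler taking small local steps would typically show much stronger lag-1 autocorrelation.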

By design (to appeal to the large community of BUGS users), Stan models are defined in a manner reminiscent of BUGS. Stan first converts these models into C++ code, which is then compiled to allow very rapid computation.

Consistent with the use of C++, the model must be accompanied by variable declarations for all inputs and parameters.

One important difference between Stan and JAGS is that whereas BUGS (and thus JAGS) parameterizes the normal distribution by its precision ($\tau = 1/\sigma^2$), Stan uses the standard deviation ($\sigma$).
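Converting between the two parameterizations is a one-liner. The sketch below (helper names hypothetical) shows that the JAGS coefficient prior `dnorm(0, 1.0E-6)` corresponds to a standard deviation of 1000. It also shows that Stan's `normal(0, 100)` corresponds to a JAGS precision of `1.0E-4`.

```r
# JAGS/BUGS: dnorm(mean, precision), where precision tau = 1 / sd^2
# Stan (and R's dnorm): normal(mean, sd)
prec_to_sd <- function(tau) 1 / sqrt(tau)
sd_to_prec <- function(sd) 1 / sd^2

prec_to_sd(1.0E-6)   # sd of the JAGS prior dnorm(0, 1.0E-6): 1000
sd_to_prec(100)      # JAGS precision equivalent to Stan's normal(0, 100): 1e-04
```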

Stan itself is a stand-alone command line application. However, conveniently, the authors of Stan have also developed an R interface to Stan called RStan (the rstan package), which can be used much like R2jags.

Model matrix formulation

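The minimum model in Stan required to fit the above factorial model follows. Note the following modifications from the model defined in JAGS:

- the normal distribution is defined by standard deviation rather than precision

- rather than using a uniform prior for sigma, I am using a half-Cauchy

- the coefficient priors are normal(0, 100) (a standard deviation of 100) rather than the much wider dnorm(0, 1.0E-6) (a standard deviation of 1000) used in JAGS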

We now translate the likelihood model into STAN code.

$$
\begin{align}
y_{ij}&\sim{}N(\mu_{ij}, \sigma)\\
\mu_{ij} &= \mathbf{X}\boldsymbol{\beta}\\
\beta_0&\sim{}N(0,100)\\
\beta&\sim{}N(0,100)\\
\sigma&\sim{}Cauchy(0,5)\\
\end{align}
$$

Define the model

modelString = "
data {
  int<lower=1> n;
  int<lower=1> nX;
  vector[n] y;
  matrix[n, nX] X;
}
parameters {
  vector[nX] beta;
  real<lower=0> sigma;
}
transformed parameters {
  vector[n] mu;
  mu = X * beta;
}
model {
  // Likelihood
  y ~ normal(mu, sigma);
  // Priors
  beta ~ normal(0, 100);
  sigma ~ cauchy(0, 5);
}
generated quantities {
  vector[n] log_lik;
  for (i in 1:n) {
    log_lik[i] = normal_lpdf(y[i] | mu[i], sigma);
  }
}
"

Define the data

Arrange the data as a named list. As input, Stan will need to be supplied with:

- the response variable (y)

- the predictor model matrix (X)

- the total number of observed items (n)

- the number of predictor terms (nX)

Xmat <- model.matrix(~A * B, data)
data.list <- with(data, list(y = y, X = Xmat, nX = ncol(Xmat), n = nrow(data)))

Fit the model

Now compile and run the Stan code via the rstan interface.

## load the rstan package
library(rstan)

data.rstan <- stan(data = data.list, model_code = modelString, chains = 3, iter = 2000, warmup = 500, thin = 3)


SAMPLING FOR MODEL '3d2414c9dcf4b5e12be870eadd2c894a' NOW (CHAIN 1).

Gradient evaluation took 1.7e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.17 seconds.

Adjust your expectations accordingly!

Iteration: 1 / 2000 [ 0%] (Warmup)

Iteration: 200 / 2000 [ 10%] (Warmup)

Iteration: 400 / 2000 [ 20%] (Warmup)

Iteration: 501 / 2000 [ 25%] (Sampling)

Iteration: 700 / 2000 [ 35%] (Sampling)

Iteration: 900 / 2000 [ 45%] (Sampling)

Iteration: 1100 / 2000 [ 55%] (Sampling)

Iteration: 1300 / 2000 [ 65%] (Sampling)

Iteration: 1500 / 2000 [ 75%] (Sampling)

Iteration: 1700 / 2000 [ 85%] (Sampling)

Iteration: 1900 / 2000 [ 95%] (Sampling)

Iteration: 2000 / 2000 [100%] (Sampling)

Elapsed Time: 0.051944 seconds (Warm-up)

0.117211 seconds (Sampling)

0.169155 seconds (Total)

SAMPLING FOR MODEL '3d2414c9dcf4b5e12be870eadd2c894a' NOW (CHAIN 2).

Gradient evaluation took 8e-06 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.08 seconds.

Adjust your expectations accordingly!

Iteration: 1 / 2000 [ 0%] (Warmup)

Iteration: 200 / 2000 [ 10%] (Warmup)

Iteration: 400 / 2000 [ 20%] (Warmup)

Iteration: 501 / 2000 [ 25%] (Sampling)

Iteration: 700 / 2000 [ 35%] (Sampling)

Iteration: 900 / 2000 [ 45%] (Sampling)

Iteration: 1100 / 2000 [ 55%] (Sampling)

Iteration: 1300 / 2000 [ 65%] (Sampling)

Iteration: 1500 / 2000 [ 75%] (Sampling)

Iteration: 1700 / 2000 [ 85%] (Sampling)

Iteration: 1900 / 2000 [ 95%] (Sampling)

Iteration: 2000 / 2000 [100%] (Sampling)

Elapsed Time: 0.050512 seconds (Warm-up)

0.117971 seconds (Sampling)

0.168483 seconds (Total)

SAMPLING FOR MODEL '3d2414c9dcf4b5e12be870eadd2c894a' NOW (CHAIN 3).

Gradient evaluation took 9e-06 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.09 seconds.

Adjust your expectations accordingly!

Iteration: 1 / 2000 [ 0%] (Warmup)

Iteration: 200 / 2000 [ 10%] (Warmup)

Iteration: 400 / 2000 [ 20%] (Warmup)

Iteration: 501 / 2000 [ 25%] (Sampling)

Iteration: 700 / 2000 [ 35%] (Sampling)

Iteration: 900 / 2000 [ 45%] (Sampling)

Iteration: 1100 / 2000 [ 55%] (Sampling)

Iteration: 1300 / 2000 [ 65%] (Sampling)

Iteration: 1500 / 2000 [ 75%] (Sampling)

Iteration: 1700 / 2000 [ 85%] (Sampling)

Iteration: 1900 / 2000 [ 95%] (Sampling)

Iteration: 2000 / 2000 [100%] (Sampling)

Elapsed Time: 0.053997 seconds (Warm-up)

0.118393 seconds (Sampling)

0.17239 seconds (Total)

print(data.rstan, par = c("beta", "sigma"))

Inference for Stan model: 3d2414c9dcf4b5e12be870eadd2c894a.

3 chains, each with iter=2000; warmup=500; thin=3;

post-warmup draws per chain=500, total post-warmup draws=1500.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

beta[1] 41.13 0.03 0.84 39.47 40.54 41.16 41.68 42.74 1070 1

beta[2] 14.60 0.04 1.17 12.36 13.80 14.62 15.36 16.94 1026 1

beta[3] 4.60 0.04 1.20 2.28 3.81 4.59 5.40 6.89 1134 1

beta[4] -0.79 0.04 1.17 -3.07 -1.55 -0.81 -0.03 1.65 1065 1

beta[5] -15.64 0.05 1.61 -18.74 -16.74 -15.64 -14.51 -12.48 1141 1

beta[6] 9.38 0.05 1.63 6.22 8.27 9.38 10.51 12.69 1080 1

sigma 2.66 0.01 0.26 2.21 2.47 2.63 2.83 3.24 1232 1

Samples were drawn using NUTS(diag_e) at Sat Nov 25 17:19:17 2017.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

The STAN team has put together pre-compiled modules (functions), in the rstanarm package, to make specifying and applying STAN models much simpler. Each function offers a consistent interface that is also reminiscent of major frequentist linear modelling routines in R.

Whilst it is not necessary to specify priors when using rstanarm functions (as defaults will be generated), there is no guarantee that the routines for determining these defaults will persist over time. Furthermore, it is always better to define your own priors, if for no other reason than that it forces you to think about what you are doing. Consistent with the pure STAN version, we will employ the following priors:

- weakly informative Gaussian prior for the intercept $\beta_0 \sim{} N(0, 100)$

- weakly informative Gaussian prior for the treatment effect $\beta_1 \sim{} N(0, 100)$

- half-Cauchy prior for the standard deviation $\sigma \sim{} Cauchy(0, 5)$

Note, I am using the refresh=0 option so as to suppress the larger regular output in the interest of keeping output to what is necessary for this tutorial. When running outside of a tutorial context, the regular verbose output is useful as it provides a way to gauge progress.

library(rstanarm)
library(broom)  # for tidyMCMC(); in current versions it is provided by broom.mixed instead
library(coda)

data.rstanarm = stan_glm(y ~ A * B, data = data, iter = 2000, warmup = 500, chains = 3, thin = 2, refresh = 0, prior_intercept = normal(0, 100), prior = normal(0, 100), prior_aux = cauchy(0, 5))

Gradient evaluation took 4.9e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.49 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.154465 seconds (Warm-up)

0.321999 seconds (Sampling)

0.476464 seconds (Total)

Gradient evaluation took 1.6e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.16 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.195459 seconds (Warm-up)

0.301423 seconds (Sampling)

0.496882 seconds (Total)

Gradient evaluation took 1.4e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.14 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.135384 seconds (Warm-up)

0.281972 seconds (Sampling)

0.417356 seconds (Total)

print(data.rstanarm)

stan_glm

family: gaussian [identity]

formula: y ~ A * B

------

Estimates:

Median MAD_SD

(Intercept) 41.1 0.9

Aa2 14.7 1.2

Bb2 4.7 1.2

Bb3 -0.7 1.2

Aa2:Bb2 -15.7 1.6

Aa2:Bb3 9.3 1.7

sigma 2.6 0.3

Sample avg. posterior predictive

distribution of y (X = xbar):

Median MAD_SD

mean_PPD 48.7 0.5

------

For info on the priors used see help('prior_summary.stanreg').

tidyMCMC(data.rstanarm, conf.int = TRUE, conf.method = "HPDinterval")

         term    estimate std.error   conf.low  conf.high
1 (Intercept)  41.0695427 0.8462585  39.407984  42.715661
2         Aa2  14.6591690 1.1981562  12.398261  17.112206
3         Bb2   4.6819518 1.1857841   2.323231   6.980180
4         Bb3  -0.7107484 1.1808212  -2.927747   1.642205
5     Aa2:Bb2 -15.7289876 1.6946307 -19.022967 -12.286452
6     Aa2:Bb3   9.2932738 1.7176328   6.132746  12.911395
7       sigma   2.6549943 0.2653598   2.169464   3.191681
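The `conf.method = "HPDinterval"` option requests highest posterior density intervals: the shortest interval containing the requested fraction of the draws. Below is a minimal sketch of that shortest-window idea, the same idea behind coda's `HPDinterval()`. The helper name `hpd` and the gamma-distributed stand-in draws are illustrative only.

```r
# Shortest interval spanning round(n * prob) steps of the sorted draws
hpd <- function(x, prob = 0.95) {
  x <- sort(x)
  n <- length(x)
  gap <- max(1, min(n - 1, round(n * prob)))
  starts <- 1:(n - gap)
  widths <- x[starts + gap] - x[starts]
  i <- which.min(widths)
  c(lower = x[i], upper = x[i + gap])
}

# For a skewed posterior the HPD interval shifts towards the mode
# compared with the equal-tailed (quantile) interval
set.seed(1)
skewed <- rgamma(10000, shape = 2, rate = 1)
rbind(HPD = hpd(skewed), quantile = quantile(skewed, c(0.025, 0.975)))
```

For roughly symmetric posteriors, such as the coefficients above, the HPD and quantile intervals are nearly identical.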

The brms package serves a similar goal to the rstanarm package - to provide a simple user interface to STAN. However, unlike the rstanarm implementation, brms simply converts the formula, data, priors and family into STAN model code and data before executing stan with those elements.

Whilst it is not necessary to specify priors when using brms functions (as defaults will be generated), there is no guarantee that the routines for determining these defaults will persist over time. Furthermore, it is always better to define your own priors, if for no other reason than that it forces you to think about what you are doing. Consistent with the pure STAN version, we will employ the following priors:

- weakly informative Gaussian prior for the intercept $\beta_0 \sim{} N(0, 100)$

- weakly informative Gaussian prior for the treatment effect $\beta_1 \sim{} N(0, 100)$

- half-Cauchy prior for the standard deviation $\sigma \sim{} Cauchy(0, 5)$

Note, I am using the refresh = 0 option so as to suppress the larger regular output in the interest of keeping output to what is necessary for this tutorial. When running outside of a tutorial context, the regular verbose output is useful as it provides a way to gauge progress.

library(brms)
library(broom)
library(coda)

data.brms = brm(y ~ A * B, data = data, iter = 2000, warmup = 500, chains = 3, thin = 2, refresh = 0, prior = c(prior(normal(0, 100), class = "Intercept"), prior(normal(0, 100), class = "b"), prior(cauchy(0, 5), class = "sigma")))

Gradient evaluation took 1.5e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.15 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.059325 seconds (Warm-up)

0.110061 seconds (Sampling)

0.169386 seconds (Total)

Gradient evaluation took 9e-06 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.09 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.053149 seconds (Warm-up)

0.109058 seconds (Sampling)

0.162207 seconds (Total)

Gradient evaluation took 9e-06 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.09 seconds.

Adjust your expectations accordingly!

Elapsed Time: 0.05343 seconds (Warm-up)

0.107953 seconds (Sampling)

0.161383 seconds (Total)

print(data.brms)

Family: gaussian(identity)

Formula: y ~ A * B

Data: data (Number of observations: 60)

Samples: 3 chains, each with iter = 2000; warmup = 500; thin = 2;

total post-warmup samples = 2250

ICs: LOO = NA; WAIC = NA; R2 = NA

Population-Level Effects:

Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

Intercept 41.12 0.85 39.44 42.92 1936 1

Aa2 14.63 1.20 12.27 16.87 1538 1

Bb2 4.62 1.20 2.29 7.01 1914 1

Bb3 -0.76 1.21 -3.13 1.58 2013 1

Aa2:Bb2 -15.68 1.71 -18.92 -12.25 1696 1

Aa2:Bb3 9.31 1.72 6.00 12.66 1713 1

Family Specific Parameters:

Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

sigma 2.64 0.26 2.2 3.21 1401 1

Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

is a crude measure of effective sample size, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).

tidyMCMC(data.brms, conf.int = TRUE, conf.method = "HPDinterval")

         term    estimate std.error   conf.low  conf.high
1 b_Intercept  41.1249801 0.8520689  39.282630  42.674204
2       b_Aa2  14.6333534 1.1996304  12.239047  16.843446
3       b_Bb2   4.6176257 1.2040485   2.334325   7.039437
4       b_Bb3  -0.7612484 1.2100417  -3.033821   1.662261
5   b_Aa2:Bb2 -15.6839628 1.7100995 -18.928721 -12.285494
6   b_Aa2:Bb3   9.3101691 1.7191171   5.774495  12.433714
7       sigma   2.6429857 0.2638466   2.158565   3.141588

MCMC diagnostics

In addition to the regular model diagnostic checks (such as residual plots), for Bayesian analyses, it is necessary to explore the characteristics of the MCMC chains and the sampler in general. Recall that the purpose of MCMC sampling is to replicate the posterior distribution of the model likelihood and priors by drawing a known number of samples from this posterior (thereby formulating a probability distribution). This is only reliable if the MCMC samples accurately reflect the posterior.

Unfortunately, since we only know the posterior in the most trivial of circumstances, it is necessary to rely on indirect measures of how accurately the MCMC samples are likely to reflect the posterior. I will briefly outline the most important diagnostics; however, please refer to Tutorial 4.3, Section 3.1: Markov Chain Monte Carlo sampling for a discussion of these diagnostics.

- Traceplots for each parameter illustrate the MCMC sample values after each successive iteration along the chain. Bad chain mixing (characterized by any sort of pattern) suggests that the MCMC sampling chains may not have completely traversed all features of the posterior distribution and that more iterations are required to ensure the distribution has been accurately represented.

- Autocorrelation plots for each parameter illustrate the degree of correlation between MCMC samples separated by different lags. For example, a lag of 0 represents the degree of correlation between each MCMC sample and itself (obviously this will be a correlation of 1). A lag of 1 represents the degree of correlation between each MCMC sample and the next sample along the chain, and so on. In order to generate reliable estimates of parameters (and of their uncertainty), the MCMC samples should be independent (uncorrelated). In the figures below, this would be violated in the top autocorrelation plot and met in the bottom autocorrelation plot.

- The Rhat statistic for each parameter compares the variance between chains to the variance within chains, and so provides a measure of sampling efficiency/effectiveness. Ideally, all values should be less than 1.05. If there are values of 1.05 or greater, it suggests that the sampler was not very efficient or effective. Not only does this mean that the sampler was potentially slower than it could have been; more importantly, it could indicate that the sampler spent time sampling in a region of the likelihood that is less informative. Such a situation can arise from either a misspecified model or overly vague priors that permit sampling in otherwise nonsensical parameter space.
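The two numeric diagnostics above can be sketched from first principles. `lag_autocorr()` uses the same estimator as `stats::acf()`. `rhat()` is the basic (non-split) Gelman-Rubin potential scale reduction factor; rstan and brms report a split-chain variant. Both helper names are hypothetical. The chains are simulated stand-ins: an AR(1) series to mimic poor mixing, and normal draws for well- and badly-mixed chains.

```r
# Lag-k autocorrelation of a single chain
lag_autocorr <- function(x, k) {
  n <- length(x)
  xc <- x - mean(x)
  sum(xc[1:(n - k)] * xc[(1 + k):n]) / sum(xc^2)
}

# Basic potential scale reduction factor; chains supplied as matrix columns
rhat <- function(chains) {
  n <- nrow(chains)
  W <- mean(apply(chains, 2, var))        # mean within-chain variance
  B <- n * var(colMeans(chains))          # between-chain variance
  var_plus <- (n - 1) / n * W + B / n     # pooled estimate of posterior variance
  sqrt(var_plus / W)
}

set.seed(2)
# A "sticky" AR(1) chain mimics poor mixing; thinning by 10 weakens the dependence
ar_chain <- as.numeric(arima.sim(list(ar = 0.9), n = 10000))
c(lag1 = lag_autocorr(ar_chain, 1),
  lag1_thinned = lag_autocorr(ar_chain[seq(1, 10000, by = 10)], 1))

# Three well-mixed chains vs. three chains where one has wandered elsewhere
good <- matrix(rnorm(3000), ncol = 3)
bad  <- cbind(rnorm(1000), rnorm(1000), rnorm(1000, mean = 2))
c(good = rhat(good), bad = rhat(bad))
```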

Prior to inspecting any summaries of the parameter estimates, it is prudent to inspect a range of chain convergence diagnostics.

- Trace plots

Trace plots show no evidence that the chains have not reasonably traversed the entire multidimensional parameter space.

library(MCMCpack)
plot(data.mcmcpack)

- Raftery diagnostic

The Raftery diagnostics estimate that we would require about 3,900 samples to reach the specified level of confidence in convergence. As we have 10,000 samples, we can be confident that convergence has occurred.
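The "Lower bound (Nmin)" column in the output below depends only on the requested quantile (q), accuracy (r) and probability (s). It is the number of independent draws that would suffice. The dependence factor is then the ratio of the estimated total (N) to this bound, so values near 1 indicate little autocorrelation. A small sketch follows; `raftery_nmin` is a hypothetical helper name.

```r
# Raftery-Lewis lower bound: independent draws needed to estimate the
# q-quantile to within +/- r with probability s
raftery_nmin <- function(q = 0.025, r = 0.005, s = 0.95) {
  phi <- qnorm(0.5 * (1 + s))
  ceiling(q * (1 - q) * phi^2 / r^2)
}

nmin <- raftery_nmin()
nmin            # 3746
3962 / nmin     # dependence factor I = N / Nmin for the intercept, about 1.06
```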

library(MCMCpack)
raftery.diag(data.mcmcpack)

Quantile (q) = 0.025, Accuracy (r) = +/- 0.005, Probability (s) = 0.95

| | Burn-in (M) | Total (N) | Lower bound (Nmin) | Dependence factor (I) |
|---|---|---|---|---|
| (Intercept) | 2 | 3962 | 3746 | 1.060 |
| Aa2 | 2 | 3620 | 3746 | 0.966 |
| Bb2 | 2 | 3650 | 3746 | 0.974 |
| Bb3 | 2 | 3771 | 3746 | 1.010 |
| Aa2:Bb2 | 2 | 3865 | 3746 | 1.030 |
| Aa2:Bb3 | 2 | 3741 | 3746 | 0.999 |
| sigma2 | 2 | 3962 | 3746 | 1.060 |

- Autocorrelation diagnostic

A lag of 1 appears to be largely sufficient to avoid autocorrelation.

library(MCMCpack)
autocorr.diag(data.mcmcpack)

| | (Intercept) | Aa2 | Bb2 | Bb3 | Aa2:Bb2 | Aa2:Bb3 | sigma2 |
|---|---|---|---|---|---|---|---|
| Lag 0 | 1.000000000 | 1.000000000 | 1.000000000 | 1.0000000000 | 1.000000000 | 1.000000000 | 1.000000000 |
| Lag 1 | -0.004894719 | -0.012641557 | -0.005109851 | 0.0087851394 | -0.013378761 | 0.003611532 | 0.108486310 |
| Lag 5 | 0.015615547 | 0.020010786 | -0.008051140 | 0.0007380127 | 0.008677204 | 0.011141740 | -0.003758313 |
| Lag 10 | 0.012051793 | 0.023302011 | -0.003096659 | -0.0042219123 | 0.011092359 | 0.011419096 | 0.024786573 |
| Lag 50 | -0.002205204 | -0.009273217 | 0.003096931 | -0.0124255527 | -0.019278730 | -0.009166804 | 0.005734623 |
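The lag-k autocorrelations that autocorr.diag() reports can be illustrated with base R's acf(). In this hypothetical sketch, a deliberately sticky AR(1) series stands in for a poorly mixing chain (the value of `phi` is an assumption chosen for illustration). The sketch reports the autocorrelation at the same lags as the table above and shows that keeping only every 50th draw (thinning) removes most of the lag-1 correlation.

```r
set.seed(2)
n <- 10000
phi <- 0.9  # hypothetical degree of stickiness between successive draws
# AR(1) series: x[t] = phi * x[t - 1] + e[t], mimicking a poorly mixing chain
chain <- as.numeric(stats::filter(rnorm(n), filter = phi, method = "recursive"))

# Autocorrelation at selected lags (lag 0 is always 1)
lag_autocorr <- function(x, lags) {
  ac <- acf(x, lag.max = max(lags), plot = FALSE)$acf
  setNames(ac[lags + 1], paste("Lag", lags))
}

ac_full <- lag_autocorr(chain, c(0, 1, 5, 10, 50))
# Thinning: keep every 50th draw only
thinned <- chain[seq(1, n, by = 50)]
ac_thin <- lag_autocorr(thinned, c(0, 1))
round(ac_full, 3)
round(ac_thin, 3)
```

The price of thinning is fewer retained draws (200 here rather than 10,000), which is why longer chains are usually run when thinning is needed.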

Again, prior to examining the summaries, we should have explored the convergence diagnostics.

library(coda)
data.mcmc = as.mcmc(data.r2jags)

- Trace plots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

plot(data.mcmc)

When there are many parameters, this can result in a very large number of trace plots. To focus on just certain parameters (such as the $\beta$s), select the matching columns before plotting:

data.mcmc = as.mcmc(data.r2jags)
preds <- grep("beta", colnames(data.mcmc[[1]]))
plot(data.mcmc[, preds])

- Raftery diagnostic

The Raftery diagnostics for each chain estimate that we would require no more than 5000 samples to reach the specified level of confidence in convergence. As we have 16,667 samples, we can be confident that convergence has occurred.

raftery.diag(data.mcmc)

For each chain: Quantile (q) = 0.025, Accuracy (r) = +/- 0.005, Probability (s) = 0.95

[[1]]

| | Burn-in (M) | Total (N) | Lower bound (Nmin) | Dependence factor (I) |
|---|---|---|---|---|
| beta[1] | 20 | 38660 | 3746 | 10.30 |
| beta[2] | 20 | 37410 | 3746 | 9.99 |
| beta[3] | 20 | 38030 | 3746 | 10.20 |
| beta[4] | 20 | 36800 | 3746 | 9.82 |
| beta[5] | 20 | 36800 | 3746 | 9.82 |
| beta[6] | 20 | 35610 | 3746 | 9.51 |
| deviance | 20 | 36810 | 3746 | 9.83 |
| sigma | 20 | 38030 | 3746 | 10.20 |

[[2]]

| | Burn-in (M) | Total (N) | Lower bound (Nmin) | Dependence factor (I) |
|---|---|---|---|---|
| beta[1] | 20 | 38660 | 3746 | 10.30 |
| beta[2] | 20 | 36800 | 3746 | 9.82 |
| beta[3] | 20 | 36800 | 3746 | 9.82 |
| beta[4] | 20 | 35610 | 3746 | 9.51 |
| beta[5] | 20 | 37410 | 3746 | 9.99 |
| beta[6] | 20 | 36800 | 3746 | 9.82 |
| deviance | 20 | 37410 | 3746 | 9.99 |
| sigma | 20 | 38030 | 3746 | 10.20 |

[[3]]

| | Burn-in (M) | Total (N) | Lower bound (Nmin) | Dependence factor (I) |
|---|---|---|---|---|
| beta[1] | 20 | 38660 | 3746 | 10.30 |
| beta[2] | 20 | 38660 | 3746 | 10.30 |
| beta[3] | 20 | 37410 | 3746 | 9.99 |
| beta[4] | 20 | 39300 | 3746 | 10.50 |
| beta[5] | 20 | 36800 | 3746 | 9.82 |
| beta[6] | 20 | 36200 | 3746 | 9.66 |
| deviance | 20 | 37410 | 3746 | 9.99 |
| sigma | 20 | 37410 | 3746 | 9.99 |

- Autocorrelation diagnostic

A lag of 10 appears to be sufficient to avoid autocorrelation (poor mixing).

autocorr.diag(data.mcmc)

| | beta[1] | beta[2] | beta[3] | beta[4] | beta[5] | beta[6] | deviance | sigma |
|---|---|---|---|---|---|---|---|---|
| Lag 0 | 1.000000000 | 1.000000000 | 1.000000000 | 1.000000000 | 1.000000000 | 1.0000000000 | 1.0000000000 | 1.0000000000 |
| Lag 10 | 0.022642183 | 0.007087573 | 0.015327392 | 0.016544619 | 0.009691909 | 0.0127083887 | 0.0004530568 | 0.0111103826 |
| Lag 50 | -0.005155744 | 0.006008383 | 0.001204183 | -0.003596475 | 0.015488010 | 0.0008986928 | 0.0117358545 | -0.0003064979 |
| Lag 100 | -0.010587005 | 0.014977295 | -0.006923069 | -0.008110816 | -0.002500236 | 0.0059617664 | 0.0011406222 | 0.0010915518 |
| Lag 500 | 0.002228922 | -0.001740023 | -0.004237329 | -0.002055156 | 0.006778323 | -0.0046455847 | 0.0169645325 | 0.0063924921 |

Again, prior to examining the summaries, we should have explored the convergence diagnostics. There are numerous ways of working with STAN model fits (for exploring diagnostics and summarization).

- extract the mcmc samples and convert them into an mcmc.list to leverage the various coda routines

- use the numerous routines that come with the rstan package

- use the routines that come with the bayesplot package

- explore the diagnostics interactively via shinystan

- via coda

- Traceplots

- Autocorrelation

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

library(coda)
s = as.array(data.rstan)
# keep only the beta parameters (this already excludes lp__)
wch = grep("beta", dimnames(s)$parameters)
s = s[, , wch]
# one mcmc object per chain (margin 2 of the iterations x chains x parameters array)
mcmc <- do.call(mcmc.list, plyr::alply(s, 2, as.mcmc))
plot(mcmc)


library(coda)
s = as.array(data.rstan)
wch = grep("beta", dimnames(s)$parameters)
s = s[, , wch]
mcmc <- do.call(mcmc.list, plyr::alply(s, 2, as.mcmc))
autocorr.diag(mcmc)

| | beta[1] | beta[2] | beta[3] | beta[4] | beta[5] |
|---|---|---|---|---|---|
| Lag 0 | 1.00000000 | 1.000000000 | 1.00000000 | 1.000000000 | 1.00000000 |
| Lag 1 | 0.14383604 | 0.176608647 | 0.12964311 | 0.126322833 | 0.13048872 |
| Lag 5 | -0.03522429 | 0.009474199 | -0.05235367 | -0.025111374 | -0.01157408 |
| Lag 10 | 0.01753957 | -0.021221896 | 0.00910290 | 0.004300764 | -0.02599045 |
| Lag 50 | 0.02127630 | 0.036706859 | 0.01003985 | -0.032431129 | 0.01426767 |

- via rstan

- Traceplots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

stan_trace(data.rstan)

- Raftery diagnostic

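Applied directly to the fitted object, raftery.diag() did not compute the diagnostic here: the message below indicates that the samples it received were fewer than the minimum of 3746 required for these values of q, r and s. To be informative, the diagnostic would need to be run on the samples after converting them to an mcmc.list (as in the coda examples).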

raftery.diag(data.rstan)

Quantile (q) = 0.025 Accuracy (r) = +/- 0.005 Probability (s) = 0.95 You need a sample size of at least 3746 with these values of q, r and s

- Autocorrelation diagnostic

A lag of 2 appears broadly sufficient to avoid autocorrelation (poor mixing).

stan_ac(data.rstan)

- Rhat values. These values are a measure of sampling efficiency/effectiveness. Ideally, all values should be less than 1.05. If there are values of 1.05 or greater, it suggests that the sampler was not very efficient or effective. Not only does this mean that the sampler was potentially slower than it could have been; more importantly, it could indicate that the sampler spent time sampling in a region of the likelihood that is less informative. Such a situation can arise from either a misspecified model or overly vague priors that permit sampling in otherwise nonsensical parameter space.

In this instance, all rhat values are well below 1.05 (a good thing).

stan_rhat(data.rstan)

- Another measure of sampling efficiency is Effective Sample Size (ess).

ess indicates the number of samples (or the proportion of samples) that the sampling algorithm deemed effective. The sampler rejects samples on the basis of certain criteria, and when it does so, the previous sample value is used; more generally, any autocorrelation between successive samples reduces the effective sample size. Hence, while the MCMC sampling chain may contain 1000 samples, if there are only 10 effective samples (1%), the estimated properties are not likely to be reliable.

In this instance, most of the parameters have reasonably high effective sample sizes, and thus there is likely to be a good range of values from which to estimate parameter properties.

stan_ess(data.rstan)
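The link between autocorrelation and effective sample size can be sketched as ESS = N / (1 + 2 * sum of lag-k autocorrelations). The hypothetical helper below truncates that sum at the first non-positive autocorrelation, a simplification of the estimators used by coda::effectiveSize() and rstan, so its numbers will not match those functions exactly. It compares independent draws with a sticky AR(1) series standing in for a poorly mixing chain.

```r
# Simplified effective sample size for a single chain:
# ESS = N / (1 + 2 * sum of the leading positive lag-k autocorrelations)
ess_basic <- function(x, max_lag = 1000) {
  n <- length(x)
  rho <- acf(x, lag.max = min(max_lag, n - 1), plot = FALSE)$acf[-1]  # drop lag 0
  first_nonpos <- which(rho <= 0)[1]
  if (!is.na(first_nonpos)) rho <- rho[seq_len(first_nonpos - 1)]    # truncate the sum
  n / (1 + 2 * sum(rho))
}

set.seed(3)
n <- 4000
independent <- rnorm(n)                                                 # uncorrelated draws
sticky <- as.numeric(stats::filter(rnorm(n), 0.9, method = "recursive"))  # autocorrelated draws

ess_indep <- ess_basic(independent)   # close to 4000
ess_sticky <- ess_basic(sticky)       # only a few hundred
c(independent = ess_indep, sticky = ess_sticky)
```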


- via bayesplot

- Trace plots and density plots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

library(bayesplot)
mcmc_trace(as.matrix(data.rstan), regex_pars = "beta|sigma")

library(bayesplot)
mcmc_combo(as.matrix(data.rstan), regex_pars = "beta|sigma")

- Density plots

Density plots suggest that the mean or median would be appropriate to describe the fixed posteriors and that the median is appropriate for the sigma posterior.

library(bayesplot)
mcmc_dens(as.matrix(data.rstan), regex_pars = "beta|sigma")
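Why the median suits sigma: posteriors of standard deviations are typically right-skewed, which pulls the mean above the median. The sketch below is hypothetical. It draws sigma from the scaled inverse-chi-square posterior that arises for normal data under the standard noninformative prior (with an assumed n = 5 and sample SD of 2) and compares it with a symmetric, normally distributed posterior like those of the fixed effects.

```r
set.seed(4)
n_draws <- 10000
n_obs <- 5    # hypothetical (small) number of observations
s <- 2        # hypothetical sample standard deviation

# sigma^2 | y ~ scaled inverse-chi-square(n_obs - 1, s^2) under p(mu, sigma^2) proportional to 1/sigma^2
sigma_draws <- s * sqrt((n_obs - 1) / rchisq(n_draws, df = n_obs - 1))
# a symmetric posterior, like those of the fixed effects
beta_draws <- rnorm(n_draws, mean = 41, sd = 1)

summ <- function(x) c(mean = mean(x), median = median(x))
rbind(sigma = summ(sigma_draws), beta = summ(beta_draws))
```

For the symmetric posterior the two summaries practically coincide, whereas for sigma the mean sits noticeably above the median.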


- via shinystan

library(shinystan)
launch_shinystan(data.rstan)

- It is worth exploring the influence of our priors.

Again, prior to examining the summaries, we should have explored the convergence diagnostics. There are numerous ways of working with STANARM model fits (for exploring diagnostics and summarization).

- extract the mcmc samples and convert them into an mcmc.list to leverage the various coda routines

- use the numerous routines that come with the rstan package

- use the routines that come with the bayesplot package

- explore the diagnostics interactively via shinystan

- via coda

- Traceplots

- Autocorrelation

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

library(coda)
s = as.array(data.rstanarm)
mcmc <- do.call(mcmc.list, plyr::alply(s[, , -(length(s[1, 1, ]))], 2, as.mcmc))
plot(mcmc)


library(coda)
s = as.array(data.rstanarm)
mcmc <- do.call(mcmc.list, plyr::alply(s[, , -(length(s[1, 1, ]))], 2, as.mcmc))
autocorr.diag(mcmc)

| | (Intercept) | Aa2 | Bb2 | Bb3 | Aa2:Bb2 | Aa2:Bb3 |
|---|---|---|---|---|---|---|
| Lag 0 | 1.000000000 | 1.0000000000 | 1.00000000 | 1.0000000000 | 1.000000000 | 1.000000000 |
| Lag 1 | 0.079250889 | 0.0525242478 | 0.02921679 | 0.0118875596 | 0.019013378 | 0.050538090 |
| Lag 5 | 0.016286755 | 0.0210690165 | -0.01755251 | -0.0006499424 | -0.001479584 | 0.019445419 |
| Lag 10 | -0.003125278 | -0.0058528012 | -0.01445239 | -0.0308228680 | -0.022582741 | -0.009611371 |
| Lag 50 | -0.001455135 | -0.0002293142 | 0.03901632 | -0.0366835504 | 0.048671623 | -0.001621902 |

- via rstan

- Traceplots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

stan_trace(data.rstanarm)

- Raftery diagnostic

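Applied directly to the fitted object, raftery.diag() did not compute the diagnostic here: the message below indicates that the samples it received were fewer than the minimum of 3746 required for these values of q, r and s. To be informative, the diagnostic would need to be run on the samples after converting them to an mcmc.list (as in the coda examples).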

raftery.diag(data.rstanarm)

Quantile (q) = 0.025 Accuracy (r) = +/- 0.005 Probability (s) = 0.95 You need a sample size of at least 3746 with these values of q, r and s

- Autocorrelation diagnostic

A lag of 2 appears broadly sufficient to avoid autocorrelation (poor mixing).

stan_ac(data.rstanarm)

- Rhat values. These values are a measure of sampling efficiency/effectiveness. Ideally, all values should be less than 1.05. If there are values of 1.05 or greater, it suggests that the sampler was not very efficient or effective. Not only does this mean that the sampler was potentially slower than it could have been; more importantly, it could indicate that the sampler spent time sampling in a region of the likelihood that is less informative. Such a situation can arise from either a misspecified model or overly vague priors that permit sampling in otherwise nonsensical parameter space.

In this instance, all rhat values are well below 1.05 (a good thing).

stan_rhat(data.rstanarm)

- Another measure of sampling efficiency is Effective Sample Size (ess).

ess indicates the number of samples (or the proportion of samples) that the sampling algorithm deemed effective. The sampler rejects samples on the basis of certain criteria, and when it does so, the previous sample value is used; more generally, any autocorrelation between successive samples reduces the effective sample size. Hence, while the MCMC sampling chain may contain 1000 samples, if there are only 10 effective samples (1%), the estimated properties are not likely to be reliable.

In this instance, most of the parameters have reasonably high effective sample sizes, and thus there is likely to be a good range of values from which to estimate parameter properties.

stan_ess(data.rstanarm)


- via bayesplot

- Trace plots and density plots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

library(bayesplot)
mcmc_trace(as.array(data.rstanarm), regex_pars = "Intercept|^A|^B|sigma")

mcmc_combo(as.array(data.rstanarm))

- Density plots

Density plots suggest that the mean or median would be appropriate to describe the fixed posteriors and that the median is appropriate for the sigma posterior.

mcmc_dens(as.array(data.rstanarm))


- via rstanarm

The rstanarm package provides additional posterior checks.

- Posterior vs Prior - this compares the posterior estimate for each parameter against the associated prior. If the spread of the priors is small relative to the posterior, then it is likely that the priors are too influential. On the other hand, overly wide priors can lead to computational issues.

library(rstanarm)
posterior_vs_prior(data.rstanarm, color_by = "vs", group_by = TRUE, facet_args = list(scales = "free_y"))
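The reasoning behind comparing prior and posterior spread can be made concrete with the conjugate normal model for a single mean with a known residual SD. The sketch computes a ratio sometimes called posterior contraction, 1 - Var(posterior) / Var(prior): values near 1 mean the data dominate, and values near 0 mean the prior dominates. The known-sigma model, the helper name and the numbers are assumptions for illustration only; posterior_vs_prior() itself works from MCMC draws rather than from this formula.

```r
# Conjugate normal model: y_i ~ N(mu, sigma^2) with sigma known, prior mu ~ N(m0, prior_sd^2)
posterior_contraction <- function(prior_sd, sigma, n) {
  prior_var <- prior_sd^2
  post_var <- 1 / (1 / prior_var + n / sigma^2)   # posterior precision = prior + data precision
  1 - post_var / prior_var
}

wide <- posterior_contraction(prior_sd = 100, sigma = 2, n = 10)   # vague prior
narrow <- posterior_contraction(prior_sd = 0.1, sigma = 2, n = 10) # very tight prior
c(wide = wide, narrow = narrow)
```

With the vague prior the posterior is almost entirely driven by the data (contraction close to 1), whereas the very tight prior is barely updated (contraction of about 0.02), which is the situation in which the priors would be considered too influential.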



- via shinystan

library(shinystan)
launch_shinystan(data.rstanarm)

Again, prior to examining the summaries, we should have explored the convergence diagnostics. Rather than duplicate this for both additive and multiplicative models, we will only explore the multiplicative model. There are numerous ways of working with STAN model fits (for exploring diagnostics and summarization).

- extract the mcmc samples and convert them into an mcmc.list to leverage the various coda routines

- use the numerous routines that come with the rstan package

- use the routines that come with the bayesplot package

- explore the diagnostics interactively via shinystan

- via coda

- Traceplots

- Autocorrelation

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

library(coda)
mcmc = as.mcmc(data.brms)
plot(mcmc)


library(coda)
mcmc = as.mcmc(data.brms)
autocorr.diag(mcmc)

Error in ts(x, start = start(x), end = end(x), deltat = thin(x)): invalid time series parameters specified

- via rstan

- Traceplots

Trace plots show no evidence that the chains have failed to adequately traverse the entire multidimensional parameter space.

stan_trace(data.brms$fit)

- Raftery diagnostic

Applied directly to the fitted object, raftery.diag() did not compute the diagnostic here: the message below indicates that the samples it received were fewer than the minimum of 3746 required for these values of q, r and s. To be informative, the diagnostic would need to be run on the samples after converting them to an mcmc.list.

raftery.diag(data.brms)

Quantile (q) = 0.025 Accuracy (r) = +/- 0.005 Probability (s) = 0.95 You need a sample size of at least 3746 with these values of q, r and s

- Autocorrelation diagnostic

A lag of 2 appears broadly sufficient to avoid autocorrelation (poor mixing).

stan_ac(data.brms$fit)

- Rhat values. These values are a measure of sampling efficiency/effectiveness. Ideally, all values should be less than 1.05. If there are values of 1.05 or greater, it suggests that the sampler was not very efficient or effective. Not only does this mean that the sampler was potentially slower than it could have been; more importantly, it could indicate that the sampler spent time sampling in a region of the likelihood that is less informative. Such a situation can arise from either a misspecified model or overly vague priors that permit sampling in otherwise nonsensical parameter space.

In this instance, all rhat values are well below 1.05 (a good thing).

stan_rhat(data.brms$fit)

- Another measure of sampling efficiency is Effective Sample Size (ess).

ess indicates the number of samples (or the proportion of samples) that the sampling algorithm deemed effective. The sampler rejects samples on the basis of certain criteria, and when it does so, the previous sample value is used; more generally, any autocorrelation between successive samples reduces the effective sample size. Hence, while the MCMC sampling chain may contain 1000 samples, if there are only 10 effective samples (1%), the estimated properties are not likely to be reliable.

In this instance, most of the parameters have reasonably high effective sample sizes, and thus there is likely to be a good range of values from which to estimate parameter properties.

stan_ess(data.brms$fit)


Model validation

Model validation involves exploring the model diagnostics and fit to ensure that the model is broadly appropriate for the data. As such, exploration of the residuals should be routine.

For more complex models (those that contain multiple effects), it is also advisable to plot the residuals against each of the individual predictors. For sampling designs that involve sample collection over space or time, it is also a good idea to explore whether there are any temporal or spatial patterns in the residuals.

There are numerous situations (e.g. when applying specific variance-covariance structures to a model) where raw residuals do not reflect the interior workings of the model. Typically, this is because they do not take into account the variance-covariance matrix or assume a very simple variance-covariance matrix. Since the purpose of exploring residuals is to evaluate the model, for these cases, it is arguably better to draw conclusions based on standardized (or studentized) residuals.

Unfortunately, the definitions of standardized and studentized residuals appear to vary, and the two terms get used interchangeably. I will adopt the following definitions:

| Residual type | Definition |
|---|---|
| Standardized residuals | the raw residuals divided by the true standard deviation of the residuals (which of course is rarely known). |
| Studentized residuals | the raw residuals divided by the standard deviation of the residuals. Note that externally studentized residuals are calculated by dividing the raw residuals by a unique standard deviation for each observation that is calculated from regressions having left each successive observation out. |
| Pearson residuals | the raw residuals divided by the standard deviation of the response variable. |
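The definitions above can be sketched with an ordinary least-squares fit to simulated two-factor data; the data, effect sizes and the use of lm() are assumptions made purely for illustration. Because the data are simulated, the true residual standard deviation (3) is known, which is what the standardized residuals require.

```r
set.seed(5)
# Hypothetical balanced 2 x 3 design with 10 replicates per cell
dat <- expand.grid(A = c("a1", "a2"), B = c("b1", "b2", "b3"), rep = 1:10)
true_sd <- 3
dat$y <- 40 + 15 * (dat$A == "a2") + rnorm(nrow(dat), sd = true_sd)

fit <- lm(y ~ A * B, data = dat)
raw <- residuals(fit)

standardized <- raw / true_sd     # divide by the true residual SD (known only in simulations)
studentized <- raw / sigma(fit)   # divide by the estimated residual SD
ext_student <- rstudent(fit)      # externally studentized: leave-one-out SD for each observation
pearson <- raw / sd(dat$y)        # divide by the SD of the response (definition used above)

head(cbind(raw, standardized, studentized, ext_student, pearson))
```

All four rescalings preserve the sign (and, apart from the externally studentized version, the relative size) of the raw residuals, which is why they tend to show the same patterns for simple models like this one.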

The mark of a good model is being able to predict well. In an ideal world, we would have sufficiently large sample size as to permit us to hold a fraction (such as 25%) back thereby allowing us to train the model on 75% of the data and then see how well the model can predict the withheld 25%. Unfortunately, such a luxury is still rare in ecology.

The next best option is to see how well the model can predict the observed data. Models tend to struggle most with the extremes of trends and have particular issues when the extremes approach logical boundaries (such as zero for count data and standard deviations). We can use the fitted model to generate random predicted observations and then explore some properties of these compared to the actual observed data.

Residuals are not computed directly within MCMCpack. However, we can calculate them manually from the posteriors.

mcmc = as.data.frame(data.mcmcpack)
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
head(mcmc)

| | (Intercept) | Aa2 | Bb2 | Bb3 | Aa2:Bb2 | Aa2:Bb3 | sigma2 |
|---|---|---|---|---|---|---|---|
| 1 | 40.42172 | 14.17136 | 3.941759 | -0.3299995 | -14.06168 | 10.470534 | 5.338679 |
| 2 | 41.37944 | 14.82348 | 4.396285 | -2.2414088 | -16.73676 | 9.835985 | 8.130920 |
| 3 | 40.90443 | 14.60643 | 3.996873 | -2.1078572 | -12.63888 | 9.953201 | 7.456621 |
| 4 | 41.13543 | 15.74346 | 5.006105 | -1.1591951 | -18.15799 | 9.737565 | 8.063221 |
| 5 | 41.09695 | 15.74244 | 4.816085 | 0.2531722 | -16.46468 | 7.425350 | 6.836169 |
| 6 | 40.77523 | 15.04709 | 5.929086 | 0.7056246 | -16.61962 | 8.402492 | 5.451654 |

wch = grepl("sigma2", colnames(mcmc)) == 0
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
ggplot() + geom_point(data = NULL, aes(y = resid, x = fit))
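For readers without a fitted model to hand, here is a self-contained sketch of the same calculation. As an assumption, the MCMC output is replaced by draws from a multivariate normal approximation to the posterior centred on the least-squares estimates (reasonable only with flat priors and a well-behaved likelihood); the simulated data and coefficient values are hypothetical. Residuals are then computed from the posterior median coefficients, as in the code above.

```r
set.seed(6)
# Hypothetical 2 x 3 factorial data generated from known coefficients
dat <- expand.grid(A = c("a1", "a2"), B = c("b1", "b2", "b3"), rep = 1:10)
Xmat <- model.matrix(~A * B, dat)
true_beta <- c(40, 15, 5, 0, -15, 10)
dat$y <- as.vector(Xmat %*% true_beta) + rnorm(nrow(dat), sd = 3)

# Stand-in for MCMC output: 2000 draws from N(beta_hat, vcov(beta_hat))
ols <- lm(y ~ A * B, data = dat)
R <- chol(vcov(ols))                     # upper-triangular, t(R) %*% R = vcov
z <- matrix(rnorm(ncol(Xmat) * 2000), nrow = ncol(Xmat))
draws <- t(coef(ols) + t(R) %*% z)       # 2000 x 6 matrix of coefficient draws

# Residuals from the posterior median coefficients
coefs <- apply(draws, 2, median)
fit <- as.vector(Xmat %*% coefs)
resid <- dat$y - fit
sresid <- resid / sd(resid)              # studentized, following the definition above
summary(sresid)
```

Note that `Xmat %*% coefs` gives the same fitted values as the `coefs %*% t(Xmat)` form used above; only the orientation of the result differs before `as.vector()`.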

Residuals against predictors

mcmc = as.data.frame(data.mcmcpack)
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
wch = grepl("sigma", colnames(mcmc)) == 0
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
newdata = newdata %>% cbind(fit, resid)
ggplot(newdata) + geom_point(aes(y = resid, x = A))

ggplot(newdata) + geom_point(aes(y = resid, x = B))

And now for studentized residuals

mcmc = as.data.frame(data.mcmcpack)
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
wch = grepl("sigma", colnames(mcmc)) == 0
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
sresid = resid/sd(resid)
ggplot() + geom_point(data = NULL, aes(y = sresid, x = fit))

Conclusions: for this simple model, the studentized residuals yield the same pattern as the raw residuals (or the Pearson residuals for that matter).

Let's see how well data simulated from the model reflect the raw data.

mcmc = as.matrix(data.mcmcpack)
#generate a model matrix
Xmat = model.matrix(~A*B, data)
## use the full set of posterior draws (not just the medians)
wch = grepl('sigma', colnames(mcmc)) == 0
coefs = mcmc[, wch]
fit = coefs %*% t(Xmat)
## draw samples from this model
yRep = sapply(1:nrow(mcmc), function(i) rnorm(nrow(data), fit[i,], sqrt(mcmc[i, 'sigma2'])))
newdata = data.frame(A=data$A, B=data$B, yRep) %>% gather(key=Sample, value=Value, -A, -B)
ggplot(newdata) + geom_violin(aes(y=Value, x=A, fill='Model'), alpha=0.5) + geom_violin(data=data, aes(y=y, x=A, fill='Obs'), alpha=0.5) + geom_point(data=data, aes(y=y, x=A), position=position_jitter(width=0.1, height=0), color='black')

ggplot(newdata) + geom_violin(aes(y=Value, x=B, fill='Model', group=B, color=A), alpha=0.5)+ geom_point(data=data, aes(y=y, x=B, group=B,color=A))

Conclusions: the predicted trends do encapsulate the actual data, suggesting that the model is a reasonable representation of the underlying processes. Note that these are prediction intervals rather than confidence intervals, as we are seeking intervals within which we can predict individual observations rather than means.
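The distinction between prediction intervals and intervals for means can be sketched in a self-contained way. The posterior draws below are a hypothetical stand-in: a normal approximation for the coefficients and a scaled inverse-chi-square approximation for sigma, both built from a least-squares fit to simulated data. The sketch simulates one replicated data set per draw, checks what fraction of observations fall inside their 95% prediction intervals, and compares that interval with the 95% credible interval for the corresponding cell mean.

```r
set.seed(7)
dat <- expand.grid(A = c("a1", "a2"), B = c("b1", "b2", "b3"), rep = 1:10)
Xmat <- model.matrix(~A * B, dat)
dat$y <- as.vector(Xmat %*% c(40, 15, 5, 0, -15, 10)) + rnorm(nrow(dat), sd = 3)

# Hypothetical stand-in for posterior draws of the coefficients and sigma
ols <- lm(y ~ A * B, data = dat)
S <- 2000
df_res <- df.residual(ols)
z <- matrix(rnorm(ncol(Xmat) * S), nrow = ncol(Xmat))
beta_draws <- t(coef(ols) + t(chol(vcov(ols))) %*% z)          # S x 6
sigma_draws <- sigma(ols) * sqrt(df_res / rchisq(S, df = df_res))

mu_draws <- beta_draws %*% t(Xmat)       # S x n matrix of expected values (cell means)
# One replicated data set per draw: row i gets noise with SD sigma_draws[i]
y_rep <- mu_draws + matrix(rnorm(S * nrow(dat)), nrow = S) * sigma_draws

pred_int <- apply(y_rep, 2, quantile, probs = c(0.025, 0.975))
coverage <- mean(dat$y >= pred_int[1, ] & dat$y <= pred_int[2, ])

# Width of the 95% interval for observation 1 (cell a1:b1): mean vs new observation
mean_width <- unname(diff(quantile(mu_draws[, 1], c(0.025, 0.975))))
pred_width <- unname(diff(pred_int[, 1]))
c(coverage = coverage, mean_width = mean_width, pred_width = pred_width)
```

Roughly 95% of the observations should fall inside their prediction intervals, and the prediction interval is several times wider than the interval for the cell mean because it also includes the observation-level variability.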

We can also explore the posteriors of each parameter.

library(bayesplot)
mcmc_intervals(as.matrix(data.mcmcpack), regex_pars = "Intercept|^A|^B|sigma")

mcmc_areas(as.matrix(data.mcmcpack), regex_pars = "Intercept|^A|^B|sigma")

Residuals are not computed directly within JAGS. However, we can calculate them manually from the posteriors.

mcmc = data.r2jags$BUGSoutput$sims.matrix
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
wch = grep("beta\\[", colnames(mcmc))
wch

[1] 1 2 3 4 5 6

head(mcmc)

| | beta[1] | beta[2] | beta[3] | beta[4] | beta[5] | beta[6] | deviance | sigma |
|---|---|---|---|---|---|---|---|---|
| [1,] | 40.48339 | 15.06364 | 5.935638 | -0.3093790 | -17.87132 | 8.043827 | 283.5346 | 2.538900 |
| [2,] | 41.63781 | 14.36993 | 1.931867 | -1.1518205 | -13.69143 | 9.739930 | 286.9971 | 2.768452 |
| [3,] | 40.61291 | 14.92807 | 4.636804 | -0.4964191 | -15.13200 | 10.374972 | 289.3339 | 3.337160 |
| [4,] | 42.04693 | 14.50413 | 5.536484 | -2.2035782 | -17.60762 | 8.681790 | 289.7733 | 2.488243 |
| [5,] | 42.38741 | 12.94789 | 4.119175 | -0.8898141 | -14.22881 | 11.716873 | 289.7752 | 2.617655 |
| [6,] | 40.14774 | 15.40453 | 4.608544 | 2.2212448 | -16.07778 | 6.200402 | 288.8282 | 2.851959 |

coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
ggplot() + geom_point(data = NULL, aes(y = resid, x = fit))

Residuals against predictors

mcmc = data.r2jags$BUGSoutput$sims.matrix
wch = grep("beta\\[", colnames(mcmc))
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
coefs = apply(mcmc[, wch], 2, median)
print(coefs)

beta[1] beta[2] beta[3] beta[4] beta[5] beta[6] 41.0945676 14.6518796 4.6535912 -0.7356942 -15.7214406 9.3350177

fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
newdata = newdata %>% cbind(fit, resid)
ggplot(newdata) + geom_point(aes(y = resid, x = A))

ggplot(newdata) + geom_point(aes(y = resid, x = B))

And now for studentized residuals

mcmc = data.r2jags$BUGSoutput$sims.matrix
wch = grep("beta\\[", colnames(mcmc))
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
sresid = resid/sd(resid)
ggplot() + geom_point(data = NULL, aes(y = sresid, x = fit))

Conclusions: for this simple model, the studentized residuals yield the same pattern as the raw residuals (or the Pearson residuals for that matter).

Let's see how well data simulated from the model reflect the raw data.

mcmc = data.r2jags$BUGSoutput$sims.matrix
wch = grep("beta\\[", colnames(mcmc))
#generate a model matrix
Xmat = model.matrix(~A*B, data)
## use the full set of posterior draws (not just the medians)
coefs = mcmc[, wch]
fit = coefs %*% t(Xmat)
## draw samples from this model
yRep = sapply(1:nrow(mcmc), function(i) rnorm(nrow(data), fit[i,], mcmc[i, 'sigma']))
newdata = data.frame(A=data$A, B=data$B, yRep) %>% gather(key=Sample, value=Value, -A, -B)
ggplot(newdata) + geom_violin(aes(y=Value, x=A, fill='Model'), alpha=0.5) + geom_violin(data=data, aes(y=y, x=A, fill='Obs'), alpha=0.5) + geom_point(data=data, aes(y=y, x=A), position=position_jitter(width=0.1, height=0), color='black')

ggplot(newdata) + geom_violin(aes(y=Value, x=B, fill='Model', group=B, color=A), alpha=0.5)+ geom_point(data=data, aes(y=y, x=B, group=B,color=A))

Conclusions: the predicted trends do encapsulate the actual data, suggesting that the model is a reasonable representation of the underlying processes. Note that these are prediction intervals rather than confidence intervals, as we are seeking intervals within which we can predict individual observations rather than means.

We can also explore the posteriors of each parameter.

library(bayesplot)
mcmc_intervals(data.r2jags$BUGSoutput$sims.matrix, regex_pars = "beta|sigma")

mcmc_areas(data.r2jags$BUGSoutput$sims.matrix, regex_pars = "beta|sigma")

Residuals are not computed directly within STAN. However, we can calculate them manually from the posteriors.

mcmc = as.matrix(data.rstan)
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
wch = grep("beta\\[", colnames(mcmc))
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
ggplot() + geom_point(data = NULL, aes(y = resid, x = fit))

Residuals against predictors

mcmc = as.matrix(data.rstan)
wch = grep("beta\\[", colnames(mcmc))
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
coefs = apply(mcmc[, wch], 2, median)
print(coefs)

beta[1] beta[2] beta[3] beta[4] beta[5] beta[6] 41.1578967 14.6211877 4.5876538 -0.8094151 -15.6448600 9.3816379

fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
newdata = newdata %>% cbind(fit, resid)
ggplot(newdata) + geom_point(aes(y = resid, x = A))

ggplot(newdata) + geom_point(aes(y = resid, x = B))

And now for studentized residuals

mcmc = as.matrix(data.rstan)
wch = grep("beta\\[", colnames(mcmc))
# generate a model matrix
newdata = data
Xmat = model.matrix(~A * B, newdata)
## get median parameter estimates
coefs = apply(mcmc[, wch], 2, median)
fit = as.vector(coefs %*% t(Xmat))
resid = data$y - fit
sresid = resid/sd(resid)
ggplot() + geom_point(data = NULL, aes(y = sresid, x = fit))

Conclusions: for this simple model, the studentized residuals yield the same pattern as the raw residuals (or the Pearson residuals for that matter).

Let's see how well data simulated from the model reflect the raw data.

mcmc = as.matrix(data.rstan)
wch = grep("beta\\[", colnames(mcmc))
#generate a model matrix
Xmat = model.matrix(~A*B, data)
## use the full set of posterior draws (not just the medians)
coefs = mcmc[, wch]
fit = coefs %*% t(Xmat)
## draw samples from this model
yRep = sapply(1:nrow(mcmc), function(i) rnorm(nrow(data), fit[i,], mcmc[i, 'sigma']))
newdata = data.frame(A=data$A, B=data$B, yRep) %>% gather(key=Sample, value=Value, -A, -B)
ggplot(newdata) + geom_violin(aes(y=Value, x=A, fill='Model'), alpha=0.5) + geom_violin(data=data, aes(y=y, x=A, fill='Obs'), alpha=0.5) + geom_point(data=data, aes(y=y, x=A), position=position_jitter(width=0.1, height=0), color='black')

ggplot(newdata) + geom_violin(aes(y=Value, x=B, fill='Model', group=B, color=A), alpha=0.5)+ geom_point(data=data, aes(y=y, x=B, group=B,color=A))

Conclusions: the predicted trends do encapsulate the actual data, suggesting that the model is a reasonable representation of the underlying processes. Note that these are prediction intervals rather than confidence intervals, as we are seeking intervals within which we can predict individual observations rather than means.

We can also explore the posteriors of each parameter.

library(bayesplot)
mcmc_intervals(as.matrix(data.rstan), regex_pars = "beta|sigma")

mcmc_areas(as.matrix(data.rstan), regex_pars = "beta|sigma")

RSTANARM provides resid() and fitted() methods, so residuals and fitted values can be extracted directly from the fitted model.

resid = resid(data.rstanarm)
fit = fitted(data.rstanarm)
ggplot() + geom_point(data = NULL, aes(y = resid, x = fit))

Residuals against predictors

resid = resid(data.rstanarm)
dat = data %>% mutate(resid = resid)
ggplot(dat) + geom_point(aes(y = resid, x = A))

ggplot(dat) + geom_point(aes(y = resid, x = B))

And now for studentized residuals

resid = resid(data.rstanarm)
sigma(data.rstanarm)

[1] 2.626127

sresid = resid/sigma(data.rstanarm)
fit = fitted(data.rstanarm)
ggplot() + geom_point(data = NULL, aes(y = sresid, x = fit))

Conclusions: for this simple model, the studentized residuals yield the same pattern as the raw residuals (or the Pearson residuals for that matter).

Let's see how well data simulated from the model reflect the raw data.

y_pred = posterior_predict(data.rstanarm)
newdata = data %>% cbind(t(y_pred)) %>% gather(key = "Rep", value = "Value", -A, -B, -y)
ggplot(newdata) + geom_violin(aes(y = Value, x = A, fill = "Model"), alpha = 0.5) + geom_violin(data = data, aes(y = y, x = A, fill = "Obs"), alpha = 0.5) + geom_point(data = data, aes(y = y, x = A), position = position_jitter(width = 0.1, height = 0), color = "black")

ggplot(newdata) + geom_violin(aes(y=Value, x=B, fill='Model', group=B, color=A), alpha=0.5)+ geom_point(data=data, aes(y=y, x=B, group=B,color=A))

Conclusions: the predicted trends do encapsulate the actual data, suggesting that the model is a reasonable representation of the underlying processes. Note that these are prediction intervals rather than confidence intervals, as we are seeking intervals within which we can predict individual observations rather than means.

We can also explore the posteriors of each parameter.

library(bayesplot)
mcmc_intervals(as.matrix(data.rstanarm), regex_pars = "Intercept|^A|^B|sigma")

mcmc_areas(as.matrix(data.rstanarm), regex_pars = "Intercept|^A|^B|sigma")

BRMS provides resid() and fitted() methods that return posterior summaries (including an Estimate column) for each observation, so residuals and fitted values can be extracted directly from the fitted model.

resid = resid(data.brms)[, "Estimate"]
fit = fitted(data.brms)[, "Estimate"]
ggplot() + geom_point(data = NULL, aes(y = resid, x = fit))

Residuals against predictors

resid = resid(data.brms)[, "Estimate"]
dat = data %>% mutate(resid = resid)
ggplot(dat) + geom_point(aes(y = resid, x = A))

ggplot(dat) + geom_point(aes(y = resid, x = B))

And now for studentized residuals

resid = resid(data.brms)[, "Estimate"]
sresid = resid(data.brms, type = "pearson")[, "Estimate"]
fit = fitted(data.brms)[, "Estimate"]
ggplot() + geom_point(data = NULL, aes(y = sresid, x = fit))

Conclusions: for this simple model, the Pearson (standardized) residuals yield the same pattern as the raw residuals.

Let's see how well data simulated from the model reflect the raw data.

```r
y_pred = posterior_predict(data.brms)
newdata = data %>% cbind(t(y_pred)) %>%
    gather(key = "Rep", value = "Value", -A, -B, -y)
ggplot(newdata) +
    geom_violin(aes(y = Value, x = A, fill = "Model"), alpha = 0.5) +
    geom_violin(data = data, aes(y = y, x = A, fill = "Obs"), alpha = 0.5) +
    geom_point(data = data, aes(y = y, x = A),
        position = position_jitter(width = 0.1, height = 0), color = "black")
```

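```r
# one violin per A:B combination (dodged within each level of B), outlined by A,
# with the observed values dodged to sit over the matching violin
ggplot(newdata) +
    geom_violin(aes(y = Value, x = B, fill = "Model", color = A,
        group = interaction(A, B)), alpha = 0.5) +
    geom_point(data = data, aes(y = y, x = B, color = A),
        position = position_dodge(width = 0.9))
```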

Conclusions: the predicted distributions encapsulate the observed data, suggesting that the model is a reasonable representation of the underlying processes. Note that these are prediction intervals rather than credible intervals for the means, since we are seeking intervals within which we can predict individual observations rather than means.

We can also explore the posteriors of each parameter.

```r
library(bayesplot)
mcmc_intervals(as.matrix(data.brms), regex_pars = "Intercept|b_|sigma")
mcmc_areas(as.matrix(data.brms), regex_pars = "Intercept|b_|sigma")
```

Parameter estimates (posterior summaries)

Although all parameters in a Bayesian analysis are treated as random variables described by probability distributions, it is rarely useful to present tables of all the samples from each distribution. Plots of the posterior distributions do have some use, but most workers prefer to present simple statistical summaries of the posteriors. Popular choices include the median (or mean) and 95% credible intervals.
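A base-R sketch of these summaries follows, using simulated draws as a stand-in for a real posterior. `hpd_interval()` is a hypothetical helper written here for illustration: it finds the shortest interval containing 95% of the draws, which is the idea behind the HPDinterval method (from the coda package) requested from tidyMCMC() below.

```r
# Shortest interval containing `prob` of the draws (an empirical HPD interval).
# hpd_interval() is a hypothetical helper, not part of any package.
hpd_interval <- function(draws, prob = 0.95) {
  sorted <- sort(draws)
  n <- length(sorted)
  k <- floor(prob * n)                      # number of gaps the interval spans
  widths <- sorted[(k + 1):n] - sorted[1:(n - k)]
  i <- which.min(widths)                    # start of the narrowest window
  c(lower = sorted[i], upper = sorted[i + k])
}

set.seed(3)
draws <- rnorm(10000, mean = 14.6, sd = 1.2)   # hypothetical posterior draws
summary_stats <- c(median = median(draws),
                   quantile(draws, c(0.025, 0.975)),   # equal-tailed interval
                   hpd_interval(draws))                # HPD interval
round(summary_stats, 3)
```

For a symmetric posterior like this one the equal-tailed and HPD intervals almost coincide; they diverge for skewed posteriors such as that of a variance.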

```r
library(coda)
mcmcpvalue <- function(samp) {
    ## elementary version that creates an empirical p-value for the
    ## hypothesis that the columns of samp have mean zero versus a general
    ## multivariate distribution with elliptical contours.

    ## differences from the mean standardized by the observed
    ## variance-covariance factor

    ## Note, I put in the bit for single terms
    if (length(dim(samp)) == 0) {
        std <- backsolve(chol(var(samp)), cbind(0, t(samp)) - mean(samp),
            transpose = TRUE)
        sqdist <- colSums(std * std)
        sum(sqdist[-1] > sqdist[1])/length(samp)
    } else {
        std <- backsolve(chol(var(samp)), cbind(0, t(samp)) - colMeans(samp),
            transpose = TRUE)
        sqdist <- colSums(std * std)
        sum(sqdist[-1] > sqdist[1])/nrow(samp)
    }
}
```

Matrix model (MCMCpack)

```r
summary(data.mcmcpack)
```

```
Iterations = 1001:11000
Thinning interval = 1
Number of chains = 1
Sample size per chain = 10000

1. Empirical mean and standard deviation for each variable,
   plus standard error of the mean:

               Mean     SD Naive SE Time-series SE
(Intercept)  41.103 0.8453 0.008453       0.008513
Aa2          14.645 1.1977 0.011977       0.011977
Bb2           4.638 1.1843 0.011843       0.011843
Bb3          -0.767 1.1920 0.011920       0.011920
Aa2:Bb2     -15.714 1.6826 0.016826       0.016923
Aa2:Bb3       9.350 1.6806 0.016806       0.016806
sigma2        7.019 1.4188 0.014188       0.015822

2. Quantiles for each variable:

               2.5%     25%      50%       75%   97.5%
(Intercept)  39.456  40.534  41.1032  41.66037  42.778
Aa2          12.270  13.844  14.6562  15.44678  16.996
Bb2           2.325   3.844   4.6501   5.42905   6.966
Bb3          -3.122  -1.547  -0.7767   0.03978   1.564
Aa2:Bb2     -19.008 -16.846 -15.7026 -14.57069 -12.477
Aa2:Bb3       6.017   8.231   9.3582  10.47091  12.658
sigma2        4.759   6.006   6.8646   7.83681  10.312
```

```r
# OR
library(broom)  # in later releases, tidyMCMC() moved to the broom.mixed package
tidyMCMC(data.mcmcpack, conf.int = TRUE, conf.method = "HPDinterval")
```

```
         term    estimate std.error   conf.low  conf.high
1 (Intercept)  41.1032161 0.8453382  39.473479  42.784450
2         Aa2  14.6449428 1.1976886  12.238706  16.934341
3         Bb2   4.6379857 1.1843355   2.219722   6.842731
4         Bb3  -0.7670406 1.1920203  -3.141803   1.531671
5     Aa2:Bb2 -15.7143139 1.6825773 -18.991196 -12.467581
6     Aa2:Bb3   9.3499949 1.6805981   6.073684  12.710434
7      sigma2   7.0191843 1.4188454   4.563271   9.892696
```

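- the intercept represents the mean of the first combination (Aa1:Bb1), which is 41.1032161
- Aa2:Bb1 is 14.6449428 units greater than Aa1:Bb1
- Aa1:Bb2 is 4.6379857 units greater than Aa1:Bb1
- Aa1:Bb3 is -0.7670406 units greater than Aa1:Bb1 (i.e. 0.7670406 units lower)
- Aa2:Bb2 is -15.7143139 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb2 and Aa1:Bb1 is -15.7143139 units greater than the difference between (Aa1:Bb2 + Aa2:Bb1) and (2*Aa1:Bb1)
- Aa2:Bb3 is 9.3499949 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb3 and Aa1:Bb1 is 9.3499949 units greater than the difference between (Aa1:Bb3 + Aa2:Bb1) and (2*Aa1:Bb1)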
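The cell means themselves can be reconstructed by multiplying a model matrix for the six A:B combinations by the coefficients. A minimal sketch using the posterior means listed above, assuming (as the coefficient names imply) factor levels a1, a2 and b1, b2, b3:

```r
# Posterior means of the treatment-contrast coefficients (MCMCpack table above)
beta <- c(41.1032161, 14.6449428, 4.6379857, -0.7670406, -15.7143139, 9.3499949)

# One row per A:B combination, with the factor levels implied by the coefficient names
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))
X <- model.matrix(~A * B, cells)   # columns: (Intercept), Aa2, Bb2, Bb3, Aa2:Bb2, Aa2:Bb3
cells$mean <- as.vector(X %*% beta)
cells
```

Applying the same matrix to every posterior draw, rather than to the posterior means, would give full posterior distributions for each cell mean.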

While workers attempt to become comfortable with a new statistical framework, it is only natural that they like to evaluate and comprehend new structures and output alongside more familiar concepts. One way to facilitate this is via Bayesian p-values that are somewhat analogous to the frequentist p-values for investigating the hypothesis that a parameter is equal to zero.
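For a single parameter, the logic of mcmcpvalue() reduces to asking what proportion of draws lie further from the posterior mean (in standard-deviation units) than zero does. The following sketch spells that out in base R; `univariate_pvalue()` is a hypothetical helper, and the draws are simulated to mimic a clearly non-zero effect and one that straddles zero.

```r
# Proportion of draws whose squared standardized distance from the posterior
# mean exceeds that of the null value (zero by default).
univariate_pvalue <- function(draws, null = 0) {
  z_draws <- (draws - mean(draws)) / sd(draws)
  z_null  <- (null - mean(draws)) / sd(draws)
  mean(z_draws^2 > z_null^2)
}

set.seed(4)
far  <- rnorm(10000, mean = 4.6, sd = 1.2)   # hypothetical: centred far from zero
near <- rnorm(10000, mean = -0.8, sd = 1.2)  # hypothetical: straddles zero
c(far = univariate_pvalue(far), near = univariate_pvalue(near))
```

For a single column this should agree with mcmcpvalue(), since chol(var(x)) is just the standard deviation of x.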

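```r
## empirical p-values for each model term (columns 2 to 6 of the draws)
mcmcpvalue(data.mcmcpack[, 2])    # Aa2
## [1] 0
mcmcpvalue(data.mcmcpack[, 3])    # Bb2
## [1] 4e-04
mcmcpvalue(data.mcmcpack[, 4])    # Bb3
## [1] 0.5129
mcmcpvalue(data.mcmcpack[, 5])    # Aa2:Bb2
## [1] 0
mcmcpvalue(data.mcmcpack[, 6])    # Aa2:Bb3
## [1] 0
mcmcpvalue(data.mcmcpack[, 5:6])  # both interaction terms jointly
## [1] 0
```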

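There is evidence of an interaction between A and B: for the interaction terms, individually and jointly, none of the 10000 draws lies further from the posterior mean than zero does (empirical p-values of 0, i.e. less than 1/10000).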
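The joint test on columns 5:6 extends the same idea to several parameters by standardizing with the posterior variance-covariance matrix, so the squared distances are Mahalanobis distances. A sketch using base R's mahalanobis(); the helper `joint_pvalue()` and the simulated, correlated draws are assumptions for illustration.

```r
# Joint (multivariate) empirical p-value: compare the squared Mahalanobis distance
# of each draw, and of the null vector, from the posterior mean.
joint_pvalue <- function(draws, null = rep(0, ncol(draws))) {
  centre  <- colMeans(draws)
  S       <- cov(draws)                     # posterior variance-covariance matrix
  d_draws <- mahalanobis(draws, centre, S)  # one squared distance per draw
  d_null  <- mahalanobis(null, centre, S)   # squared distance of the null vector
  mean(d_draws > d_null)
}

set.seed(5)
z1 <- rnorm(10000)
z2 <- rnorm(10000)
# Hypothetical correlated draws for two interaction terms
draws <- cbind(ab2 = -15.7 + 1.7 * z1,
               ab3 =   9.3 + 1.7 * (0.5 * z1 + sqrt(0.75) * z2))
joint_pvalue(draws)
```

This should be equivalent to the backsolve()/chol() formulation inside mcmcpvalue(), which computes the same squared distances.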

Matrix model (JAGS)

```r
print(data.r2jags)
```

```
Inference for Bugs model at "5", fit using jags,
 3 chains, each with 53000 iterations (first 3000 discarded), n.thin = 10
 n.sims = 15000 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
beta[1]   41.094   0.844  39.441  40.530  41.095  41.652  42.743 1.001 15000
beta[2]   14.647   1.194  12.274  13.867  14.652  15.442  16.982 1.001 15000
beta[3]    4.652   1.193   2.295   3.852   4.654   5.441   6.977 1.001 15000
beta[4]   -0.746   1.190  -3.126  -1.515  -0.736   0.046   1.597 1.001 15000
beta[5]  -15.712   1.695 -19.045 -16.834 -15.721 -14.601 -12.325 1.001 15000
beta[6]    9.336   1.673   6.039   8.232   9.335  10.434  12.585 1.001 15000
sigma      2.662   0.261   2.210   2.478   2.639   2.826   3.227 1.001 15000
deviance 286.111   4.072 280.390 283.115 285.406 288.308 295.927 1.001 15000

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 8.3 and DIC = 294.4
DIC is an estimate of expected predictive error (lower deviance is better).
```
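The DIC line can be reproduced by hand from the deviance summary, using the rule stated in the output (pD = var(deviance)/2, and DIC = mean deviance + pD). A worked sketch in R, with the mean and standard deviation of the deviance copied from the printed table:

```r
# Posterior mean and SD of the deviance, from the print(data.r2jags) output
mean_deviance <- 286.111
sd_deviance   <- 4.072

pD  <- sd_deviance^2 / 2          # pD = var(deviance) / 2
DIC <- mean_deviance + pD         # DIC = mean deviance + pD
round(c(pD = pD, DIC = DIC), 1)   # 8.3 and 294.4, matching the printed values
```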

```r
# OR
library(broom)  # in later releases, tidyMCMC() moved to the broom.mixed package
tidyMCMC(as.mcmc(data.r2jags), conf.int = TRUE, conf.method = "HPDinterval")
```

```
      term    estimate std.error   conf.low  conf.high
1  beta[1]  41.0939179 0.8444629  39.424828  42.720949
2  beta[2]  14.6468728 1.1936367  12.362757  17.040371
3  beta[3]   4.6516490 1.1925610   2.296748   6.978871
4  beta[4]  -0.7456183 1.1896540  -3.175750   1.543696
5  beta[5] -15.7117566 1.6948094 -19.140973 -12.442649
6  beta[6]   9.3364992 1.6732387   6.034525  12.572229
7 deviance 286.1113628 4.0724442 279.611182 294.244142
8    sigma   2.6621371 0.2610362   2.193585   3.199500
```

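- the intercept represents the mean of the first combination (Aa1:Bb1), which is 41.0939179
- Aa2:Bb1 is 14.6468728 units greater than Aa1:Bb1
- Aa1:Bb2 is 4.651649 units greater than Aa1:Bb1
- Aa1:Bb3 is -0.7456183 units greater than Aa1:Bb1 (i.e. 0.7456183 units lower)
- Aa2:Bb2 is -15.7117566 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb2 and Aa1:Bb1 is -15.7117566 units greater than the difference between (Aa1:Bb2 + Aa2:Bb1) and (2*Aa1:Bb1)
- Aa2:Bb3 is 9.3364992 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb3 and Aa1:Bb1 is 9.3364992 units greater than the difference between (Aa1:Bb3 + Aa2:Bb1) and (2*Aa1:Bb1)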
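Because of the interaction, the effect of A is not a single number: it depends on the level of B. A short sketch computing the effect of A (a2 minus a1) at each level of B from the JAGS posterior means above:

```r
# Posterior means of beta[1]..beta[6] from the JAGS fit (tidyMCMC table above)
beta <- c(41.0939179, 14.6468728, 4.6516490, -0.7456183, -15.7117566, 9.3364992)

# Effect of A at each level of B: the A main effect plus the relevant interaction
# term (the reference level b1 has no interaction term)
effect_A <- c(b1 = beta[2],
              b2 = beta[2] + beta[5],
              b3 = beta[2] + beta[6])
round(effect_A, 3)
```

The A effect is large at b1, nearly vanishes at b2 and is larger still at b3, which is exactly what the two interaction terms encode. Applying the same sums to each posterior draw would give credible intervals for these simple effects.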

While workers attempt to become comfortable with a new statistical framework, it is only natural that they like to evaluate and comprehend new structures and output alongside more familiar concepts. One way to facilitate this is via Bayesian p-values that are somewhat analogous to the frequentist p-values for investigating the hypothesis that a parameter is equal to zero.

```r
## empirical p-values for each model term
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, "beta[2]"])  # Aa2
## [1] 0
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, "beta[3]"])  # Bb2
## [1] 6.666667e-05
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, "beta[4]"])  # Bb3
## [1] 0.5181333
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, "beta[5]"])  # Aa2:Bb2
## [1] 0
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, "beta[6]"])  # Aa2:Bb3
## [1] 0
mcmcpvalue(data.r2jags$BUGSoutput$sims.matrix[, c("beta[5]", "beta[6]")])  # jointly
## [1] 0
```

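There is evidence of an interaction between A and B: for the interaction terms, individually and jointly, none of the 15000 draws lies further from the posterior mean than zero does (empirical p-values of 0, i.e. less than 1/15000).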

Matrix model (STAN)

```r
print(data.rstan, pars = c("beta", "sigma"))
```

```
Inference for Stan model: 3d2414c9dcf4b5e12be870eadd2c894a.
3 chains, each with iter=2000; warmup=500; thin=3;
post-warmup draws per chain=500, total post-warmup draws=1500.

          mean se_mean   sd   2.5%    25%    50%    75%  97.5% n_eff Rhat
beta[1]  41.13    0.03 0.84  39.47  40.54  41.16  41.68  42.74  1070    1
beta[2]  14.60    0.04 1.17  12.36  13.80  14.62  15.36  16.94  1026    1
beta[3]   4.60    0.04 1.20   2.28   3.81   4.59   5.40   6.89  1134    1
beta[4]  -0.79    0.04 1.17  -3.07  -1.55  -0.81  -0.03   1.65  1065    1
beta[5] -15.64    0.05 1.61 -18.74 -16.74 -15.64 -14.51 -12.48  1141    1
beta[6]   9.38    0.05 1.63   6.22   8.27   9.38  10.51  12.69  1080    1
sigma     2.66    0.01 0.26   2.21   2.47   2.63   2.83   3.24  1232    1

Samples were drawn using NUTS(diag_e) at Sat Nov 25 17:19:17 2017.
For each parameter, n_eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor on split chains (at
convergence, Rhat=1).
```
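The Rhat column compares the variance within and between (split) chains. A sketch of the classic split-chain calculation follows; `split_rhat()` is a hypothetical helper, rstan's reported value may be computed with a refined version of this formula, and the chains are simulated.

```r
# Classic split-chain potential scale reduction factor, written out for illustration.
split_rhat <- function(chains) {
  # chains: matrix with one column per chain (rows = post-warmup iterations)
  n <- floor(nrow(chains) / 2)
  halves <- cbind(chains[1:n, , drop = FALSE],                                # first halves
                  chains[(nrow(chains) - n + 1):nrow(chains), , drop = FALSE]) # second halves
  W <- mean(apply(halves, 2, var))          # mean within-chain variance
  B <- n * var(colMeans(halves))            # between-chain variance
  var_plus <- (n - 1) / n * W + B / n       # pooled estimate of posterior variance
  sqrt(var_plus / W)
}

set.seed(6)
mixed <- matrix(rnorm(500 * 3, mean = 41.1, sd = 0.84), ncol = 3)  # 3 well-mixed chains
stuck <- mixed + rep(c(0, 0, 3), each = 500)   # third chain offset: not converged
c(mixed = split_rhat(mixed), stuck = split_rhat(stuck))
```

Well-mixed chains give a value very close to 1, whereas a chain stuck in a different region inflates the between-chain variance and pushes Rhat well above 1.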

```r
# OR
library(broom)  # in later releases, tidyMCMC() moved to the broom.mixed package
tidyMCMC(data.rstan, conf.int = TRUE, conf.method = "HPDinterval",
    pars = c("beta", "sigma"))
```

```
     term   estimate std.error   conf.low  conf.high
1 beta[1]  41.128049 0.8374676  39.596978  42.796440
2 beta[2]  14.604800 1.1720223  12.294697  16.856289
3 beta[3]   4.601844 1.1980414   2.314024   6.918298
4 beta[4]  -0.790552 1.1684885  -3.102146   1.620022
5 beta[5] -15.639649 1.6141412 -18.956622 -12.847933
6 beta[6]   9.384906 1.6348109   6.033244  12.348575
7   sigma   2.661834 0.2635065   2.193934   3.195871
```

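- the intercept represents the mean of the first combination (Aa1:Bb1), which is 41.1280486
- Aa2:Bb1 is 14.6047995 units greater than Aa1:Bb1
- Aa1:Bb2 is 4.6018442 units greater than Aa1:Bb1
- Aa1:Bb3 is -0.790552 units greater than Aa1:Bb1 (i.e. 0.790552 units lower)
- Aa2:Bb2 is -15.6396486 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb2 and Aa1:Bb1 is -15.6396486 units greater than the difference between (Aa1:Bb2 + Aa2:Bb1) and (2*Aa1:Bb1)
- Aa2:Bb3 is 9.3849055 units greater than expected if the effects of A and B were additive; that is, the difference between Aa2:Bb3 and Aa1:Bb1 is 9.3849055 units greater than the difference between (Aa1:Bb3 + Aa2:Bb1) and (2*Aa1:Bb1)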

While workers attempt to become comfortable with a new statistical framework, it is only natural that they like to evaluate and comprehend new structures and output alongside more familiar concepts. One way to facilitate this is via Bayesian p-values that are somewhat analogous to the frequentist p-values for investigating the hypothesis that a parameter is equal to zero.

```r
## empirical p-values for each model term
mcmcpvalue(as.matrix(data.rstan)[, "beta[2]"])  # Aa2
## [1] 0
mcmcpvalue(as.matrix(data.rstan)[, "beta[3]"])  # Bb2
## [1] 0.0006666667
mcmcpvalue(as.matrix(data.rstan)[, "beta[4]"])  # Bb3
## [1] 0.486
mcmcpvalue(as.matrix(data.rstan)[, "beta[5]"])  # Aa2:Bb2
## [1] 0
mcmcpvalue(as.matrix(data.rstan)[, "beta[6]"])  # Aa2:Bb3
## [1] 0
mcmcpvalue(as.matrix(data.rstan)[, c("beta[5]", "beta[6]")])  # jointly
## [1] 0
```

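There is evidence of an interaction between A and B: for the interaction terms, individually and jointly, none of the 1500 draws lies further from the posterior mean than zero does (empirical p-values of 0, i.e. less than 1/1500).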

```r
library(loo)
(full = loo(extract_log_lik(data.rstan)))
```

```
Computed from 1500 by 60 log-likelihood matrix

         Estimate   SE
elpd_loo   -146.8  6.1
p_loo         6.7  1.5
looic       293.7 12.2

Pareto k diagnostic values:
                         Count  Pct
(-Inf, 0.5]   (good)     59    98.3%
 (0.5, 0.7]   (ok)        1     1.7%
   (0.7, 1]   (bad)       0     0.0%
   (1, Inf)   (very bad)  0     0.0%

All Pareto k estimates are ok (k < 0.7)
See help('pareto-k-diagnostic') for details.
```
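Two pieces of this output can be checked with simple arithmetic: looic is -2 times elpd_loo (so its SE is also doubled), and the Pareto k table tabulates the k values into fixed bins. A sketch, in which the k values are hypothetical rather than taken from the fit:

```r
# looic = -2 * elpd_loo, so the SE doubles as well (values from the output above)
elpd_loo <- -146.8
se_elpd  <- 6.1
looic    <- -2 * elpd_loo
se_looic <- 2 * se_elpd
c(looic = looic, se = se_looic)   # 293.6 and 12.2; the printed 293.7 presumably
                                  # reflects elpd_loo before rounding

# Tabulating Pareto k values into the diagnostic bins used in the printout
k_values <- c(0.12, -0.05, 0.31, 0.58, 0.44, 0.72, 1.30)   # hypothetical k values
k_table <- table(cut(k_values, breaks = c(-Inf, 0.5, 0.7, 1, Inf),
                     labels = c("good", "ok", "bad", "very bad")))
k_table
```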

```r
# now fit a reduced model without the interaction term (main effects only)
modelString = "
data {
  int<lower=1> n;
  int<lower=1> nX;
  vector [n] y;
  matrix [n,nX] X;
}
parameters {
  vector[nX] beta;
  real<lower=0> sigma;
}
transformed parameters {
  vector[n] mu;
  mu = X*beta;
}
model {
  // Likelihood
  y~normal(mu,sigma);
  // Priors
  beta ~ normal(0,1000);
  sigma~cauchy(0,5);
}
generated quantities {
  vector[n] log_lik;
  for (i in 1:n) {
    log_lik[i] = normal_lpdf(y[i] | mu[i], sigma);
  }
}
"
Xmat <- model.matrix(~A + B, data)
data.list <- with(data, list(y = y, X = Xmat, n = nrow(data), nX = ncol(Xmat)))
data.rstan.red <- stan(data = data.list, model_code = modelString,
    chains = 3, iter = 2000, warmup = 500, thin = 3)
```
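Once loo() has also been run on the reduced model, the two fits are usually compared via the difference in expected log predictive density (elpd) and its standard error, both derived from the pointwise elpd values. A sketch of that calculation with made-up pointwise values; we believe this matches the standard error reported by loo's model comparison functions, but treat it as an illustration rather than the package's code.

```r
# Hypothetical pointwise elpd values for the full (interaction) and reduced models
elpd_full <- c(-2.1, -2.5, -2.3, -2.8, -2.2, -2.6)
elpd_red  <- c(-2.4, -2.9, -2.2, -3.3, -2.5, -2.7)

diffs     <- elpd_red - elpd_full               # per-observation difference
elpd_diff <- sum(diffs)                         # negative: reduced model predicts worse
se_diff   <- sqrt(length(diffs) * var(diffs))   # SE of the summed difference
c(elpd_diff = elpd_diff, se_diff = se_diff)
```

A difference that is large relative to its standard error favours the model with the higher elpd.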
