Tutorial 10.5a - Logistic regression and proportional and percentage data

01 Aug 2018

Binary data

Scenario and Data

Logistic regression is a type of generalized linear model (GLM) that models a binary response against a linear predictor via a specific link function. The linear predictor is typically a linear combination of effects parameters (e.g. $\beta_0 + \beta_1x_1$). The role of the link function is to transform the expected values of the response y (which are on the scale of (0,1), as is the binomial distribution from which expectations are drawn) onto the scale of the linear predictor (which is $(-\infty,\infty)$). GLMs transform the expected values (via a link) whereas LMs transform the observed data. Thus, while GLMs operate on the scale of the original data and yet also on a scale appropriate for the residuals, LMs do neither.

There are many ways (transformations) to map values on the (0,1) scale onto the $(-\infty,\infty)$ scale; the three most common are:

- logit: $log\left(\frac{\pi}{1-\pi}\right)$ - log odds

- probit: $\phi^{-1}(\pi)$ where $\phi^{-1}$ is the inverse of the standard normal cumulative distribution function

- complementary log-log: $log(-log(1-\pi))$
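As a minimal sketch of these three transformations, the following uses base R functions (`qlogis()`, `qnorm()` and their inverses) on a handful of illustrative probabilities. The vector `p` and the object names are assumptions made purely for this example.

```r
# Illustrative probabilities strictly inside (0, 1)
p <- c(0.05, 0.25, 0.5, 0.75, 0.95)

# Forward transformations: (0, 1) -> (-Inf, Inf)
logit   <- qlogis(p)          # log(p / (1 - p))
probit  <- qnorm(p)           # inverse standard normal CDF
cloglog <- log(-log(1 - p))   # complementary log-log

# Inverse transformations: (-Inf, Inf) -> (0, 1)
p.logit   <- plogis(logit)
p.probit  <- pnorm(probit)
p.cloglog <- 1 - exp(-exp(cloglog))

round(cbind(p, logit, probit, cloglog), 3)
```

Note that the logit and probit are symmetric around $\pi = 0.5$ (both map it to 0), whereas the complementary log-log is not.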

Let's say we wanted to model the presence/absence of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. The data are generated as follows:

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log odds per unit change in x (slope) = 0.5. The magnitude of the slope is an indicator of how abruptly the log odds change. A very abrupt change would occur if there was very little variability in the trend. That is, a large slope would be indicative of a threshold effect. A lower slope indicates a gradual change in odds and thus a less obvious and precise switch between the likelihood of 1 vs 0.

- the inflection point (point where the slope of the line is greatest) = 10. The inflection point indicates the value of the predictor ($x$) where the probability of a 1 is 50% (log odds of zero), that is, where the response switches between 0 being more likely and 1 being more likely. This is also known as the LD50.

- the intercept (which has no real interpretation) is equal to the negative of the slope multiplied by the inflection point

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor. These expected values are then transformed onto the (0,1) scale by using the inverse logit function $\frac{e^{linear~predictor}}{1+e^{linear~predictor}}$

- finally, we generate $y$ values by using the expected $y$ values as probabilities when drawing random numbers from a binomial distribution. This step adds random noise to the expected $y$ values and returns only 1's and 0's.

set.seed(8)
# The number of samples
n.x <- 20
# Create x values that are uniformly distributed throughout the range of 1 to 20
x <- sort(runif(n = n.x, min = 1, max = 20))
# The slope is the rate of change in log odds for each unit change in x;
# the smaller the slope, the slower the change (more variability in data too)
slope = 0.5
# Inflection point is where the slope of the line is greatest; this is also the LD50 point
inflect <- 10
# Intercept (no interpretation)
intercept <- -1 * (slope * inflect)
# The linear predictor
linpred <- intercept + slope * x
# Predicted y values
y.pred <- exp(linpred)/(1 + exp(linpred))
# Add some noise and make binomial
# (n.y is not used later, but the call advances the random number stream)
n.y <- rbinom(n = n.x, 20, p = 0.9)
y <- rbinom(n = n.x, size = 1, prob = y.pred)
dat <- data.frame(y, x)
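As a quick sanity check on the data generation steps, the sketch below confirms that the hand-written inverse logit matches base R's `plogis()` and that the expected probability at the inflection point is exactly 0.5. The helper `inv.logit()` and the `*.check` objects are hypothetical names introduced only for this illustration.

```r
slope <- 0.5
inflect <- 10
intercept <- -1 * (slope * inflect)

# Hand-written inverse logit, matching the formula used to generate the data
inv.logit <- function(eta) exp(eta) / (1 + exp(eta))

# A few illustrative x values, including the inflection point
x.check <- c(1, 5, 10, 15, 20)
linpred.check <- intercept + slope * x.check

p.manual  <- inv.logit(linpred.check)
p.builtin <- plogis(linpred.check)   # base R (stats) equivalent
cbind(x = x.check, p.manual, p.builtin)
```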

With this sort of data, we are primarily interested in investigating whether there is a relationship between the binary response variable and the linear predictor (linear combination of one or more continuous or categorical predictors).

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of a binary response - a binomial is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- The relationship between the linear predictor (right hand side of the regression formula) and the link-transformed expected response should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

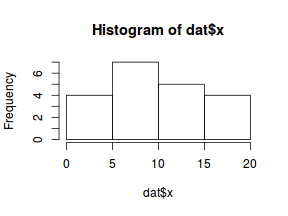

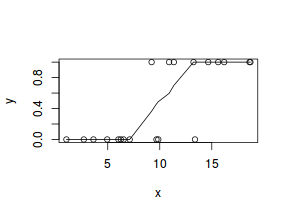

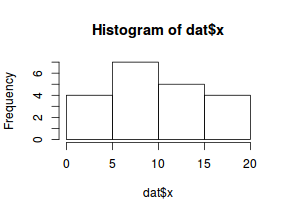

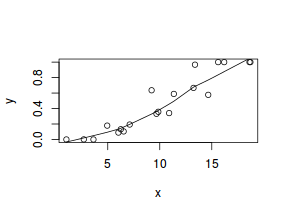

So let's explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$)

hist(dat$x)

# now for the scatterplot
plot(y ~ x, dat)
with(dat, lines(lowess(y ~ x)))

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a standard sigmoidal curve and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables
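Both remedies can be sketched with `glm()`. The data below are hypothetical (simulated with a hump-shaped trend on the logit scale) and the object names are assumptions for this illustration only; they are not part of the worked example.

```r
set.seed(1)
# Hypothetical data with a hump-shaped relationship on the logit scale
x.nl <- sort(runif(200, min = 1, max = 20))
eta.nl <- -4 + 1.2 * x.nl - 0.06 * x.nl^2
dat.nl <- data.frame(x = x.nl, y = rbinom(200, size = 1, prob = plogis(eta.nl)))

# Baseline: straight line on the link scale
mod.lin <- glm(y ~ x, data = dat.nl, family = binomial)
# Option 1: a second-order polynomial in the linear predictor
mod.poly <- glm(y ~ poly(x, 2), data = dat.nl, family = binomial)
# Option 2: transform the scale of the predictor
mod.log <- glm(y ~ log(x), data = dat.nl, family = binomial)

AIC(mod.lin, mod.poly, mod.log)
```

Which remedy is preferable depends on the data; comparing AIC values and re-examining the residuals are reasonable ways to choose between them.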

Model fitting or statistical analysis

There are a few different functions and packages that we could use to fit a generalized linear model (or in this case a logistic regression). Three of the most popular are:

- glm() from the stats package

- glmmTMB() from the glmmTMB package

- gamlss() from the gamlss package

We perform the logistic regression using the glm() function. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function).

For example:

- family="binomial" or equivalently family=binomial(link="logit")

- family=binomial(link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes (see below).
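To illustrate the `weights` argument, here is a minimal sketch with hypothetical grouped data (the `grp` data frame and its values are invented for this example). Supplying proportions together with the number of trials as weights should give the same estimates as the two-column (successes, failures) response.

```r
# Hypothetical grouped data: successes out of unequal numbers of trials
grp <- data.frame(
    x = c(2, 6, 10, 14, 18),
    total = c(10, 25, 15, 30, 20),
    success = c(1, 5, 8, 24, 19)
)
grp$prop <- grp$success / grp$total

# Proportion response, with the number of trials supplied as weights
grp.glmW <- glm(prop ~ x, data = grp, family = binomial, weights = total)
# Equivalent two-column (successes, failures) response
grp.glmC <- glm(cbind(success, total - success) ~ x, data = grp, family = binomial)

rbind(weights = coef(grp.glmW), cbind = coef(grp.glmC))
```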

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log\left(\frac{\pi_i}{1-\pi_i}\right)=\beta_0+\beta_1x_i$$

dat.glmL <- glm(y ~ x, data = dat, family = "binomial")

- probit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} \phi^{-1}\left(\pi_i\right)=\beta_0+\beta_1x_i$$

dat.glmP <- glm(y ~ x, data = dat, family = binomial(link = "probit"))

- complementary log-log

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log(-log(1-\pi_i))=\beta_0+\beta_1x_i$$

dat.glmC <- glm(y ~ x, data = dat, family = binomial(link = "cloglog"))

We perform the logistic regression using the glmmTMB() function. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function).

For example:

- family="binomial" or equivalently family=binomial(link="logit")

- family=binomial(link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes (see below).

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log\left(\frac{\pi_i}{1-\pi_i}\right)=\beta_0+\beta_1x_i$$

library(glmmTMB)
dat.glmmTMBL <- glmmTMB(y ~ x, data = dat, family = "binomial")

- probit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} \phi^{-1}\left(\pi_i\right)=\beta_0+\beta_1x_i$$

dat.glmmTMBP <- glmmTMB(y ~ x, data = dat, family = binomial(link = "probit"))

- complementary log-log

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log(-log(1-\pi_i))=\beta_0+\beta_1x_i$$

dat.glmmTMBC <- glmmTMB(y ~ x, data = dat, family = binomial(link = "cloglog"))

We perform the logistic regression using the gamlss() function. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). This is a different specification from glm() and supports a much wider variety of families, including mixtures.

For example:

- family=BI() or equivalently family=BI(mu.link="logit")

- family=BI(mu.link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes (see below).

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log\left(\frac{\pi_i}{1-\pi_i}\right)=\beta_0+\beta_1x_i$$

library(gamlss)
dat.gamlssL <- gamlss(y ~ x, data = dat, family = BI())

GAMLSS-RS iteration 1: Global Deviance = 11.6514
GAMLSS-RS iteration 2: Global Deviance = 11.6514

- probit

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} \phi^{-1}\left(\pi_i\right)=\beta_0+\beta_1x_i$$

dat.gamlssP <- gamlss(y ~ x, data = dat, family = BI(mu.link = "probit"))

GAMLSS-RS iteration 1: Global Deviance = 11.4522
GAMLSS-RS iteration 2: Global Deviance = 11.4522

- complementary log-log

$$y_i \sim Bin(1, \pi_i), \hspace{1cm} log(-log(1-\pi_i))=\beta_0+\beta_1x_i$$

dat.gamlssC <- gamlss(y ~ x, data = dat, family = BI(mu.link = "cloglog"))

GAMLSS-RS iteration 1: Global Deviance = 12.1082
GAMLSS-RS iteration 2: Global Deviance = 12.1082

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data.

| Extractor | Description |
|---|---|
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| deviance() | Extracts the deviance from the model |
| AIC() | Extracts the Akaike's Information Criterion from the model |
| extractAIC() | Extracts the generalized Akaike's Information Criterion from the model |
| summary() | Summarizes the important output and characteristics of the model |
| anova() | Computes an analysis of deviance or log-likelihood ratio test (LRT) from the model |
| plot() | Generates a series of diagnostic plots from the model |
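A quick way to see which scale `fitted()` and `predict()` work on is to compare them directly. The sketch below uses a small hypothetical data set (`ex.dat`) and model (`ex.glm`), both simulated only for this illustration.

```r
set.seed(8)
# Hypothetical binary data for illustration
ex.dat <- data.frame(x = runif(100, min = 1, max = 20))
ex.dat$y <- rbinom(100, size = 1, prob = plogis(-5 + 0.5 * ex.dat$x))
ex.glm <- glm(y ~ x, data = ex.dat, family = binomial)

eta   <- predict(ex.glm, type = "link")       # linear predictor (logit) scale
p.hat <- predict(ex.glm, type = "response")   # probability scale

# fitted() returns values on the response (probability) scale
all.equal(fitted(ex.glm), p.hat)
# back-transforming the link-scale predictions gives the same probabilities
all.equal(plogis(eta), p.hat)
```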

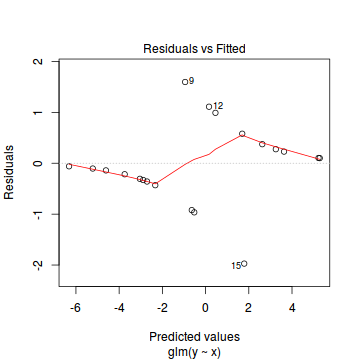

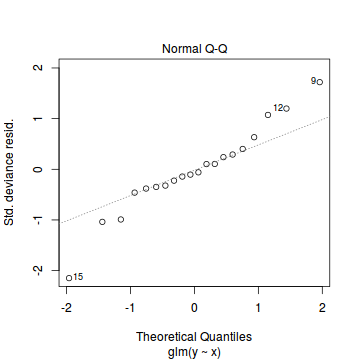

Let's explore the diagnostics - particularly the residuals

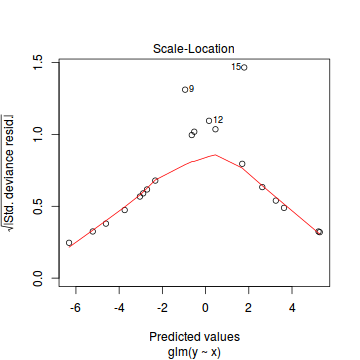

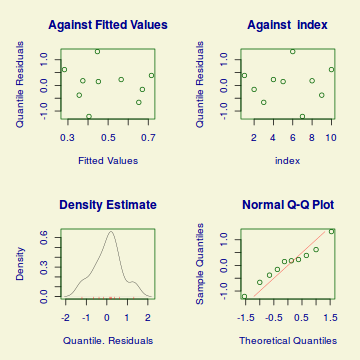

plot(dat.glmL)

Unfortunately, unlike with linear models (Gaussian family), the expected distribution of the data (and thus the residuals) varies over the range of fitted values in numerous (often competing) ways. This makes it very difficult to diagnose misspecified generalized linear models (and to attribute causes) from standard residual plots. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, over-dispersion, zero-inflation).

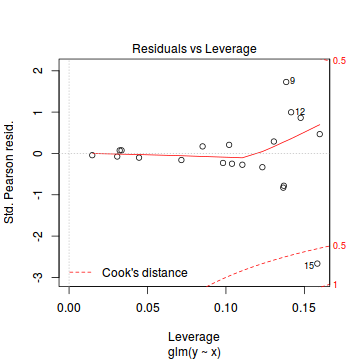

An alternative approach is to use simulated data from the fitted model to calculate an empirical cumulative distribution function from which residuals are generated as the values corresponding to the observed data along that function. The (rather Bayesian) rationale is that if the model is correctly specified, then the observed data can be considered as a random draw from the fitted model. If this is the case, then residuals calculated from empirical cumulative distribution functions based on data simulated from the fitted model should be totally uniform (flat) across the range of the linear predictor (fitted values) regardless of the model (Binomial, Poisson, linear, quadratic, hierarchical or otherwise). This uniformity can be explored by examining qq-plots (in which the trend should match a straight line) and plots of residuals against the fitted values and each individual predictor (in which the noise should be uniformly scattered, around 0.5 on the 0 to 1 residual scale, across the range of x-values).

To illustrate this, let's generate 10 simulated data sets from our fitted model. This will generate a matrix with 10 columns and as many rows as there were in the original data. Think of it as 10 attempts to simulate the original data from the model.

dat.sim <- simulate(dat.glmL, nsim = 10)
dat.sim

   sim_1 sim_2 sim_3 sim_4 sim_5 sim_6 sim_7 sim_8 sim_9 sim_10
1      0     0     0     0     0     0     0     0     0      0
2      0     0     0     0     0     0     0     0     0      0
3      0     0     0     0     0     0     0     0     0      0
4      0     0     0     0     0     0     0     0     0      0
5      0     0     0     0     0     0     0     0     0      0
6      0     0     1     0     0     0     1     0     0      0
7      0     0     0     0     0     0     0     0     0      0
8      0     0     0     0     0     1     0     0     0      0
9      1     1     0     0     0     1     0     1     0      0
10     0     1     1     1     0     1     0     1     0      0
11     1     1     0     1     0     0     1     0     0      1
12     1     1     1     0     0     0     1     0     0      0
13     0     1     0     0     1     1     0     0     1      1
14     0     1     1     1     1     0     1     1     1      1
15     1     1     0     1     1     1     0     1     1      1
16     1     0     1     1     1     1     1     1     1      1
17     1     1     1     1     1     1     1     1     1      0
18     1     1     1     1     1     1     1     1     1      1
19     1     1     1     1     1     1     1     1     1      1
20     1     1     1     1     1     1     1     1     1      1

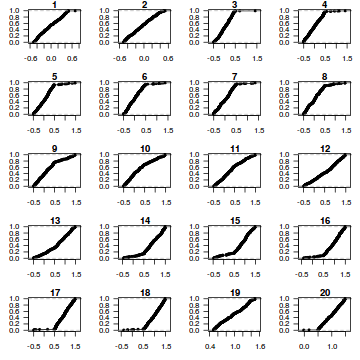

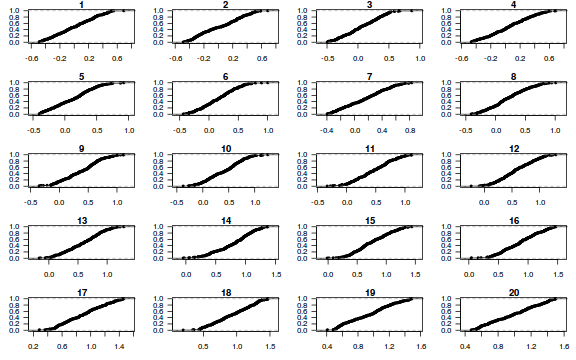

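dat.sim <- simulate(dat.glmL, nsim = 250)
par(mfrow = c(5, 4), mar = c(3, 3, 1, 1))
resid <- NULL
for (i in 1:nrow(dat.sim)) {
    # ECDF of the jittered simulated values for observation i
    e = ecdf(data.matrix(dat.sim[i, ] + runif(250, -0.5, 0.5)))
    plot(e, main = i, las = 1)
    # evaluate the ECDF at the jittered observation (only the first of the 250 draws is used)
    resid[i] <- e(dat$y[i] + runif(250, -0.5, 0.5))[1]
}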

resid

 [1] 0.836 0.296 0.760 0.236 0.872 0.232 0.040 0.240 0.756 0.356 0.132 0.864 0.972 0.528 0.056 0.248
[17] 0.264 0.076 0.800 0.640

plot(resid ~ fitted(dat.glmL))

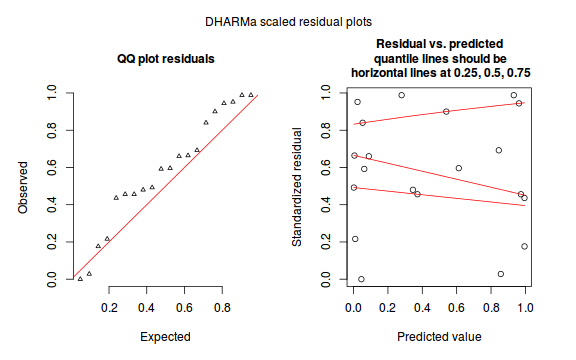

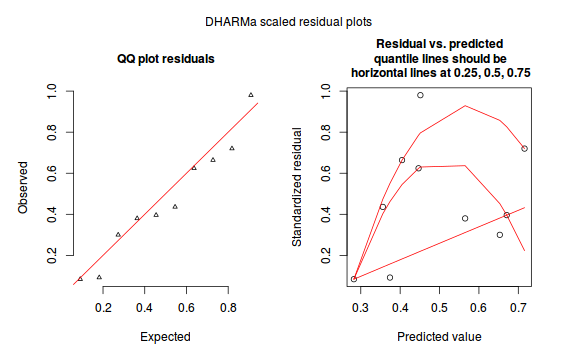

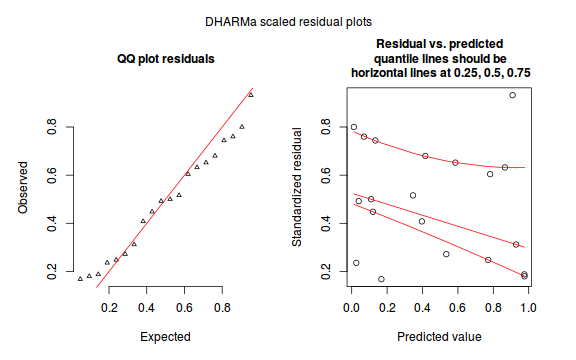

The DHARMa package provides a number of convenient routines to explore standardized residuals simulated from fitted models based on the concepts outlined above. Along with generating simulated residuals, simple qq plots and residual plots are available. By default, the residual plots include quantile regression lines (0.25, 0.5 and 0.75), each of which should be straight and flat.

- in overdispersed models, the qq trend will deviate substantially from a straight line

- non-linear models will display trends in the residuals

library(DHARMa)

dat.sim <- simulateResiduals(dat.glmL)
dat.sim

Object of Class DHARMa with simulated residuals based on 250 simulations with refit = FALSE . See ?DHARMa::simulateResiduals for help.

Scaled residual values: 0.492 0.664 0.216 0.952 0 0.84 0.592 0.66 0.988 0.48 0.456 0.9 0.596 0.692 0.028 0.988 0.944 0.456 0.436 0.176 ...

plotSimulatedResiduals(dat.sim)

Conclusions: there are no obvious patterns in the qq-plot or residuals, or at least there are no obvious trends remaining that would be indicative of overdispersion or non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmL, type = "pearson")^2)
1 - pchisq(dat.resid, dat.glmL$df.resid)

[1] 0.8571451

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

1 - pchisq(dat.glmL$deviance, dat.glmL$df.resid)

[1] 0.8647024

- The DHARMa package also has a routine for running a Kolmogorov-Smirnov test to explore overall uniformity of the residuals as a goodness-of-fit test on the scaled residuals.

testUniformity(dat.sim)

One-sample Kolmogorov-Smirnov test

data: simulationOutput$scaledResiduals
D = 0.236, p-value = 0.2153
alternative hypothesis: two-sided

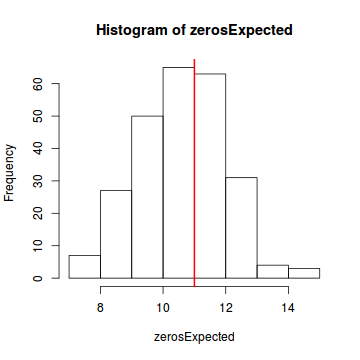

The DHARMa package also provides elegant ways to explore overdispersion, zero-inflation (and spatial and temporal autocorrelation). For these methods, the model is repeatedly refit with the simulated data to yield bootstrapped refitted residuals. The test for overdispersion compares the approximate deviance of the observed model with those of the simulated models.

testOverdispersion(simulateResiduals(dat.glmL, refit = T))

DHARMa nonparametric overdispersion test via comparison to simulation under H0 = fitted model

data: simulateResiduals(dat.glmL, refit = T)
dispersion = 1.1716, p-value = 0.388
alternative hypothesis: overdispersion

testZeroInflation(simulateResiduals(dat.glmL, refit = T))

DHARMa zero-inflation test via comparison to expected zeros with simulation under H0 = fitted model

data: simulateResiduals(dat.glmL, refit = T)
ratioObsExp = 0.99171, p-value = 0.404
alternative hypothesis: more

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.glmL, dat.glmP, dat.glmC)

         df      AIC
dat.glmL  2 15.65141
dat.glmP  2 15.45223
dat.glmC  2 16.10823
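In the output above, each AIC equals the corresponding deviance plus twice the number of parameters (for the logit model, 11.6514 + 2 × 2 = 15.6514). The sketch below reproduces that relationship on hypothetical simulated data (`aic.dat` and the other object names are assumptions for this example). Note that the equality between the deviance and $-2\times$ log-likelihood holds for ungrouped binary (0/1) responses.

```r
set.seed(2)
# Hypothetical binary data for illustration
aic.dat <- data.frame(x = runif(100, min = 1, max = 20))
aic.dat$y <- rbinom(100, size = 1, prob = plogis(-5 + 0.5 * aic.dat$x))

# Fit the same model under each of the three link functions
links <- c("logit", "probit", "cloglog")
mods <- lapply(links, function(l) glm(y ~ x, data = aic.dat, family = binomial(link = l)))
names(mods) <- links

k <- 2   # number of estimated parameters (intercept and slope)
aic.manual  <- sapply(mods, function(m) -2 * as.numeric(logLik(m)) + 2 * k)
aic.builtin <- sapply(mods, AIC)
dev <- sapply(mods, deviance)

cbind(deviance = dev, aic.manual, aic.builtin)
```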

| Extractor | Description |
|---|---|
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| fixef() | Extracts the fixed model coefficients |
| AIC() | Extracts the Akaike's Information Criterion from the model |
| extractAIC() | Extracts the generalized Akaike's Information Criterion from the model |
| summary() | Summarizes the important output and characteristics of the model |
| confint() | Computes confidence intervals for the model parameters |
| r2() | R-squared of the model |

Additional methods available can be explored via the following (substituting mod for the name of the fitted model).

methods(class=class(mod))

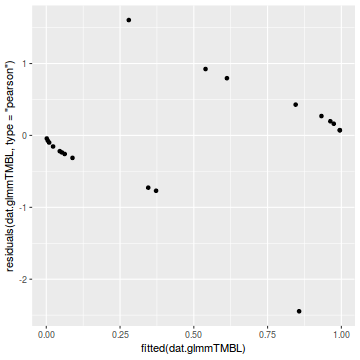

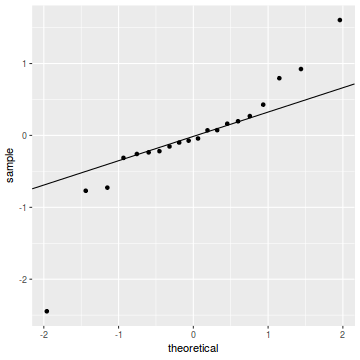

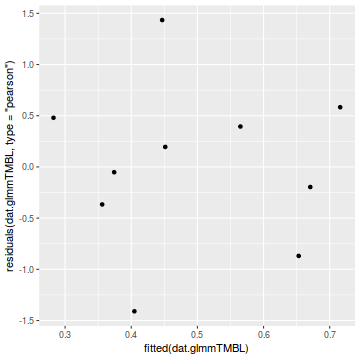

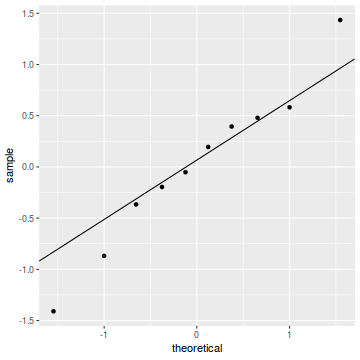

Let's explore the diagnostics - particularly the residuals

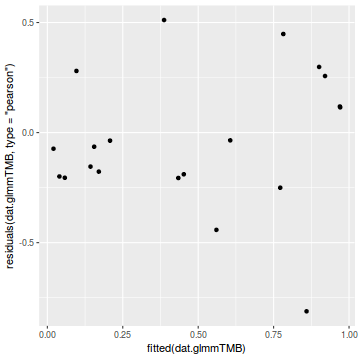

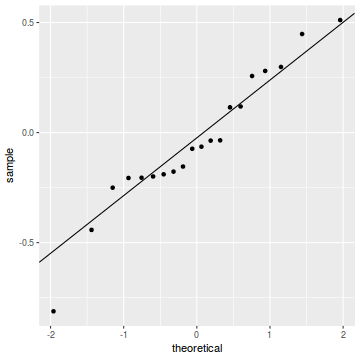

library(ggplot2)
ggplot(data = NULL) +
    geom_point(aes(y = residuals(dat.glmmTMBL, type = "pearson"), x = fitted(dat.glmmTMBL)))

qq.line = function(x) {
    # following four lines from base R's qqline()
    y <- quantile(x[!is.na(x)], c(0.25, 0.75))
    x <- qnorm(c(0.25, 0.75))
    slope <- diff(y)/diff(x)
    int <- y[1L] - slope * x[1L]
    return(c(int = int, slope = slope))
}
QQline = qq.line(resid(dat.glmmTMBL, type = "pearson"))
ggplot(data = NULL, aes(sample = resid(dat.glmmTMBL, type = "pearson"))) +
    stat_qq() +
    geom_abline(intercept = QQline[1], slope = QQline[2])

Unfortunately, unlike with linear models (Gaussian family), the expected distribution of the data (and thus the residuals) varies over the range of fitted values in numerous (often competing) ways. This makes it very difficult to diagnose misspecified generalized linear models (and to attribute causes) from standard residual plots. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, over-dispersion, zero-inflation).

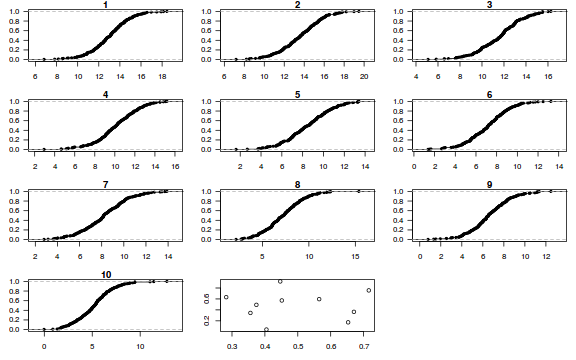

An alternative approach is to use simulated data from the fitted model to calculate an empirical cumulative distribution function from which residuals are generated as the values corresponding to the observed data along that function. The (rather Bayesian) rationale is that if the model is correctly specified, then the observed data can be considered as a random draw from the fitted model. If this is the case, then residuals calculated from empirical cumulative distribution functions based on data simulated from the fitted model should be totally uniform (flat) across the range of the linear predictor (fitted values) regardless of the model (Binomial, Poisson, linear, quadratic, hierarchical or otherwise). This uniformity can be explored by examining qq-plots (in which the trend should match a straight line) and plots of residuals against the fitted values and each individual predictor (in which the noise should be uniformly scattered, around 0.5 on the 0 to 1 residual scale, across the range of x-values).

To illustrate this, let's generate 10 simulated data sets from our fitted model. This will generate a matrix with as many rows as there were in the original data. Think of it as 10 attempts to simulate the original data from the model.

dat.sim <- simulate(dat.glmmTMBL, nsim = 10)
dat.sim

   sim_1.1 sim_1.2 sim_2.1 sim_2.2 sim_3.1 sim_3.2 sim_4.1 sim_4.2 sim_5.1 sim_5.2 sim_6.1 sim_6.2
1        0       1       0       1       0       1       0       1       0       1       0       1
2        0       1       0       1       0       1       0       1       0       1       0       1
3        0       1       0       1       0       1       0       1       0       1       0       1
4        0       1       0       1       0       1       0       1       0       1       0       1
5        0       1       0       1       0       1       0       1       0       1       0       1
6        0       1       0       1       0       1       0       1       0       1       0       1
7        0       1       0       1       0       1       0       1       0       1       0       1
8        0       1       0       1       0       1       0       1       0       1       0       1
9        0       1       0       1       0       1       1       0       1       0       1       0
10       0       1       1       0       0       1       0       1       0       1       0       1
11       0       1       0       1       0       1       0       1       1       0       0       1
12       0       1       0       1       1       0       0       1       1       0       0       1
13       0       1       0       1       1       0       0       1       1       0       1       0
14       1       0       1       0       0       1       0       1       1       0       1       0
15       0       1       1       0       1       0       1       0       1       0       1       0
16       1       0       1       0       1       0       1       0       1       0       1       0
17       1       0       1       0       1       0       1       0       1       0       1       0
18       1       0       1       0       1       0       1       0       1       0       1       0
19       1       0       1       0       1       0       1       0       1       0       1       0
20       1       0       1       0       1       0       1       0       1       0       1       0
   sim_7.1 sim_7.2 sim_8.1 sim_8.2 sim_9.1 sim_9.2 sim_10.1 sim_10.2
1        0       1       0       1       0       1        0        1
2        0       1       0       1       0       1        0        1
3        0       1       0       1       0       1        0        1
4        0       1       0       1       0       1        0        1
5        0       1       0       1       0       1        0        1
6        0       1       0       1       0       1        0        1
7        0       1       0       1       0       1        0        1
8        0       1       0       1       0       1        0        1
9        0       1       1       0       0       1        0        1
10       0       1       1       0       0       1        0        1
11       0       1       1       0       1       0        0        1
12       0       1       1       0       1       0        0        1
13       0       1       1       0       0       1        0        1
14       1       0       0       1       1       0        1        0
15       1       0       1       0       1       0        0        1
16       1       0       1       0       1       0        1        0
17       1       0       1       0       1       0        1        0
18       1       0       1       0       1       0        1        0
19       1       0       1       0       1       0        1        0
20       1       0       1       0       1       0        1        0

For binomial glmmTMB models, there are two columns (one for success and one for failure) for each simulation. We only need one of these (the success field), so we will strip out every second column.

Now for each row of these simulated data, we calculate the empirical cumulative distribution function and use this function to predict new y-values (=residuals) corresponding to each observed y-value. Actually, 10 simulated samples is totally inadequate; we should use at least 250. I initially used 10 just so we could explore the output. We will re-simulate 250 times. Note also that for integer responses (including binary), uniform random noise is added to both the simulated and observed data so that we can sensibly explore zero inflation. The resulting residuals will be on a scale from 0 to 1 and therefore the residual plot should be centered around a y-value of 0.5.

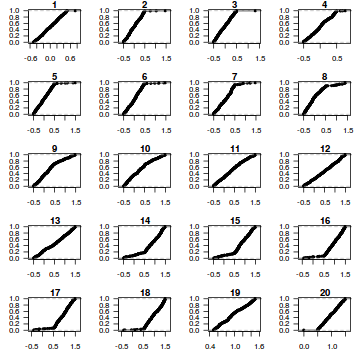

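# keep only the first column of each simulated pair
dat.sim <- simulate(dat.glmmTMBL, nsim = 250)[, seq(1, 250 * 2, by = 2)]
par(mfrow = c(5, 4), mar = c(3, 3, 1, 1))
resid <- NULL
for (i in 1:nrow(dat.sim)) {
    # ECDF of the jittered simulated values for observation i
    e = ecdf(data.matrix(dat.sim[i, ] + runif(250, -0.5, 0.5)))
    plot(e, main = i, las = 1)
    # evaluate the ECDF at the jittered observation (only the first of the 250 draws is used)
    resid[i] <- e(dat$y[i] + runif(250, -0.5, 0.5))[1]
}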

resid

 [1] 0.440 0.460 0.232 0.340 0.776 0.704 0.728 0.244 0.904 0.600 0.304 0.472 0.596 0.272 0.136 0.216
[17] 0.832 0.828 0.692 0.992

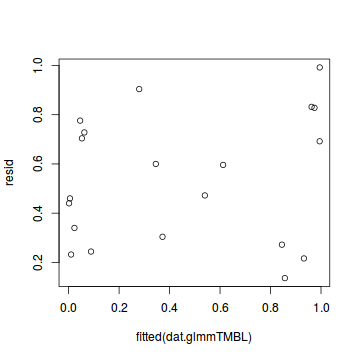

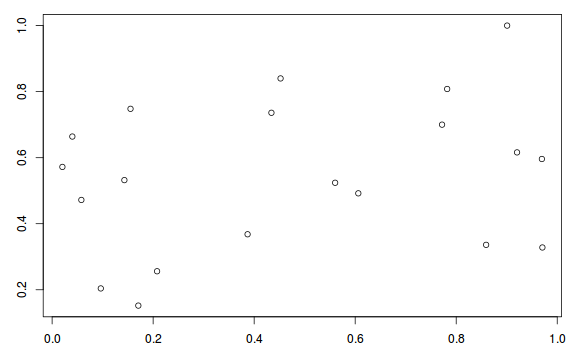

plot(resid ~ fitted(dat.glmmTMBL))

Conclusions: there are no obvious patterns in the qq-plot or residuals, or at least there are no obvious trends remaining that would be indicative of overdispersion or non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmmTMBL, type = "pearson")^2)
1 - pchisq(dat.resid, df.residual(dat.glmmTMBL))

[1] 0.8571451

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

1 - pchisq(as.numeric(-2 * logLik(dat.glmmTMBL)), df.residual(dat.glmmTMBL))

[1] 0.8647024

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.glmmTMBL, dat.glmmTMBP, dat.glmmTMBC)

             df      AIC
dat.glmmTMBL  2 15.65141
dat.glmmTMBP  2 15.45223
dat.glmmTMBC  2 16.10823

| Extractor | Description |
|---|---|
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| deviance() | Extracts the deviance from the model |
| AIC() | Extracts the Akaike's Information Criterion from the model |
| extractAIC() | Extracts the generalized Akaike's Information Criterion from the model |
| summary() | Summarizes the important output and characteristics of the model |
| confint() | Computes confidence intervals for the model parameters |
| Rsq() | R-squared of the model |
| plot() | Generates a series of diagnostic plots from the model |

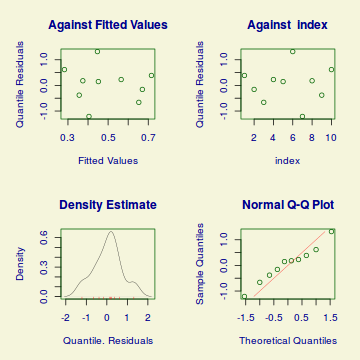

Let's explore the diagnostics - particularly the residuals

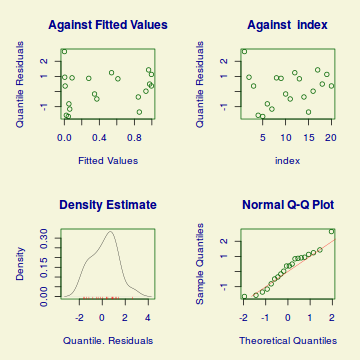

plot(dat.gamlssL)

******************************************************************

Summary of the Randomised Quantile Residuals

mean = 0.1849072

variance = 1.272725

coef. of skewness = 0.0372293

coef. of kurtosis = 2.229781

Filliben correlation coefficient = 0.9830794

******************************************************************

Unfortunately, unlike with linear models (Gaussian family), the expected distribution of the data (and thus the residuals) varies over the range of fitted values in numerous (often competing) ways. This makes it very difficult to diagnose misspecified generalized linear models (and to attribute causes) from standard residual plots. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, over-dispersion, zero-inflation).

Conclusions: there are no obvious patterns in the qq-plot or residuals, or at least there are no obvious trends remaining that would be indicative of overdispersion or non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.gamlssL, type = "weighted", what = "mu")^2)
1 - pchisq(dat.resid, dat.gamlssL$df.resid)

[1] 0.8571451

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

1 - pchisq(deviance(dat.gamlssL), dat.gamlssL$df.resid)

[1] 0.8647024

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.gamlssL, dat.gamlssP, dat.gamlssC)

            df      AIC
dat.gamlssP  2 15.45223
dat.gamlssL  2 15.65141
dat.gamlssC  2 16.10823

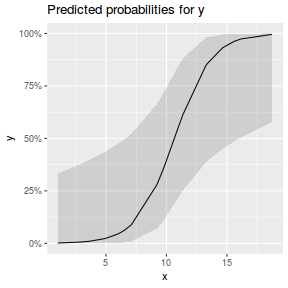

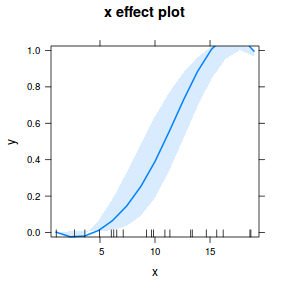

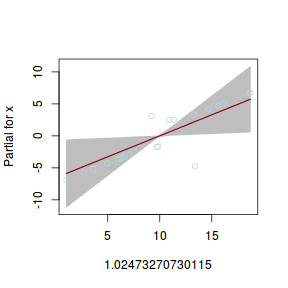

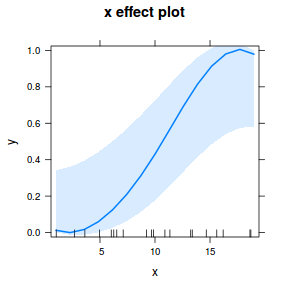

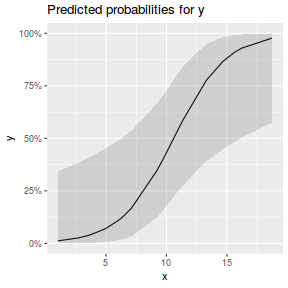

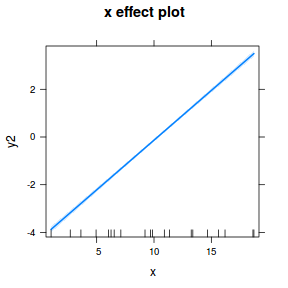

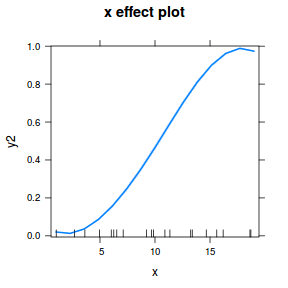

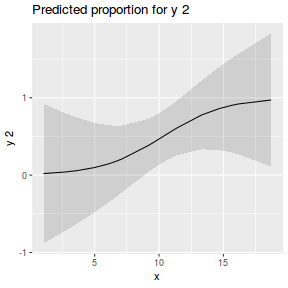

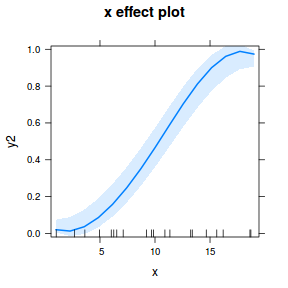

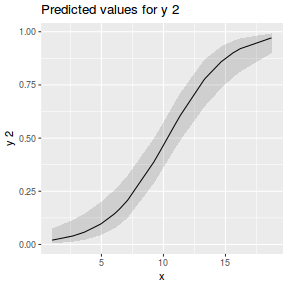

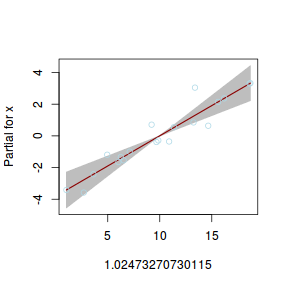

Exploring partial effects plots

## using effects
library(effects)
plot(allEffects(dat.glmL), type = "response")

## using sjPlot
library(sjPlot)
plot_model(dat.glmL, type = "pred", show.ci = TRUE, terms = "x")

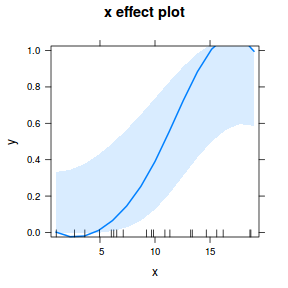

## using effects
library(effects)
plot(allEffects(dat.glmmTMBL), type = "response")

## using sjPlot
library(sjPlot)
plot_model(dat.glmmTMBL, type = "eff", show.ci = TRUE, terms = "x")

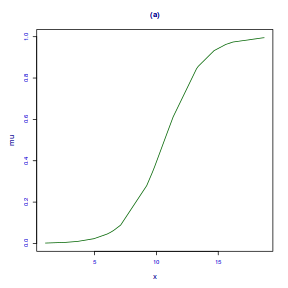

## using gamlss
term.plot(dat.gamlssL, x = x, se = T, partial = TRUE)

fittedPlot(dat.gamlssL, x = x)

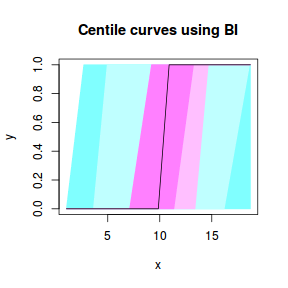

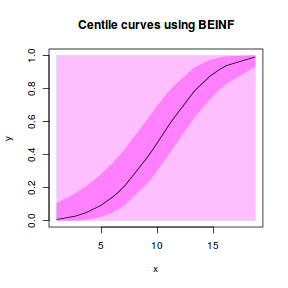

centiles.fan(dat.gamlssL, xvar = x)

## using effects
library(effects)
plot(allEffects(dat.gamlssL), type = "response")

Error in gamlss(formula = y ~ x, family = BI(), data = dat, method = "qr", : Method must be RS(), CG() or mixed()

## using sjPlot
library(sjPlot)
plot_model(dat.gamlssL, type = "pred", show.ci = TRUE, terms = "x")

Error in faminfo$family: $ operator is invalid for atomic vectors

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the Wald statistic ($z$) for the slope parameter.

summary(dat.glmL)

Call:

glm(formula = y ~ x, family = "binomial", data = dat)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.97157 -0.33665 -0.08191 0.30035 1.59628

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -6.9899 3.1599 -2.212 0.0270 *

x 0.6559 0.2936 2.234 0.0255 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 27.526 on 19 degrees of freedom

Residual deviance: 11.651 on 18 degrees of freedom

AIC: 15.651

Number of Fisher Scoring iterations: 6
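The Wald statistic reported in the coefficient table is simply the estimate divided by its standard error, with a two-sided p-value taken from the standard normal distribution. A minimal sketch using the (rounded) slope estimate and standard error from the table above; the object names are assumptions for this illustration:

```r
# Estimate and standard error for x, copied from the coefficient table above
est <- 0.6559
se <- 0.2936

z <- est / se               # Wald statistic
p <- 2 * pnorm(-abs(z))     # two-sided p-value from the standard normal
round(c(z = z, p = p), 4)
```

Because the inputs are rounded to four decimal places, the result should agree with the table only to about three decimal places.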

exp(coef(dat.glmL))

(Intercept)           x
0.000921113 1.926780616

Conclusions:

- Intercept: when x is equal to zero, the odds of a success are $9 \times 10^{-4}$, that is, a success is $9 \times 10^{-4}$ times as likely as a failure. Or the corollary, a failure is 1085.6432 times as likely as a success.

- Slope: for every 1 unit increase in x, the odds of success (relative to failure) change by a factor of 1.9268. That is, the odds of success nearly double with every 1 unit increase in x. From an inference testing perspective, we would reject the null hypothesis of no relationship.
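Odds-scale estimates are often reported with confidence intervals. One simple option, sketched below, is to compute Wald intervals on the log odds scale with `confint.default()` and then exponentiate. The data (`or.dat`) and model (`or.glm`) are hypothetical and simulated only for this example; profile likelihood intervals are a common alternative and would generally differ slightly.

```r
set.seed(3)
# Hypothetical binary data for illustration
or.dat <- data.frame(x = runif(100, min = 1, max = 20))
or.dat$y <- rbinom(100, size = 1, prob = plogis(-5 + 0.5 * or.dat$x))
or.glm <- glm(y ~ x, data = or.dat, family = binomial)

# Wald intervals are symmetric on the log odds (link) scale ...
ci.link <- confint.default(or.glm)
# ... and are back-transformed to the odds scale by exponentiating
or.tab <- exp(cbind(estimate = coef(or.glm), ci.link))
or.tab
```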

summary(dat.glmmTMBL)

Family: binomial ( logit )

Formula: y ~ x

Data: dat

AIC BIC logLik deviance df.resid

15.7 17.6 -5.8 11.7 18

Conditional model:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -6.9899 3.1599 -2.212 0.0270 *

x 0.6559 0.2936 2.234 0.0255 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

exp(fixef(dat.glmmTMBL)$cond)

 (Intercept)            x
0.0009211129 1.9267806990

Conclusions:

- Intercept: when x is equal to zero, the odds of a success are $9 \times 10^{-4}$, that is, a success is $9 \times 10^{-4}$ times as likely as a failure. Or the corollary, a failure is 1085.6432 times as likely as a success.

- Slope: for every 1 unit increase in x, the odds of success (relative to failure) change by a factor of 1.9268. That is, the odds of success nearly double with every 1 unit increase in x. From an inference testing perspective, we would reject the null hypothesis of no relationship.

summary(dat.gamlssL)

******************************************************************

Family: c("BI", "Binomial")

Call: gamlss(formula = y ~ x, family = BI(), data = dat)

Fitting method: RS()

------------------------------------------------------------------

Mu link function: logit

Mu Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -6.9899 3.1598 -2.212 0.0401 *

x 0.6559 0.2936 2.234 0.0384 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------------------------------

No. of observations in the fit: 20

Degrees of Freedom for the fit: 2

Residual Deg. of Freedom: 18

at cycle: 2

Global Deviance: 11.65141

AIC: 15.65141

SBC: 17.64287

******************************************************************

exp(coef(dat.gamlssL))

(Intercept) x 0.000921113 1.926780616

Conclusions:

- Intercept: when x is equal to zero, the odds of success are $9 \times 10^{-4}$. That is, a success is $9 \times 10^{-4}$ times as likely as a failure or, equivalently, a failure is 1085.6432 times as likely as a success.

- Slope: for every 1 unit increase in x, the odds of success change by a factor of 1.9268. That is, the odds of success nearly double with every 1 unit increase in x. From an inference testing perspective, we would reject the null hypothesis of no relationship.

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$quasi R^2 = 1-\left(\frac{deviance}{null~deviance}\right)$$

1 - (dat.glmL$deviance/dat.glmL$null.deviance)

[1] 0.5767057

1 - (as.numeric(-2 * logLik(dat.glmmTMBL))/as.numeric(-2 * logLik(update(dat.glmmTMBL, ~1))))

[1] 0.5767057

Rsq(dat.gamlssL)

[1] 0.5478346
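The value returned by `Rsq()` differs from the deviance-ratio values above. The following is a minimal sketch that assumes `Rsq()` is reporting a Cox-Snell style $R^2$ (the printed value is consistent with that formula, but this is an assumption rather than something stated in the output). It recomputes both quantities from the rounded deviances printed by `summary(dat.glmL)`; the variable names are illustrative only.

```r
# Deviances and sample size copied (rounded) from the summary(dat.glmL) output
null_dev  <- 27.526
resid_dev <- 11.651
n         <- 20

# Deviance-ratio (quasi) R^2
r2_dev <- 1 - resid_dev / null_dev

# Cox-Snell R^2: 1 - exp(-(null deviance - residual deviance) / n)
# (for ungrouped binary data the residual deviance equals -2 * logLik)
r2_cs <- 1 - exp(-(null_dev - resid_dev) / n)

round(c(deviance_ratio = r2_dev, cox_snell = r2_cs), 3)
```

The two measures are on different scales, so they should not be compared directly across packages.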

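We might also be interested in the LD50 - the value of $x$ at which the predicted probability is 0.5, and thus where the outcome switches from favoring 0 to favoring 1 (or vice versa, depending on the sign of the slope). LD50 is calculated as: $$LD50 = - \frac{intercept}{slope}$$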

-dat.glmL$coef[1]/dat.glmL$coef[2]

(Intercept) 10.65781

-fixef(dat.glmmTMBL)$cond[1]/fixef(dat.glmmTMBL)$cond[2]

(Intercept) 10.65781

-coef(dat.gamlssL)[1]/coef(dat.gamlssL)[2]

(Intercept) 10.65781
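The LD50 formula follows from setting the linear predictor to zero, since a log odds of zero corresponds to a probability of 0.5. As a rough sketch (using the rounded coefficients from the summary output, and a hypothetical helper `ld()` that is not part of any package), the same idea extends to other probability thresholds:

```r
# Coefficients copied (rounded) from the summary output above
b0 <- -6.9899  # intercept
b1 <-  0.6559  # slope

# Hypothetical helper: value of x at which the predicted probability equals p
ld <- function(p, b0, b1) (qlogis(p) - b0) / b1

ld50 <- ld(0.5, b0, b1)   # reduces to -b0 / b1 because qlogis(0.5) = 0
ld50
plogis(b0 + b1 * ld50)    # predicted probability at the LD50

# Other thresholds, e.g. the x at which the probability reaches 0.9
ld(0.9, b0, b1)
```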

Logistic regression outcomes are often expressed in terms of odds ratios. An odds ratio is the ratio of the odds (which are themselves the ratio of the probability of one state to the probability of the alternate state) under two different scenarios. For example, the odds of success when one condition is met divided by the odds of success when it is not met.

In logistic regression, the parameters are on a log odds scale. Recall that the slope parameter of a model is typically interpreted as the change in expected response per unit change in predictor. Therefore, for logistic regression, the slope parameter represents the change (difference) in log odds per unit change in the predictor. Based on simple log laws, if we exponentiate this difference (slope), it becomes a division, and thus a ratio - a ratio of odds.
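The log-law argument can be written out explicitly. Writing $odds(x) = \pi(x)/(1-\pi(x))$ for the odds at a given value of the predictor (notation introduced here for illustration):

```latex
\begin{align*}
\log\left(odds(x)\right) &= \beta_0 + \beta_1 x \\
\log\left(odds(x+1)\right) - \log\left(odds(x)\right) &= \beta_1 \\
\frac{odds(x+1)}{odds(x)} &= e^{\beta_1}
\end{align*}
```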

Alternatively, we could express this odds ratio in terms of relative risk. Whereas the odds ratio is the ratio of odds, relative risk is the ratio of probabilities. If risk is the probability of a state (e.g. the probability of being absent), then we can express the effect of a treatment as the change in probability (risk) due to the treatment. This is the risk ratio and is calculated as: $$RR = \frac{OR}{1 - P_o + (P_o \cdot OR)}$$ where $OR$ is the odds ratio and $P_o$ is the proportion of the incidence in the response - essentially the mean of the binary response.

exp(coef(dat.glmL)[2])

x 1.926781

# Or for arbitrary unit changes
library(oddsratio)
## 1 unit change
or_glm(dat, dat.glmL, inc = list(x = 1))

predictor oddsratio CI_low (2.5 %) CI_high (97.5 %) increment

1 x 1.927 1.278 4.551 1

## 5 units change
or_glm(dat, dat.glmL, inc = list(x = 5))

predictor oddsratio CI_low (2.5 %) CI_high (97.5 %) increment

1 x 26.556 3.407 1951.831 5
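The odds ratio for a change of several units is the one unit odds ratio compounded, because the slope is additive on the log odds scale. A small sketch using the rounded slope from the summary output (so the results agree with the printed values only to rounding):

```r
b1 <- 0.6559          # slope on the log odds scale (rounded, from the summary output)

or_1 <- exp(b1)       # odds ratio for a 1 unit increase in x
or_5 <- exp(5 * b1)   # odds ratio for a 5 unit increase in x

# The 5 unit odds ratio is the 1 unit odds ratio raised to the fifth power
all.equal(or_5, or_1^5)

round(c(one_unit = or_1, five_units = or_5), 2)
```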

## Alternatively, we could express this as relative risk RR <- OR / (1 - P0 + (P0 * OR)) where P0 is
## the proportion of incidence in response
sjstats::or_to_rr(exp(coef(dat.glmL)[2]), mean(model.frame(dat.glmL)[[1]]))

x 1.359711

sjstats::odds_to_rr(dat.glmL)

RR lower.ci upper.ci

(Intercept) 0.00167349 1.814187e-07 0.1349919

x 1.35971129 1.135840e+00 1.7517501

- the odds ratio is 1.9267806. For a one unit increase in x, the odds of success nearly double.

- the associated relative risk is 1.3597113. Relative to the baseline incidence, every one unit increase in x results in a 36% increase in the probability (risk) of y being 1.
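The conversion from odds ratio to relative risk can also be done by hand. The sketch below uses a hypothetical helper (`or_to_rr_manual()`, not a package function) and an assumed baseline incidence of 0.45, chosen for illustration because it happens to reproduce the printed relative risk; the tutorial itself takes the baseline from the mean of the response.

```r
# Hypothetical helper: convert an odds ratio to a relative risk
# given a baseline incidence p0
or_to_rr_manual <- function(or, p0) or / (1 - p0 + p0 * or)

or <- 1.926781   # odds ratio from exp(coef(...)) above
p0 <- 0.45       # ASSUMPTION: baseline incidence, for illustration only

or_to_rr_manual(or, p0)

# When the outcome is rare, the relative risk approaches the odds ratio
or_to_rr_manual(or, 0.001)
```

The relative risk therefore depends on the baseline incidence, whereas the odds ratio does not.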

exp(fixef(dat.glmmTMBL)$cond[2])

x 1.926781

## Alternatively, we could express this as relative risk RR <- OR / (1 - P0 + (P0 * OR)) where P0 is
## the proportion of incidence in response
sjstats::or_to_rr(exp(fixef(dat.glmmTMBL)$cond[2]), mean(model.frame(dat.glmmTMBL)[[1]]))

x 1.359711

- the odds ratio is 1.9267806. For a one unit increase in x, the odds of success nearly double.

- the associated relative risk is 1.3597113. Relative to the baseline incidence, every one unit increase in x results in a 36% increase in the probability (risk) of y being 1.

exp(coef(dat.gamlssL)[2])

x 1.926781

## Alternatively, we could express this as relative risk RR <- OR / (1 - P0 + (P0 * OR)) where P0 is
## the proportion of incidence in response
sjstats::or_to_rr(exp(coef(dat.gamlssL)[2]), mean(model.frame(dat.gamlssL)[[1]]))

x 1.359711

- the odds ratio is 1.9267806. For a one unit increase in x, the odds of success nearly double.

- the associated relative risk is 1.3597113. Relative to the baseline incidence, every one unit increase in x results in a 36% increase in the probability (risk) of y being 1.

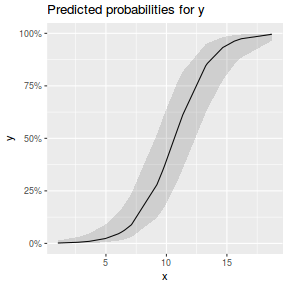

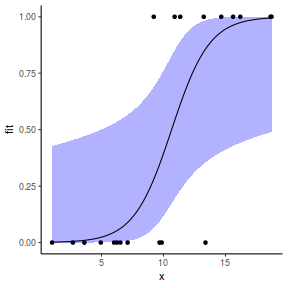

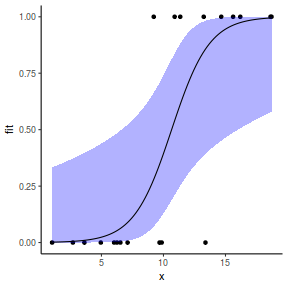

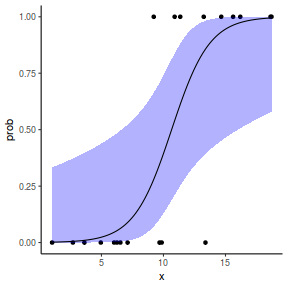

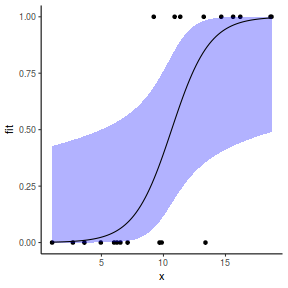

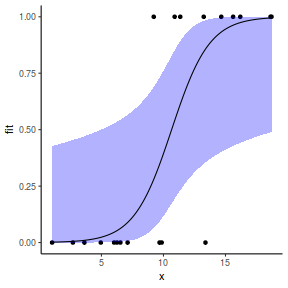

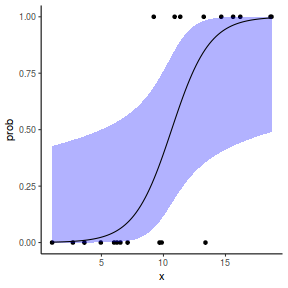

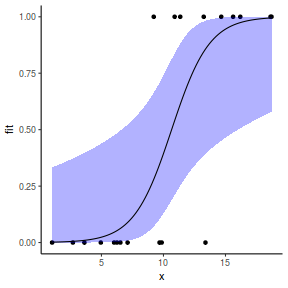

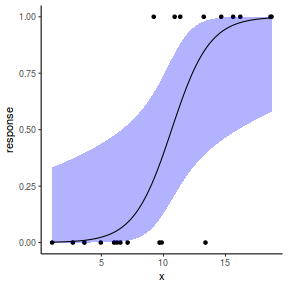

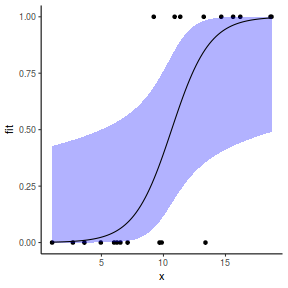

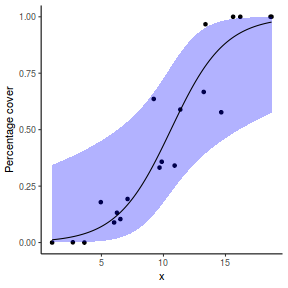

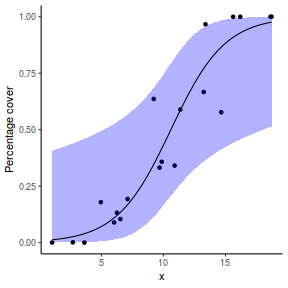

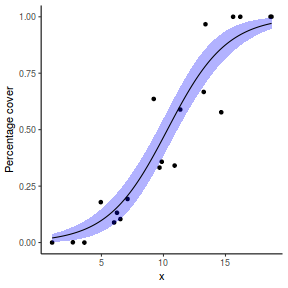

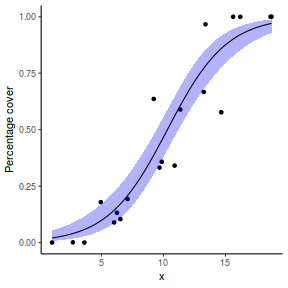

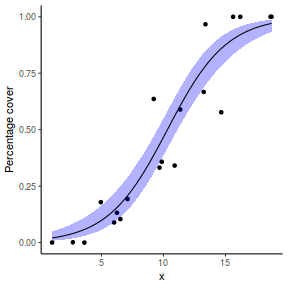

Finally, we will create a summary plot.

## using predict
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
fit = predict(dat.glmL, newdata = newdata, type = "link", se = TRUE)
q = qt(0.975, df = df.residual(dat.glmL))
newdata = cbind(newdata, fit = binomial()$linkinv(fit$fit),
    lower = binomial()$linkinv(fit$fit - q * fit$se.fit),
    upper = binomial()$linkinv(fit$fit + q * fit$se.fit))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## using effects
library(effects)
xlevels = as.list(with(dat, data.frame(x = seq(min(x), max(x), len = 100))))
newdata = as.data.frame(Effect("x", dat.glmL, xlevels = xlevels))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.glmL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = prob, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = asymp.LCL, ymax = asymp.UCL), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = coef(dat.glmL)
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.glmL) %*% t(Xmat)))
q = qt(0.975, df = df.residual(dat.glmL))
newdata = cbind(newdata, fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()
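The manual approach and `predict()` should give the same fitted values and standard errors on the link scale. The following self-contained sketch checks this on simulated data; the data frame `toy` and the model `toy.glm` are hypothetical and exist only for this illustration.

```r
set.seed(1)
# Hypothetical binary data, for illustration only
x <- runif(50, 1, 20)
y <- rbinom(50, size = 1, prob = plogis(-5 + 0.5 * x))
toy <- data.frame(x, y)
toy.glm <- glm(y ~ x, data = toy, family = binomial)

newdata <- data.frame(x = seq(min(toy$x), max(toy$x), length.out = 100))

# Fitted values and standard errors from predict() on the link scale
pred <- predict(toy.glm, newdata = newdata, type = "link", se.fit = TRUE)

# The same quantities computed manually from the model matrix
Xmat <- model.matrix(~x, data = newdata)
fit <- as.vector(Xmat %*% coef(toy.glm))
se <- sqrt(diag(Xmat %*% vcov(toy.glm) %*% t(Xmat)))

all.equal(as.numeric(pred$fit), fit)
all.equal(as.numeric(pred$se.fit), as.numeric(se))
```

Intervals are built on the link scale and then back-transformed, which keeps the limits inside (0, 1).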

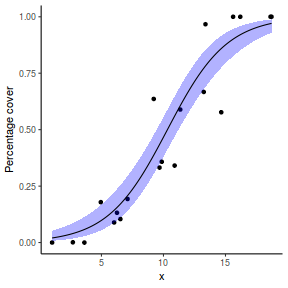

## using predict
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
fit = predict(dat.glmmTMBL, newdata = newdata, type = "link", se = TRUE)
q = qt(0.975, df = df.residual(dat.glmmTMBL))
newdata = cbind(newdata, fit = binomial()$linkinv(fit$fit),
    lower = binomial()$linkinv(fit$fit - q * fit$se.fit),
    upper = binomial()$linkinv(fit$fit + q * fit$se.fit))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## using effects
library(effects)
xlevels = as.list(with(dat, data.frame(x = seq(min(x), max(x), len = 100))))
newdata = as.data.frame(Effect("x", dat.glmmTMBL, xlevels = xlevels))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.glmmTMBL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = prob, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower.CL, ymax = upper.CL), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = fixef(dat.glmmTMBL)$cond
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.glmmTMBL)$cond %*% t(Xmat)))
q = qnorm(0.975)
newdata = cbind(newdata, fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## using predict - unfortunately, this still does not support se!
## using effects - not yet supported
## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.gamlssL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = response, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = asymp.LCL, ymax = asymp.UCL), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = coef(dat.gamlssL)
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.gamlssL) %*% t(Xmat)))
q = qt(0.975, df = df.residual(dat.gamlssL))
newdata = cbind(newdata, fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = y)) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() + theme_classic()

Grouped binary data (and proportional data)

Scenario and Data

In the previous demonstration, the response variable represented the state of a single item per level of the predictor variable ($x$). That single item could be observed having a value of either 1 or 0. Another common situation is to observe the number of items in one of two states (typically dead or alive) for each level of a treatment. For example, you could tally up the number of germinated and non-germinated seeds out of a bank of 10 seeds at each of 8 temperature or nutrient levels.

Recall that the binomial distribution represents the density (probability) of all possible successes (germinations) out of a total of $N$ items (seeds). Hence the binomial distribution is also a suitable error distribution for such grouped binary data.

Similarly, proportional data (in which the response represents the frequency of events out of a set total of events) is also a good match for the binomial distribution. After all, the binomial distribution represents the distribution of successes out of a set number of trials. Examples of such data might be the proportion of female kangaroos with joey or the proportion of seeds taken by ants etc.

Indeed, binary data (ungrouped: presence/absence, dead/alive etc) is just a special case of proportional data in which the total (number of trials) in each case is 1. That is, the data are 1 out of 1 or 0 out of 1.
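This equivalence can be checked numerically. In the sketch below (simulated, hypothetical data; object names are illustrative), grouped counts are expanded to one row per trial and both versions are fitted with `glm()`. The coefficient estimates should agree to within the fitting tolerance.

```r
set.seed(42)
# Hypothetical grouped data: 8 groups with 10 trials each
grouped <- data.frame(x = 1:8, total = 10)
grouped$success <- rbinom(8, size = grouped$total, prob = plogis(-2 + 0.5 * grouped$x))
grouped$failure <- grouped$total - grouped$success

# Expand to one row per individual trial (1 = success, 0 = failure)
ungrouped <- data.frame(
    x = rep(grouped$x, times = grouped$total),
    y = unlist(Map(function(s, f) rep(c(1, 0), times = c(s, f)),
                   grouped$success, grouped$failure))
)

fit.grouped <- glm(cbind(success, failure) ~ x, data = grouped, family = binomial)
fit.ungrouped <- glm(y ~ x, data = ungrouped, family = binomial)

# Same estimates from the grouped and the ungrouped representation
all.equal(coef(fit.grouped), coef(fit.ungrouped), tolerance = 1e-5)
```

The residual deviances of the two fits are not the same, because each is measured against a different saturated model.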

For this demonstration, we will model the number of successes against a uniformly distributed predictor ($x$). The number of trials in each group (level of the predictor) will vary slightly (yet randomly) so as to mimic complications that inevitably occur in real experiments.

- the number of levels of $x$ = 10

- the continuous $x$ variable is a random uniform spread of measurements between 10 and 20

- the rate of change in log odds ratio per unit change in x (slope) = -0.25. The magnitude of the slope is an indicator of how abruptly the log odds ratio changes. A very abrupt change would occur if there was very little variability in the trend. That is, a large slope would be indicative of a threshold effect. A lower slope indicates a gradual change in odds ratio and thus a less obvious and precise switch between the likelihood of 1 vs 0.

- the inflection point (point where the slope of the line is greatest) = 15. The inflection point indicates the value of the predictor ($x$) where the probability of success is 50% (log odds of zero), switching between 1 being more likely and 0 being more likely. This is also known as the LD50.

- the intercept (which has no real interpretation) is equal to the negative of the slope multiplied by the inflection point

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor and back-transform it onto the probability scale via the inverse logit.

- generate random numbers of trials per group by drawing integers out of a binomial distribution with a total size of 20 and probability of 0.9. Hence the maximum number of trials per group will be 20, yet some will be less than that.

- the number of success per group are drawn from a binomial distribution with a total size equal to the trial sizes generated in the previous step and probabilities equal to the expected $y$ values

- finally, the number of failures per group are calculated as the difference between the total trial size and number of successes per group.

set.seed(8)
# The number of levels of x
n.x <- 10
# Create x values that are uniformly distributed throughout the range of 10 to 20
x <- sort(runif(n = n.x, min = 10, max = 20))
# The slope is the rate of change in log odds ratio for each unit change in x
# the smaller the slope, the slower the change (more variability in data too)
slope = -0.25
# Inflection point is where the slope of the line is greatest
# this is also the LD50 point
inflect <- 15
# Intercept (no interpretation)
intercept <- -1 * (slope * inflect)
# The linear predictor
linpred <- intercept + slope * x
# Predicted y values
y.pred <- exp(linpred)/(1 + exp(linpred))
# Add some noise: draw the number of trials and successes per group
n.trial <- rbinom(n = n.x, 20, prob = 0.9)
success <- rbinom(n = n.x, size = n.trial, prob = y.pred)
failure <- n.trial - success
dat <- data.frame(success, failure, x)

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of a binary response - a binomial is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- the relationship between the linear predictor (right hand side of the regression formula) and the link function should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

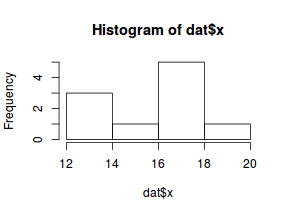

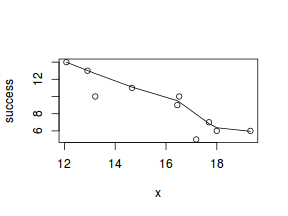

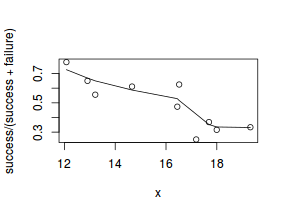

So let's explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between either the number of successes ($success$) or the number of failures ($failure$) and the predictor ($x$). Note that this will not account for the differences in trial size per group, and so a scatterplot of the proportion of successes (the number of successes divided by the total number of trials) against the predictor ($x$) might be more appropriate.

hist(dat$x)

# now for the scatterplot
plot(success ~ x, dat)
with(dat, lines(lowess(success ~ x)))

# scatterplot standardized for trial size
plot(success/(success + failure) ~ x, dat)
with(dat, lines(lowess(success/(success + failure) ~ x)))

Conclusions: the predictor ($x$) does not display any skewness (although it is not all that uniform - random data hey!) or other issues that might lead to non-linearity. The lowess smoother on either scatterplot does not display major deviations from a standard sigmoidal curve and thus linearity is likely to be satisfied. Violations of linearity could be addressed by either:

- define a non-linear linear predictor (such as a polynomial, spline or other non-linear function)

- transform the scale of the predictor variables

Model fitting or statistical analysis

We perform the logistic regression using the glm() function. Clearly the number of successes is also dependent on the number of trials. Larger numbers of trials might be expected to yield higher numbers of successes. Model weights can be used to account for differences in the total numbers of trials within each group. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function).

For example:

- family="binomial" or equivalently family=binomial(link="logit")

- family=binomial(link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes.

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$log\left(\frac{\pi}{1-\pi}\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.glmL <- glm(cbind(success, failure) ~ x, data = dat, family = "binomial")
# OR
dat <- within(dat, total <- success + failure)
dat.glmL <- glm(I(success/total) ~ x, data = dat, family = "binomial", weights = total)

- probit

$$\phi^{-1}\left(\pi\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.glmP <- glm(cbind(success, failure) ~ x, data = dat, family = binomial(link = "probit"))
# OR
dat <- within(dat, total <- success + failure)
dat.glmP <- glm(I(success/total) ~ x, data = dat, family = binomial(link = "probit"), weights = total)

- complementary log-log

$$log(-log(1-\pi))=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.glmC <- glm(cbind(success, failure) ~ x, data = dat, family = binomial(link = "cloglog"))
# OR
dat <- within(dat, total <- success + failure)
dat.glmC <- glm(I(success/total) ~ x, data = dat, family = binomial(link = "cloglog"), weights = total)
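To get a feel for how the three links differ, each family object exposes its link and inverse link as functions. The short sketch below evaluates them at a few probabilities chosen for illustration. Note that the logit and probit links are both zero at a probability of 0.5, whereas the complementary log-log link is asymmetric and is not.

```r
p <- c(0.1, 0.5, 0.9)

# Each family object carries its link (linkfun) and inverse link (linkinv)
logit <- binomial(link = "logit")
probit <- binomial(link = "probit")
cloglog <- binomial(link = "cloglog")

links <- cbind(p,
    logit = logit$linkfun(p),
    probit = probit$linkfun(p),
    cloglog = cloglog$linkfun(p))
round(links, 3)

# The inverse link maps the linear predictor back onto the (0, 1) scale
cloglog$linkinv(cloglog$linkfun(p))
```

Because the links are on different scales, the slopes from the three fitted models are not directly comparable with one another.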

We perform the logistic regression using the glmmTMB() function. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function).

For example:

- family="binomial" or equivalently family=binomial(link="logit")

- family=binomial(link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes (see below).

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$log\left(\frac{\pi}{1-\pi}\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

library(glmmTMB)
dat.glmmTMBL <- glmmTMB(cbind(success, failure) ~ x, data = dat, family = "binomial")

- probit

$$\phi^{-1}\left(\pi\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.glmmTMBP <- glmmTMB(cbind(success, failure) ~ x, data = dat, family = binomial(link = "probit"))

- complementary log-log

$$log(-log(1-\pi))=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.glmmTMBC <- glmmTMB(cbind(success, failure) ~ x, data = dat, family = binomial(link = "cloglog"))

We perform the logistic regression using the gamlss() function. The most important (=commonly used) parameters/arguments for logistic regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). This is a different specification from glm() and supports a much wider variety of families, including mixtures.

For example:

- family=BI() or equivalently family=BI(mu.link="logit")

- family=BI(mu.link="probit")

- data: the data frame containing the data

- weights: vector of weights to be used in the fitting process. These are particularly useful when dealing with grouped binary data from unequal sample sizes (see below).

I will demonstrate logistic regression with a range of possible link functions (each of which yield different parameter interpretations):

- logit

$$log\left(\frac{\pi}{1-\pi}\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

library(gamlss)
dat.gamlssL <- gamlss(cbind(success, failure) ~ x, data = dat, family = BI())

GAMLSS-RS iteration 1: Global Deviance = 38.6222

GAMLSS-RS iteration 2: Global Deviance = 38.6222

- probit

$$\phi^{-1}\left(\pi\right)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.gamlssP <- gamlss(cbind(success, failure) ~ x, data = dat, family = BI(mu.link = "probit"))

GAMLSS-RS iteration 1: Global Deviance = 38.6267

GAMLSS-RS iteration 2: Global Deviance = 38.6267

- complementary log-log

$$log(-log(1-\pi))=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim Binom(\lambda)$$

dat.gamlssC <- gamlss(cbind(success, failure) ~ x, data = dat, family = BI(mu.link = "cloglog"))

GAMLSS-RS iteration 1: Global Deviance = 38.5955

GAMLSS-RS iteration 2: Global Deviance = 38.5955

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data.

Let's explore the diagnostics - particularly the residuals

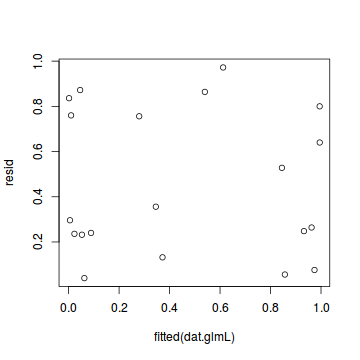

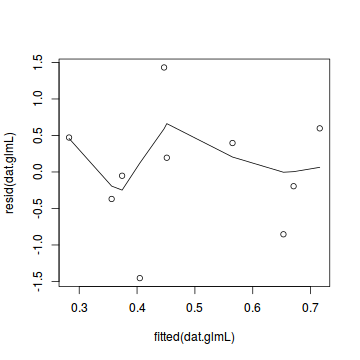

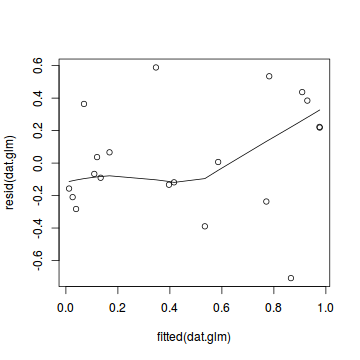

plot(resid(dat.glmL) ~ fitted(dat.glmL))
lines(lowess(resid(dat.glmL) ~ fitted(dat.glmL)))

library(DHARMa)
dat.sim <- simulateResiduals(dat.glmL)
plotSimulatedResiduals(dat.sim)

Conclusions: there are no obvious patterns in the residuals, or at least there are no obvious trends remaining that would be indicative of non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmL, type = "pearson")^2)
1 - pchisq(dat.resid, dat.glmL$df.resid)

[1] 0.6763891

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

1 - pchisq(dat.glmL$deviance, dat.glmL$df.resid)

[1] 0.6646471

- using the DHARMa package

testUniformity(dat.sim)

One-sample Kolmogorov-Smirnov test

data: simulationOutput$scaledResiduals

D = 0.18, p-value = 0.8473

alternative hypothesis: two-sided

In the absence of any evidence of a lack of fit, there are unlikely to be any issues with overdispersion. However, it is worth estimating the dispersion parameter nonetheless.

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmL, type = "pearson")^2)
dat.resid/dat.glmL$df.resid

[1] 0.7174332

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

dat.glmL$deviance/dat.glmL$df.resid

[1] 0.7305604
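As a worked check, the deviance-based estimate is simply the residual deviance divided by its degrees of freedom. Using the residual deviance ($D$) of 5.8445 on 8 degrees of freedom reported in the `summary(dat.glmL)` output, and writing $\hat{\phi}$ for the estimated dispersion (notation introduced here):

```latex
\hat{\phi} = \frac{D}{df_{resid}} = \frac{5.8445}{8} \approx 0.7306
```

Values close to 1 are consistent with the binomial assumption; values substantially greater than 1 would suggest overdispersion.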

- using the DHARMa package

testOverdispersion(simulateResiduals(dat.glmL, refit = T))

Error in ecdf(out$refittedResiduals[i, ] + runif(out$nSim, -0.5, 0.5)): 'x' must have 1 or more non-missing values

testZeroInflation(simulateResiduals(dat.glmL, refit = T))

Error in ecdf(out$refittedResiduals[i, ] + runif(out$nSim, -0.5, 0.5)): 'x' must have 1 or more non-missing values

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.glmL, dat.glmP, dat.glmC)

library(ggplot2)
ggplot(data = NULL) +
    geom_point(aes(y = residuals(dat.glmmTMBL, type = "pearson"), x = fitted(dat.glmmTMBL)))

qq.line = function(x) {
    # following four lines from base R's qqline()
    y <- quantile(x[!is.na(x)], c(0.25, 0.75))
    x <- qnorm(c(0.25, 0.75))
    slope <- diff(y)/diff(x)
    int <- y[1L] - slope * x[1L]
    return(c(int = int, slope = slope))
}
QQline = qq.line(resid(dat.glmmTMBL, type = "pearson"))
ggplot(data = NULL, aes(sample = resid(dat.glmmTMBL, type = "pearson"))) +
    stat_qq() + geom_abline(intercept = QQline[1], slope = QQline[2])

dat.sim <- simulate(dat.glmmTMBL, n = 250)[, seq(1, 250 * 2, by = 2)]
par(mfrow = c(4, 3), mar = c(3, 3, 1, 1))
resid <- NULL
for (i in 1:nrow(dat.sim)) {
    e = ecdf(data.matrix(dat.sim[i, ] + runif(250, -0.5, 0.5)))
    plot(e, main = i, las = 1)
    # a single jitter value, since resid[i] holds one residual per observation
    resid[i] <- e(dat$success[i] + runif(1, -0.5, 0.5))
}
resid

[1] 0.756 0.364 0.172 0.596 0.572 0.920 0.040 0.492 0.344 0.632

plot(resid ~ fitted(dat.glmmTMBL))

Conclusions: there are no obvious patterns in the residuals, or at least there are no obvious trends remaining that would be indicative of non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmmTMBL, type = "pearson")^2)
1 - pchisq(dat.resid, df.residual(dat.glmmTMBL))

[1] 0.6763891

In the absence of any evidence of a lack of fit, there are unlikely to be any issues with overdispersion. However, it is worth estimating the dispersion parameter nonetheless.

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.glmmTMBL, type = "pearson")^2)
dat.resid/df.residual(dat.glmmTMBL)

[1] 0.7174332

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.glmmTMBL, dat.glmmTMBP, dat.glmmTMBC)

df AIC

dat.glmmTMBL 2 42.62224

dat.glmmTMBP 2 42.62673

dat.glmmTMBC 2 42.59548
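The AIC values can be reconstructed from the log-likelihood, which also explains why the "deviance" of 38.6 reported by glmmTMB() and gamlss() differs from the residual deviance of 5.84 reported by glm(): the former is $-2 \times logLik$, whereas the latter is measured relative to the saturated model. A small sketch using the log-likelihood of -19.31112 that `glance()` reports for the logit model (variable names are illustrative):

```r
loglik <- -19.31112   # log-likelihood of the logit model, as reported by glance()
k <- 2                # number of estimated parameters (intercept and slope)

global_deviance <- -2 * loglik
aic <- global_deviance + 2 * k
c(global_deviance = global_deviance, AIC = aic)

# AIC differences relative to the best model (values from the AIC() table)
aics <- c(logit = 42.62224, probit = 42.62673, cloglog = 42.59548)
round(aics - min(aics), 3)
```

The differences are tiny (well under one AIC unit), so these data give no practical basis for preferring one link over another.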

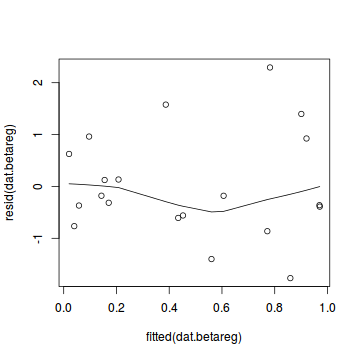

plot(dat.gamlssL)

******************************************************************

Summary of the Randomised Quantile Residuals

mean = 0.0461986

variance = 0.4918538

coef. of skewness = -0.04136291

coef. of kurtosis = 2.284877

Filliben correlation coefficient = 0.9844053

******************************************************************

Unfortunately, unlike with linear models (Gaussian family), the expected distribution of the data (and thus the residuals) varies over the range of fitted values in numerous (often competing) ways. This makes it very difficult to diagnose misspecified generalized linear models (and to attribute causes) from standard residual plots. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, over-dispersion, zero-inflation).

Conclusions: there are no obvious patterns in the qq-plot or residuals, or at least there are no obvious trends remaining that would be indicative of overdispersion or non-linearity.

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

dat.resid <- sum(resid(dat.gamlssL, type = "weighted", what = "mu")^2)
1 - pchisq(dat.resid, dat.gamlssL$df.resid)

[1] 0.6763891

In this demonstration, we fitted three logistic regressions (one for each link function). We could explore the residual plots of each of these for the purpose of comparing fit. We can also compare the fit of each of these three models via AIC (or deviance).

AIC(dat.gamlssL, dat.gamlssP, dat.gamlssC)

df AIC

dat.gamlssC 2 42.59548

dat.gamlssL 2 42.62224

dat.gamlssP 2 42.62673

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the Wald statistic ($z$) for the slope parameter.

summary(dat.glmL)

Call:

glm(formula = I(success/total) ~ x, family = "binomial", data = dat,

weights = total)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.45415 -0.32619 0.07158 0.45284 1.43145

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 4.01854 1.08802 3.693 0.000221 ***

x -0.25622 0.06803 -3.766 0.000166 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 21.0890 on 9 degrees of freedom

Residual deviance: 5.8445 on 8 degrees of freedom

AIC: 42.622

Number of Fisher Scoring iterations: 3

exp(coef(dat.glmL))

(Intercept) x 55.6200133 0.7739699

library(broom)
tidy(dat.glmL)

# A tibble: 2 x 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 4.02 1.09 3.69 0.000221

2 x -0.256 0.0680 -3.77 0.000166

glance(dat.glmL)

# A tibble: 1 x 7

null.deviance df.null logLik AIC BIC deviance df.residual

<dbl> <int> <dbl> <dbl> <dbl> <dbl> <int>

1 21.1 9 -19.3 42.6 43.2 5.84 8

Conclusions:

- Intercept: when x is equal to zero, the odds of success are 55.62. That is, a success is 55.62 times as likely as a failure.

- Slope: for every 1 unit increase in x, the odds of success change by a factor of 0.774. That is, the odds of success decline to roughly three quarters of their previous value (a decrease of about 23%) with every 1 unit increase in x. From an inference testing perspective, we would reject the null hypothesis of no relationship.
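These interpretations can be reproduced from the coefficient table. The sketch below uses the rounded estimates from the summary output (variable names are illustrative) to express the slope as a percentage change in odds and to recover the LD50, which can be compared against the inflection point of 15 used to simulate the data.

```r
b0 <-  4.01854   # intercept (from the summary output)
b1 <- -0.25622   # slope

or <- exp(b1)                 # multiplicative change in odds per unit of x
pct_change <- 100 * (or - 1)  # percentage change in odds per unit of x
ld50 <- -b0 / b1              # x at which the predicted probability is 0.5

round(c(odds_ratio = or, pct_change = pct_change, LD50 = ld50), 3)
```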

summary(dat.glmmTMBL)

Family: binomial ( logit )

Formula: cbind(success, failure) ~ x

Data: dat

AIC BIC logLik deviance df.resid

42.6 43.2 -19.3 38.6 8

Conditional model:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 4.01854 1.08804 3.693 0.000221 ***

x -0.25622 0.06803 -3.766 0.000166 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

exp(fixef(dat.glmmTMBL)$cond)

(Intercept) x

55.6200323 0.7739699

library(broom)
library(broom.mixed)  # devtools::install_github('bbolker/broom.mixed')
tidy(dat.glmmTMBL)

effect component group term estimate std.error statistic p.value

1 fixed cond fixed (Intercept) 4.0185434 1.08803755 3.693387 0.0002212871

2 fixed cond fixed x -0.2562223 0.06803094 -3.766261 0.0001657106

glance(dat.glmmTMBL)

sigma logLik AIC BIC df.residual

1 1 -19.31112 42.62224 43.22741 8
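The information criteria in this output follow directly from the log-likelihood. As a small sketch, the values can be reproduced by hand from the reported log-likelihood, the number of estimated parameters (two: intercept and slope) and the number of observations (10). The variable names here are illustrative only.

```r
# Values copied from the glance() output
logLik_fit <- -19.31112
k <- 2    # estimated parameters: intercept and slope
n <- 10   # number of observations (rows of dat)

dev <- -2 * logLik_fit      # the "deviance" that glmmTMB reports
aic <- dev + 2 * k          # AIC = -2 logLik + 2k
bic <- dev + k * log(n)     # BIC = -2 logLik + k log(n)

c(deviance = dev, AIC = aic, BIC = bic)
```

Note that the deviance obtained this way (38.6) is -2 times the log-likelihood, which is not the same quantity as the residual deviance (5.84) reported by glm.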

Conclusions:

- Intercept: when x is equal to zero, the odds of success are 55.62 to 1. That is, a success is 55.62 times as likely as a failure.

- Slope: for every 1 unit increase in x, the odds of success change by a factor of 0.774. That is, the odds of success decline by nearly a quarter (to roughly 3/4 of their previous value) for every 1 unit increase in x.

From an inference testing perspective, we would reject the null hypothesis of no relationship.

summary(dat.gamlssL)

******************************************************************

Family: c("BI", "Binomial")

Call: gamlss(formula = cbind(success, failure) ~ x, family = BI(), data = dat)

Fitting method: RS()

------------------------------------------------------------------

Mu link function: logit

Mu Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.01854 1.08805 3.693 0.0061 **

x -0.25622 0.06803 -3.766 0.0055 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

------------------------------------------------------------------

No. of observations in the fit: 10

Degrees of Freedom for the fit: 2

Residual Deg. of Freedom: 8

at cycle: 2

Global Deviance: 38.62224

AIC: 42.62224

SBC: 43.22741

******************************************************************

exp(coef(dat.gamlssL))

(Intercept) x

55.6200134 0.7739699

library(broom)
tidy(dat.gamlssL)

parameter term estimate std.error statistic p.value

1 mu (Intercept) 4.0185431 1.0880241 3.693432 0.006099988

2 mu x -0.2562222 0.0680295 -3.766340 0.005494302

glance(dat.gamlssL)

# A tibble: 1 x 6

df logLik AIC BIC deviance df.residual

<int> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0 -19.3 42.6 43.2 38.6 8.

Conclusions:

- Intercept: when x is equal to zero, the odds of success are 55.62 to 1. That is, a success is 55.62 times as likely as a failure.

- Slope: for every 1 unit increase in x, the odds of success change by a factor of 0.774. That is, the odds of success decline by nearly a quarter (to roughly 3/4 of their previous value) for every 1 unit increase in x.

From an inference testing perspective, we would reject the null hypothesis of no relationship.
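The gamlss estimates and standard errors match those from glm and glmmTMB, yet the p-values are larger. The gamlss table is labelled with t values, and the reported p-values are consistent with a t reference distribution on the 8 residual degrees of freedom, whereas the other two fits use a standard normal reference. The sketch below recomputes both sets of p-values from the test statistics shown in the tidy output. The variable names are illustrative only.

```r
# Test statistics copied from tidy(dat.gamlssL)
stat <- c(intercept = 3.693432, x = -3.766340)
df_resid <- 8

# Two-sided p-values against a standard normal (as in glm / glmmTMB)
p_z <- 2 * pnorm(-abs(stat))

# Two-sided p-values against a t distribution with 8 df (as in gamlss)
p_t <- 2 * pt(-abs(stat), df = df_resid)

signif(rbind(p_z, p_t), 3)
```

Either way the slope is clearly distinguishable from zero, so the conclusion is unchanged.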

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$\text{quasi } R^2 = 1-\left(\frac{\text{deviance}}{\text{null deviance}}\right)$$

1 - (dat.glmL$deviance/dat.glmL$null.deviance)

[1] 0.722866

We might also be interested in the LD50 - the value of $x$ at which the predicted probability of success is 0.5, and thus where the probability switches from favoring 1 to favoring 0. LD50 is calculated as: $$LD50 = - \frac{intercept}{slope}$$

-coef(dat.glmL)[1]/coef(dat.glmL)[2]

(Intercept)

15.68382
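The LD50 formula follows from setting the linear predictor to zero: a probability of 0.5 corresponds to log odds of 0, so $intercept + slope \times x = 0$ gives $x = -intercept/slope$. The same reasoning extends to any other probability $p$ by replacing 0 with $logit(p)$. A minimal sketch, using the rounded coefficients from the summary table and a hypothetical helper called `ld()`:

```r
# Coefficients copied (rounded) from the summary table
b0 <- 4.01854
b1 <- -0.25622

# Value of x at which the predicted probability equals p
# (hypothetical helper, not part of any package)
ld <- function(p, b0, b1) (qlogis(p) - b0) / b1

ld50 <- ld(0.5, b0, b1)
ld50

# Check: the predicted probability at the LD50 should be exactly 0.5
plogis(b0 + b1 * ld50)

# The same helper gives other thresholds, e.g. the x values at which
# the probability of success is 0.9 and 0.1
ld(c(0.9, 0.1), b0, b1)
```

For glm fits, `MASS::dose.p()` returns the same kind of estimate together with an approximate standard error.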

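A measure of the strength of the relationship can be obtained according to: $$\text{quasi } R^2 = 1-\left(\frac{\text{deviance}}{\text{null deviance}}\right)$$ The deviance that glmmTMB reports is $-2\log(L)$ rather than the residual deviance, so the calculation below instead uses the likelihood-ratio (Cox and Snell) form, $1-\exp\left(\frac{2}{n}(\log L_0 - \log L)\right)$, where $L_0$ is the likelihood of the intercept-only model.

1 - exp((2/nrow(dat)) * (logLik(update(dat.glmmTMBL, ~1))[1] - logLik(dat.glmmTMBL)[1]))

[1] 0.7822599

# This does not seem to work for proportional data. The formula above relies on deviance being
# the residual deviance, that is -2*log(L/L_s) where L_s is the likelihood of the saturated model,
# rather than -2*log(L)
1 - (as.numeric(-2 * logLik(dat.glmmTMBL))/as.numeric(-2 * logLik(update(dat.glmmTMBL, ~1))))

[1] 0.2830044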

MuMIn::r.squaredGLMM(dat.glmmTMBL) # only seems to work when it is a mixed model

Error in reformulate(vapply(lapply(.findbars(form), "[[", 2L), deparse, : 'termlabels' must be a character vector of length at least one
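The different R-squared style values reported for this model can be tied together using only the deviances from the glm summary. Twice the difference in log-likelihood between the fitted and intercept-only models equals the null deviance minus the residual deviance (the saturated-model term cancels), so both the deviance-based quasi R-squared and the likelihood-ratio (Cox and Snell) version can be computed by hand. This is a sketch with illustrative variable names, not a replacement for the model-based calculations.

```r
# Deviances copied from summary(dat.glmL); n is the number of observations
null_dev <- 21.0890
res_dev  <- 5.8445
n <- 10

# Deviance-based quasi R^2
quasi_r2 <- 1 - res_dev / null_dev

# Likelihood-ratio (Cox and Snell) R^2:
# 1 - exp((2/n) * (logLik_0 - logLik)) = 1 - exp(-(null_dev - res_dev)/n)
cs_r2 <- 1 - exp(-(null_dev - res_dev) / n)

round(c(quasi = quasi_r2, cox_snell = cs_r2), 4)
```

The two measures answer slightly different questions, so there is no reason to expect them to agree numerically.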

We might also be interested in the LD50 - the value of $x$ at which the predicted probability of success is 0.5, and thus where the probability switches from favoring 1 to favoring 0. LD50 is calculated as: $$LD50 = - \frac{intercept}{slope}$$

-fixef(dat.glmmTMBL)$cond[1]/fixef(dat.glmmTMBL)$cond[2]

(Intercept)

15.68382

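A measure of the strength of the relationship can be obtained with Rsq(). By default this reports the Cox and Snell generalized $R^2$, $1-\exp\left(\frac{2}{n}(\log L_0 - \log L)\right)$ (where $L_0$ is the likelihood of the intercept-only model), rather than the deviance-based $$\text{quasi } R^2 = 1-\left(\frac{\text{deviance}}{\text{null deviance}}\right)$$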

Rsq(dat.gamlssL)

[1] 0.7822599

We might also be interested in the LD50 - the value of $x$ at which the predicted probability of success is 0.5, and thus where the probability switches from favoring 1 to favoring 0. LD50 is calculated as: $$LD50 = - \frac{intercept}{slope}$$

-coef(dat.gamlssL)[1]/coef(dat.gamlssL)[2]

(Intercept)

15.68382

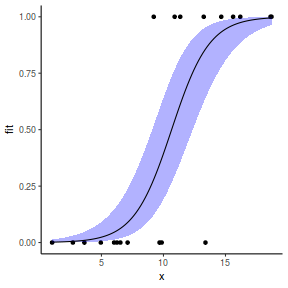

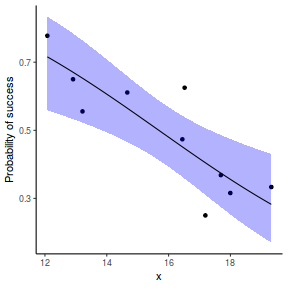

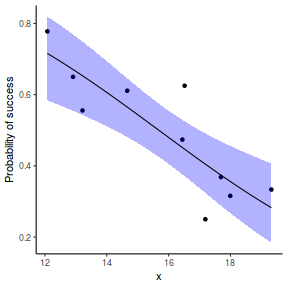

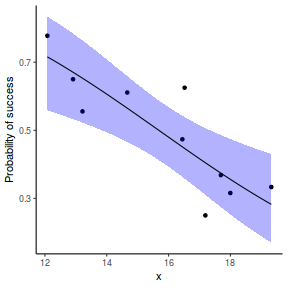

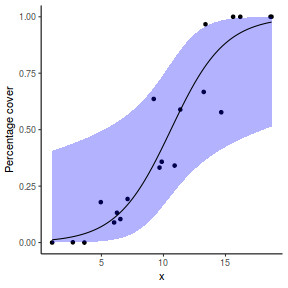

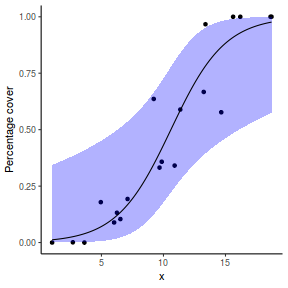

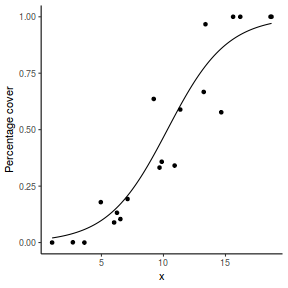

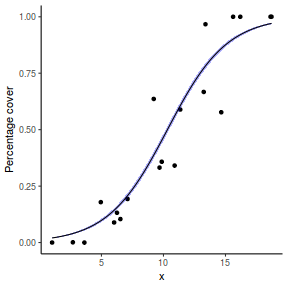

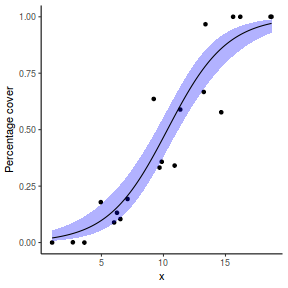

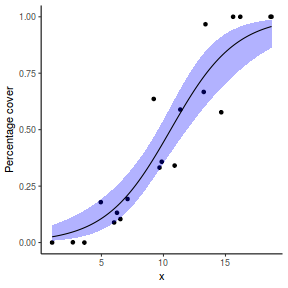

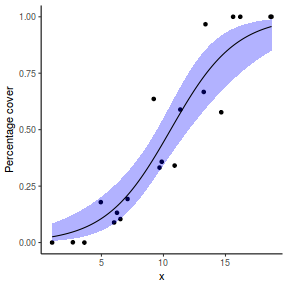

Finally, we will create a summary plot.

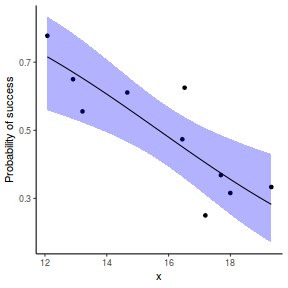

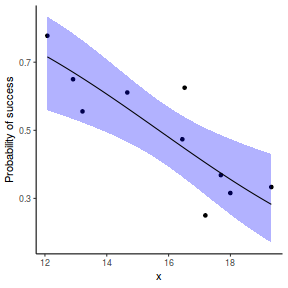

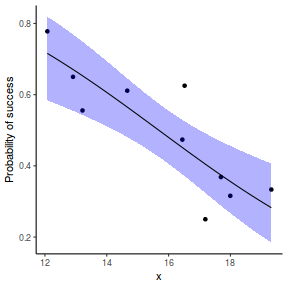

# using predict
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
fit = predict(dat.glmL, newdata = newdata, type = "link", se.fit = TRUE)
q = qt(0.975, df = df.residual(dat.glmL))
newdata = cbind(newdata,
    fit = binomial()$linkinv(fit$fit),
    lower = binomial()$linkinv(fit$fit - q * fit$se.fit),
    upper = binomial()$linkinv(fit$fit + q * fit$se.fit))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## using effects
library(effects)
xlevels = as.list(with(dat, data.frame(x = seq(min(x), max(x), len = 100))))
newdata = as.data.frame(Effect("x", dat.glmL, xlevels = xlevels))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.glmL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = prob, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = asymp.LCL, ymax = asymp.UCL), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

Error in FUN(X[[i]], ...): object 'prob' not found

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = coef(dat.glmL)
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.glmL) %*% t(Xmat)))
q = qt(0.975, df = df.residual(dat.glmL))
newdata = cbind(newdata,
    fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()
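The manual approach computes the fit and its standard error on the link (log odds) scale and only then back-transforms, which keeps the interval within (0,1). The following self-contained sketch illustrates this on a small simulated data set (the `sim` data frame and its parameters are hypothetical and are not the tutorial's `dat`), and confirms that the matrix calculation agrees with `predict()`. It uses a normal quantile for the interval, which is an assumption of this sketch; the glm code in this section uses a t quantile instead.

```r
set.seed(1)

# Hypothetical data: 10 groups of 10 trials each (not the tutorial's dat)
sim <- data.frame(x = seq(2, 20, by = 2))
sim$success <- rbinom(10, size = 10, prob = plogis(4 - 0.25 * sim$x))
sim$failure <- 10 - sim$success
fit <- glm(cbind(success, failure) ~ x, family = binomial, data = sim)

# Prediction grid and its model matrix
grid <- data.frame(x = seq(min(sim$x), max(sim$x), length.out = 50))
Xmat <- model.matrix(~x, data = grid)

# Fit and standard error on the link (log odds) scale
eta <- as.vector(Xmat %*% coef(fit))
se  <- unname(sqrt(diag(Xmat %*% vcov(fit) %*% t(Xmat))))

# Back-transform the fit and interval limits to the probability scale
q <- qnorm(0.975)
grid$fit   <- plogis(eta)
grid$lower <- plogis(eta - q * se)
grid$upper <- plogis(eta + q * se)

# The same link-scale quantities from predict()
pred <- predict(fit, newdata = grid, type = "link", se.fit = TRUE)
all.equal(eta, unname(pred$fit))
all.equal(se, unname(pred$se.fit))
head(grid)
```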

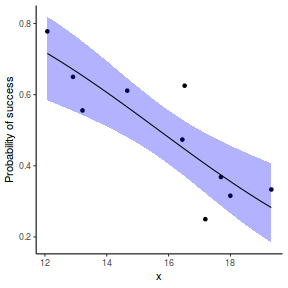

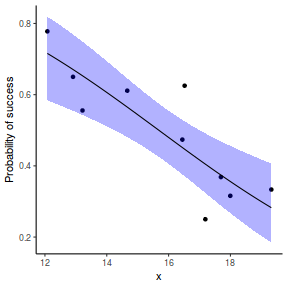

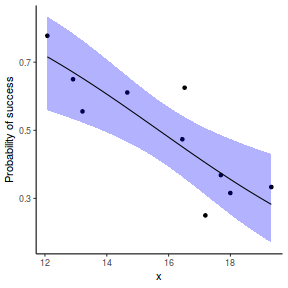

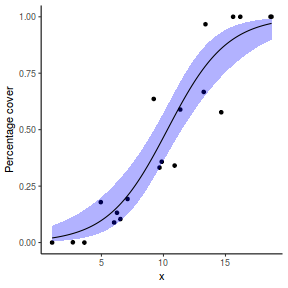

# using predict
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
fit = predict(dat.glmmTMBL, newdata = newdata, type = "link", se.fit = TRUE)
q = qt(0.975, df = df.residual(dat.glmmTMBL))
newdata = cbind(newdata,
    fit = binomial()$linkinv(fit$fit),
    lower = binomial()$linkinv(fit$fit - q * fit$se.fit),
    upper = binomial()$linkinv(fit$fit + q * fit$se.fit))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## using effects
library(effects)
xlevels = as.list(with(dat, data.frame(x = seq(min(x), max(x), len = 100))))
newdata = as.data.frame(Effect("x", dat.glmmTMBL, xlevels = xlevels))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.glmmTMBL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = prob, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower.CL, ymax = upper.CL), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = fixef(dat.glmmTMBL)$cond
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.glmmTMBL)$cond %*% t(Xmat)))
q = qnorm(0.975)
newdata = cbind(newdata,
    fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## using predict - unfortunately, this still does not support se!
## using effects - not yet supported
## using emmeans
library(emmeans)
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
newdata = summary(emmeans(ref_grid(dat.gamlssL, newdata), ~x), type = "response")
ggplot(data = newdata, aes(y = response, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = asymp.LCL, ymax = asymp.UCL), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

## or manually
newdata = with(dat, data.frame(x = seq(min(x), max(x), len = 100)))
Xmat = model.matrix(~x, data = newdata)
coefs = coef(dat.gamlssL)
fit = as.vector(coefs %*% t(Xmat))
se = sqrt(diag(Xmat %*% vcov(dat.gamlssL) %*% t(Xmat)))
q = qt(0.975, df = df.residual(dat.gamlssL))
newdata = cbind(newdata,
    fit = binomial()$linkinv(fit),
    lower = binomial()$linkinv(fit - q * se),
    upper = binomial()$linkinv(fit + q * se))
ggplot(data = newdata, aes(y = fit, x = x)) +
    geom_point(data = dat, aes(y = success/(success + failure))) +
    geom_ribbon(aes(ymin = lower, ymax = upper), fill = "blue", alpha = 0.3) +
    geom_line() +
    scale_y_continuous("Probability of success") +
    theme_classic()

Percentage data

Scenario and Data

In some ways, percentage data is similar to proportional data. In both cases, the expected values (ratio in the case of proportional data) are in the range from 0 to 1. However, the observations themselves have different geneses. For example, whereas for the proportional data, the observations (e.g. successes out of a total number of trials) are likely to be drawn from a binomial distribution, the same cannot be said for percentage data (as these are not counts).

Some modeling routines in R allow fractions to be modelled against a binomial distribution. Others are more strict and do not allow this. Arguably, percentage data is more appropriately modelled against a beta distribution.

For this simulated demonstration, let's assume that someone has designed a fairly simple linear regression experiment in which data are recorded along a perceived gradient in X. The measured response is the percent cover of some species. A total of 20 observations were recorded.

- the number of levels of $x$ = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log odds ratio per unit change in x (slope) = 0.5. The magnitude of the slope is an indicator of how abruptly the log odds ratio changes. A very abrupt change would occur if there was very little variability in the trend. That is, a large slope would be indicative of a threshold effect. A lower slope indicates a gradual change in odds ratio and thus a less obvious and precise switch between the likelihood of 1 vs 0.

- the inflection point (point where the slope of the line is greatest) = 10. The inflection point indicates the value of the predictor ($x$) where the expected value is 50% (that is, where the log odds are zero). This is also known as the LD50.

- the intercept (which has no real interpretation) is equal to the negative of the slope multiplied by the inflection point

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor and back-transform it via the inverse logit.

- convert the expected values into the two shape parameters of a beta distribution (shape1=$\alpha$ and shape2=$\beta$). We can express these relative to the expected value ($\mu$) and some nominal variance ($\sigma^2$), given that: $$ \begin{align} \mu &= \frac{\alpha}{\alpha+\beta}\\ \sigma^2 &= \frac{\alpha\beta}{(\alpha+\beta)^2 (\alpha+\beta+1)}\\ \end{align} $$ After a bit of rearranging, we observe that: $$ \begin{align} \alpha &= \left(\frac{1-\mu}{\sigma^2} - \frac{1}{\mu}\right)\mu^2\\ \beta &= \alpha\left(\frac{1}{\mu} - 1\right)\\ \end{align} $$

- the percent covers are drawn from a beta distribution
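The rearrangement used for the beta shape parameters can be sketched as follows. The symbol $\nu = \alpha + \beta$ is introduced here purely as a convenience and does not appear in the original derivation.

$$
\begin{align}
\alpha &= \mu\nu, \qquad \beta = (1-\mu)\nu \qquad \text{where } \nu = \alpha+\beta\\
\sigma^2 &= \frac{\mu\nu\,(1-\mu)\nu}{\nu^2(\nu+1)} = \frac{\mu(1-\mu)}{\nu+1}\\
\nu &= \frac{\mu(1-\mu)}{\sigma^2} - 1\\
\alpha &= \mu\nu = \left(\frac{1-\mu}{\sigma^2} - \frac{1}{\mu}\right)\mu^2\\
\beta &= (1-\mu)\nu = \alpha\left(\frac{1}{\mu} - 1\right)\\
\end{align}
$$

Both shape parameters are positive only when $\nu > 0$, that is, when $\sigma^2 < \mu(1-\mu)$. For expected values very close to 0 or 1, a fixed nominal variance can therefore be too large to correspond to any beta distribution.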

set.seed(8)
# The number of samples
n.x <- 20
# Create x values that are uniformly distributed throughout the range of 1 to 20
x <- sort(runif(n = n.x, min = 1, max = 20))
# The slope is the rate of change in log odds ratio for each unit change in x
# the smaller the slope, the slower the change (more variability in data too)
slope = 0.5
# Inflection point is where the slope of the line is greatest
# this is also the LD50 point
inflect <- 10
# Intercept (no interpretation)
intercept <- -1 * (slope * inflect)
# The linear predictor
linpred <- intercept + slope * x
# Predicted y values
y.pred <- exp(linpred)/(1 + exp(linpred))
# Add some noise by drawing from a beta distribution
# (sigma is used here as the nominal variance)
sigma = 0.01
estBetaParams <- function(mu, var) {
    alpha <- ((1 - mu)/var - 1/mu) * mu^2
    beta <- alpha * (1/mu - 1)
    return(params = list(alpha = alpha, beta = beta))
}
betaShapes = estBetaParams(y.pred, sigma)
y = rbeta(n = n.x, shape1 = betaShapes$alpha, shape2 = betaShapes$beta)
dat <- data.frame(y = round(y, 3), x)
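As a quick check on the moment-matching step, the sketch below converts a few means and a nominal variance into beta shape parameters and then confirms that those shapes reproduce the requested mean and variance. The helper `beta_shapes()` is a hypothetical stand-in written for this illustration (it is algebraically equivalent to `estBetaParams()` but adds a guard on the variance), and the example means are arbitrary.

```r
# Hypothetical helper: beta shape parameters from a mean and variance
beta_shapes <- function(mu, var) {
  # Shapes are only positive when var < mu * (1 - mu)
  stopifnot(all(var < mu * (1 - mu)))
  nu <- mu * (1 - mu) / var - 1
  list(alpha = mu * nu, beta = (1 - mu) * nu)
}

mu <- c(0.2, 0.5, 0.8)
s <- beta_shapes(mu, var = 0.01)

# Recover the mean and variance implied by the shapes
mean_back <- s$alpha / (s$alpha + s$beta)
var_back  <- s$alpha * s$beta /
  ((s$alpha + s$beta)^2 * (s$alpha + s$beta + 1))
rbind(mean_back, var_back)

# Simulated draws for the first mean should have similar moments
set.seed(1)
draws <- rbeta(1e5, shape1 = s$alpha[1], shape2 = s$beta[1])
c(mean = mean(draws), var = var(draws))
```

The guard makes the constraint explicit: without it, a variance that is too large for a given mean silently yields non-positive shape parameters.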

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of a percent cover response - a beta is appropriate).

One important consideration is that the beta distribution is defined on the open interval (0,1), and thus does not include 0 or 1. Often percentage data does have 0 or 1 values. If this is the case, there are a couple of options:

- convert values of 0 into something close to (yet slightly larger than) 0 and do similarly for values of 1.

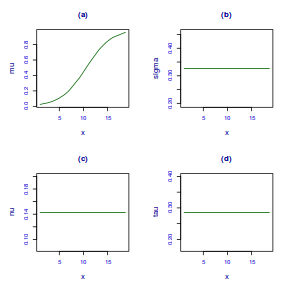

- consider a zero-inflated beta (when there are values of zero), one-inflated beta (when there are values of one), or zero-one-inflated beta (when there are values of zero and one).
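The first option can be sketched in two ways. Neither comes from the original tutorial: the first simply clamps values to a small arbitrary tolerance (`eps`, chosen here for illustration), and the second is a compression transformation often attributed to Smithson and Verkuilen (2006), which shrinks all values slightly towards 0.5. The example vector `y` is made up.

```r
# Made-up proportions that include exact 0 and 1 values
y <- c(0, 0.013, 0.5, 0.987, 1)

# Option A: clamp to an arbitrary small tolerance
eps <- 0.001
y_clamped <- pmin(pmax(y, eps), 1 - eps)

# Option B: compress all values towards 0.5,
# y' = (y * (n - 1) + 0.5) / n, where n is the sample size
n <- length(y)
y_squeezed <- (y * (n - 1) + 0.5) / n

cbind(y, y_clamped, y_squeezed)
```

Clamping alters only the boundary values, whereas the compression alters every observation by an amount that shrinks as the sample size grows. Either choice is somewhat arbitrary, so it is worth checking how sensitive the conclusions are to it.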