| 3 |

0 |

| 2.5 |

1 |

| 6 |

2 |

| 5.5 |

3 |

| 9 |

4 |

| 8.6 |

5 |

| 12 |

6 |

\[

\begin{alignat*}{4}

3.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 0\quad&+\quad&\varepsilon_1\\

2.5\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 1\quad&+\quad&\varepsilon_1\\

6.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 2\quad&+\quad&\varepsilon_2\\

5.5\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 3\quad&+\quad&\varepsilon_3\\

9.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 4\quad&+\quad&\varepsilon_4\\

8.6\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 5\quad&+\quad&\varepsilon_5\\

12.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 6\quad&+\quad&\varepsilon_6\\

\end{alignat*}

\]

|

Estimation

|

\[

\begin{alignat*}{4}

3.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 0\quad&+\quad&\varepsilon_1\\

2.5\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 1\quad&+\quad&\varepsilon_1\\

6.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 2\quad&+\quad&\varepsilon_2\\

5.5\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 3\quad&+\quad&\varepsilon_3\\

9.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 4\quad&+\quad&\varepsilon_4\\

8.6\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 5\quad&+\quad&\varepsilon_5\\

12.0\quad&=\quad&\beta_0\times 1\quad&+\quad&\beta_1\times 6\quad&+\quad&\varepsilon_6\\

\end{alignat*}

\]

|

\[\underbrace{\begin{pmatrix}

3.0\\2.5\\6.0\\5.5\\9.0\\8.6\\12.0

\end{pmatrix}}_\text{Response values} =

\underbrace{\begin{pmatrix}

1&0\\1&1\\1&2\\1&3\\1&4\\1&5\\1&6

\end{pmatrix}}_\text{Model matrix} \times

\underbrace{\begin{pmatrix}

\beta_0\\\beta_1

\end{pmatrix}}_\text{Parameter vector} +

\underbrace{\begin{pmatrix}

\varepsilon_1\\\varepsilon_2\\\varepsilon_3\\\varepsilon_4&\\\varepsilon_5\\\varepsilon_6

\end{pmatrix}}_\text{Residual vector}

\]

Matrix algebra

\[\underbrace{\begin{pmatrix}

3.0\\2.5\\6.0\\5.5\\9.0\\8.6\\12.0

\end{pmatrix}}_\text{Response values} =

\underbrace{\begin{pmatrix}

1&0\\1&1\\1&2\\1&3\\1&4\\1&5\\1&6

\end{pmatrix}}_\text{Model matrix} \times

\underbrace{\begin{pmatrix}

\beta_0\\\beta_1

\end{pmatrix}}_\text{Parameter vector} +

\underbrace{\begin{pmatrix}

\varepsilon_1\\\varepsilon_2\\\varepsilon_3\\\varepsilon_4&\\\varepsilon_5\\\varepsilon_6

\end{pmatrix}}_\text{Residual vector}

\]

\[

Y = X\beta+\epsilon

\]

\[

\hat\beta = (X'X)^{-1}X'Y

\]

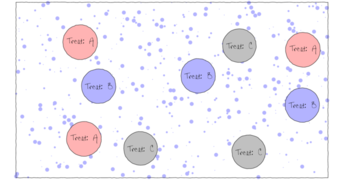

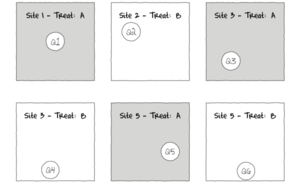

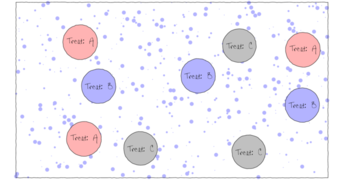

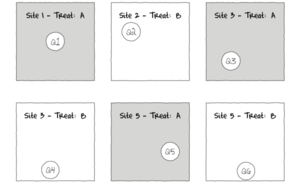

Simple ANOVA

Three treatments (One factor - 3 levels), three replicates

Simple ANOVA

Two treatments, three replicates

Categorical predictor

Y A dummy1 dummy2 dummy3

--- --- -------- -------- --------

2 G1 1 0 0

3 G1 1 0 0

4 G1 1 0 0

6 G2 0 1 0

7 G2 0 1 0

8 G2 0 1 0

10 G3 0 0 1

11 G3 0 0 1

12 G3 0 0 1

\[y_{ij}=\mu+\beta_1(dummy_1)_{ij} + \beta_2(dummy_2)_{ij} +\beta_3(dummy_3)_{ij} + \varepsilon_{ij}\]

Overparameterized

\[y_{ij}=\color{darkorange}{\mu}+\color{darkorange}{\beta_1}(dummy_1)_{ij} + \color{darkorange}{\beta_2}(dummy_2)_{ij} +\color{darkorange}{\beta_3}(dummy_3)_{ij} + \varepsilon_{ij}\]

Y A Intercept dummy1 dummy2 dummy3

--- --- ----------- -------- -------- --------

2 G1 1 1 0 0

3 G1 1 1 0 0

4 G1 1 1 0 0

6 G2 1 0 1 0

7 G2 1 0 1 0

8 G2 1 0 1 0

10 G3 1 0 0 1

11 G3 1 0 0 1

12 G3 1 0 0 1

Overparameterized

\[y_{ij}=\color{darkorange}{\mu}+\color{darkorange}{\beta_1}(dummy_1)_{ij} + \color{darkorange}{\beta_2}(dummy_2)_{ij} +\color{darkorange}{\beta_3}(dummy_3)_{ij} + \varepsilon_{ij}\]

- three treatment groups

- four parameters to estimate

- need to re-parameterize

Categorical predictor

\[y_{i}=\mu+\beta_1(dummy_1)_{i} + \beta_2(dummy_2)_{} +\beta_3(dummy_3)_{i} + \varepsilon_{i}\]

Means parameterization

\[y_{i}=\beta_1(dummy_1)_{i} + \beta_2(dummy_2)_{i} +\beta_3(dummy_3)_{i} + \varepsilon_{ij}\] \[y_{ij}=\alpha_i + \varepsilon_{ij} \quad \mathsf{i=p}\]

Categorical predictor

Means parameterization

\[y_{i}=\beta_1(dummy_1)_{i} + \beta_2(dummy_2)_{i} +\beta_3(dummy_3)_{i} + \varepsilon_{i}\]

Y A dummy1 dummy2 dummy3

--- --- -------- -------- --------

2 G1 1 0 0

3 G1 1 0 0

4 G1 1 0 0

6 G2 0 1 0

7 G2 0 1 0

8 G2 0 1 0

10 G3 0 0 1

11 G3 0 0 1

12 G3 0 0 1

Categorical predictor

Means parameterization

|

Raw data

|

|

Matrix representation

|

|

y

|

A

|

|

2

|

G1

|

|

3

|

G1

|

|

4

|

G1

|

|

6

|

G2

|

|

7

|

G2

|

|

8

|

G2

|

|

10

|

G3

|

|

11

|

G3

|

|

12

|

G3

|

|

|

\[

y_i = \alpha_1 D_{1i} + \alpha_2 D_{2i} + \alpha_3 D_{3i} + \varepsilon_i\\

y_i = \alpha_p+\varepsilon_i, \\ \text{where}~p= \text{number of levels of the factor}\\\text{and } D = \text{dummy variables}\] \[\begin{pmatrix}

2\\3\\4\\6\\7\\8\\10\\11\\12

\end{pmatrix} =

\begin{pmatrix}

1&0&0\\1&0&0\\1&0&0\\0&1&0\\0&1&0\\0&1&0\\0&0&1\\0&0&1\\0&0&1

\end{pmatrix} \times

\begin{pmatrix}

\alpha_1\\\alpha_2\\\alpha_3

\end{pmatrix} +

\begin{pmatrix}

\varepsilon_1\\\varepsilon_2\\\varepsilon_3\\\varepsilon_4&\\\varepsilon_5\\\varepsilon_6\\\varepsilon_7&\\\varepsilon_8\\\varepsilon_9

\end{pmatrix}

\]

|

\[\alpha_1 = 3, \alpha_2 = 7, \alpha_3 = 11\]

Categorical predictor

Means parameterization

|

Parameter

|

Estimates

|

Null hypothesis

|

|

\(\alpha^*_1\)

|

mean of group 1

|

H\(_0\): \(\alpha_1=\alpha_1=0\)

|

|

\(\alpha^*_2\)

|

mean of group 2

|

H\(_0\): \(\alpha_2=\alpha_2=0\)

|

|

\(\alpha^*_3\)

|

mean of group 3

|

H\(_0\): \(\alpha_3=\alpha_3=0\)

|

|

…

| |

|

> summary(lm(Y~-1+A))$coef

Estimate Std. Error t value Pr(>|t|)

AG1 3 0.5773503 5.196152 2.022368e-03

AG2 7 0.5773503 12.124356 1.913030e-05

AG3 11 0.5773503 19.052559 1.351732e-06

- but typically interested exploring effects

Categorical predictor

\[y_{i}=\mu+\beta_1(dummy_1)_{i} + \beta_2(dummy_2)_{i} +\beta_3(dummy_3)_{i} + \varepsilon_{i}\]

Effects parameterization

\[y_{ij}=\mu+\beta_2(dummy_2)_{ij} + \beta_3(dummy_3)_{ij} + \varepsilon_{ij}\] \[y_{ij}=\mu + \alpha_i + \varepsilon_{ij} \qquad \mathsf{i=p-1}\]

Categorical predictor

Effects parameterization

\[y_{i}=\alpha + \beta_2(dummy_2)_{i} +\beta_3(dummy_3)_{i} + \varepsilon_{i}\]

Y A alpha dummy2 dummy3

--- --- ------- -------- --------

2 G1 1 0 0

3 G1 1 0 0

4 G1 1 0 0

6 G2 1 1 0

7 G2 1 1 0

8 G2 1 1 0

10 G3 1 0 1

11 G3 1 0 1

12 G3 1 0 1

Categorical predictor

Effects parameterization

|

Raw data

|

|

Matrix representation

|

|

y

|

A

|

|

2

|

G1

|

|

3

|

G1

|

|

4

|

G1

|

|

6

|

G2

|

|

7

|

G2

|

|

8

|

G2

|

|

10

|

G3

|

|

11

|

G3

|

|

12

|

G3

|

|

|

\[y_i = \mu + \alpha_p+\varepsilon_i, \\ \text{where}~p= \text{number of levels minus 1}\] \[\begin{pmatrix}

2\\3\\4\\6\\7\\8\\10\\11\\12

\end{pmatrix} =

\begin{pmatrix}

1&0&0\\1&0&0\\1&0&0\\1&1&0\\1&1&0\\1&1&0\\1&0&1\\1&0&1\\1&0&1

\end{pmatrix} \times

\begin{pmatrix}

\mu\\\alpha_2\\\alpha_3

\end{pmatrix} +

\begin{pmatrix}

\varepsilon_1\\\varepsilon_2\\\varepsilon_3\\\varepsilon_4&\\\varepsilon_5\\\varepsilon_6\\\varepsilon_7&\\\varepsilon_8\\\varepsilon_9

\end{pmatrix}

\]

|

\[\alpha_1 = 3, \alpha_2 = 4, \alpha_3 = 8\]

Categorical predictor

Treatment contrasts

|

Parameter

|

Estimates

|

Null hypothesis

|

|

\(Intercept\)

|

mean of control group (\(\mu_1\))

|

H\(_0\): \(\mu=\mu_1=0\)

|

|

\(\alpha^*_2\)

|

mean of group 2 minus mean of control group (\(\mu_2-\mu_1\))

|

H\(_0\): \(\alpha^*_2=\mu_2-\mu_1=0\)

|

|

\(\alpha^*_3\)

|

mean of group 3 minus mean of control group (\(\mu_3-\mu_1\))

|

H\(_0\): \(\alpha^*_3=\mu_3-\mu_1=0\)

|

|

…

| |

|

> contrasts(A) <-contr.treatment

> contrasts(A)

2 3

G1 0 0

G2 1 0

G3 0 1

> summary(lm(Y~A))$coef

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3 0.5773503 5.196152 2.022368e-03

A2 4 0.8164966 4.898979 2.713682e-03

A3 8 0.8164966 9.797959 6.506149e-05

Categorical predictor

Treatment contrasts

|

Parameter

|

Estimates

|

Null hypothesis

|

|

\(Intercept\)

|

mean of control group (\(\mu_1\))

|

H\(_0\): \(\mu=\mu_1=0\)

|

|

\(\alpha^*_2\)

|

mean of group 2 minus mean of control group (\(\mu_2-\mu_1\))

|

H\(_0\): \(\alpha^*_2=\mu_2-\mu_1=0\)

|

|

\(\alpha^*_3\)

|

mean of group 3 minus mean of control group (\(\mu_3-\mu_1\))

|

H\(_0\): \(\alpha^*_3=\mu_3-\mu_1=0\)

|

|

…

| |

|

> summary(lm(Y~A))$coef

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3 0.5773503 5.196152 2.022368e-03

A2 4 0.8164966 4.898979 2.713682e-03

A3 8 0.8164966 9.797959 6.506149e-05

Categorical predictor

User defined contrasts

User defined contrasts

Grp2 vs Grp3

Grp1 vs (Grp2 & Grp3)

|

Grp1

|

Grp2

|

Grp3

|

|

\(\alpha_2\)

|

0

|

1

|

-1

|

|

\(\alpha_3\)

|

1

|

-0.5

|

-0.5

|

> contrasts(A) <- cbind(c(0,1,-1),c(1, -0.5, -0.5))

> contrasts(A)

[,1] [,2]

G1 0 1.0

G2 1 -0.5

G3 -1 -0.5

Categorical predictor

User defined contrasts

- \(p -1\) comparisons (contrasts)

- all contrasts must be orthogonal

Categorical predictor

Orthogonality

Four groups (A, B, C, D)

\(p-1=3~\) comparisons

- A vs B :: A \(>\) B

- B vs C :: B \(>\) C

- A vs C ::

Categorical predictor

User defined contrasts

> contrasts(A) <- cbind(c(0,1,-1),c(1, -0.5, -0.5))

> contrasts(A)

[,1] [,2]

G1 0 1.0

G2 1 -0.5

G3 -1 -0.5

\[

\begin{array}{rcl}

0\times 1.0 &=& 0\\

1\times -0.5 &=& -0.5\\

-1\times -0.5 &=& 0.5\\

sum &=& 0

\end{array}

\]

Categorical predictor

User defined contrasts

> contrasts(A) <- cbind(c(0,1,-1),c(1, -0.5, -0.5))

> contrasts(A)

[,1] [,2]

G1 0 1.0

G2 1 -0.5

G3 -1 -0.5

> crossprod(contrasts(A))

[,1] [,2]

[1,] 2 0.0

[2,] 0 1.5

> summary(lm(Y~A))$coef

Estimate Std. Error t value Pr(>|t|)

(Intercept) 7 0.3333333 21.000000 7.595904e-07

A1 -2 0.4082483 -4.898979 2.713682e-03

A2 -4 0.4714045 -8.485281 1.465426e-04

Categorical predictor

User defined contrasts

> contrasts(A) <- cbind(c(1, -0.5, -0.5),c(1,-1,0))

> contrasts(A)

[,1] [,2]

G1 1.0 1

G2 -0.5 -1

G3 -0.5 0

> crossprod(contrasts(A))

[,1] [,2]

[1,] 1.5 1.5

[2,] 1.5 2.0

Partitioning of variance (ANOVA)

ANOVA

Partitioning variance

ANOVA

Partitioning variance

> anova(lm(Y~A))

Analysis of Variance Table

Response: Y

Df Sum Sq Mean Sq F value Pr(>F)

A 2 96 48 48 0.0002035 ***

Residuals 6 6 1

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Categorical predictor

Post-hoc comparisons

|

No. of Groups

|

No. of comparisons

|

Familywise Type I error probability

|

|

3

|

3

|

0.14

|

|

5

|

10

|

0.40

|

|

10

|

45

|

0.90

|

Categorical predictor

Post-hoc comparisons

Bonferoni

> summary(lm(Y~A))$coef

Estimate Std. Error t value Pr(>|t|)

(Intercept) 7 0.3333333 21.000000 7.595904e-07

A1 -8 0.9428090 -8.485281 1.465426e-04

A2 4 0.8164966 4.898979 2.713682e-03

> 0.05/3

[1] 0.01666667

Categorical predictor

Post-hoc comparisons

Tukey’s test

> library(multcomp)

> data.lm<-lm(Y~A)

> summary(glht(data.lm, linfct=mcp(A="Tukey")))

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lm(formula = Y ~ A)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

G2 - G1 == 0 4.0000 0.8165 4.899 0.00653 **

G3 - G1 == 0 8.0000 0.8165 9.798 < 0.001 ***

G3 - G2 == 0 4.0000 0.8165 4.899 0.00679 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

Assumptions

- Normality

- Homogeneity of variance

- Independence

Worked Examples

|

Format of day.csv data files

|

|---|

|

TREAT

|

BARNACLE

|

|---|

|

ALG1

|

27

|

|

..

|

..

|

|

ALG2

|

24

|

|

..

|

..

|

|

NB

|

9

|

|

..

|

..

|

|

S

|

12

|

|

..

|

..

|

|

TREAT

|

Categorical listing of surface types. ALG1 = algal species 1, ALG2 = algal species 2, NB = naturally bare surface, S = scraped bare surface.

|

|

BARNACLE

|

The number of newly recruited barnacles on each plot after 4 weeks.

|

|

|

> day <- read.csv('../data/day.csv', strip.white=T)

> head(day)

TREAT BARNACLE

1 ALG1 27

2 ALG1 19

3 ALG1 18

4 ALG1 23

5 ALG1 25

6 ALG2 24

Worked Examples

Question: what effects do different substrate types have on barnacle recruitment

Linear model:

\[

Barnacle_{i} = \mu + \alpha_j +\varepsilon_i \hspace{1cm} \varepsilon \sim{} \mathcal{N}(0, \sigma^2)

\]

Worked Examples

|

Format of partridge.csv data files

|

|---|

|

GROUP

|

LONGEVITY

|

|---|

|

PREG8

|

35

|

|

..

|

..

|

|

NON0

|

40

|

|

..

|

..

|

|

PREG1

|

46

|

|

..

|

..

|

|

VIRGIN1

|

21

|

|

..

|

..

|

|

VIRGIN8

|

16

|

|

..

|

..

|

|

GROUP

|

Categorical listing of female partner type.

PREG1 = 1 pregnant partner, NONE0 = no female partners, PREG8 = 8 pregnant partners, VIRGIN1 = 1 virgin partner, VIRGIN8 = 8 virgin partners.

Groups 1,2,3 - Control groups

Groups 4,5 - Experimental groups.

|

|

LONGEVITY

|

Longevity of male fruitflies (days)

|

|

|

> partridge <- read.csv('../data/partridge.csv', strip.white=T)

> head(partridge)

GROUP LONGEVITY

1 PREG8 35

2 PREG8 37

3 PREG8 49

4 PREG8 46

5 PREG8 63

6 PREG8 39

> str(partridge)

'data.frame': 125 obs. of 2 variables:

$ GROUP : Factor w/ 5 levels "NONE0","PREG1",..: 3 3 3 3 3 3 3 3 3 3 ...

$ LONGEVITY: int 35 37 49 46 63 39 46 56 63 65 ...

Worked Examples

Question: what effects does mating have on the longevity of male fruitflies

Linear model:

\[

Longevity_{i} = \mu + \alpha_j +\varepsilon_i \hspace{1cm} \varepsilon \sim{} \mathcal{N}(0, \sigma^2)

\]

|